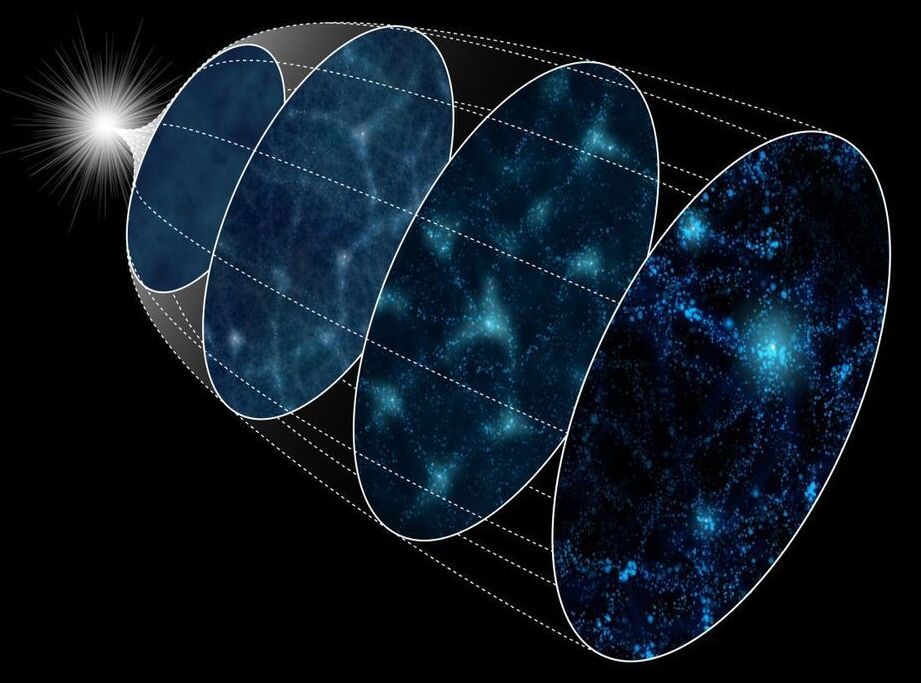

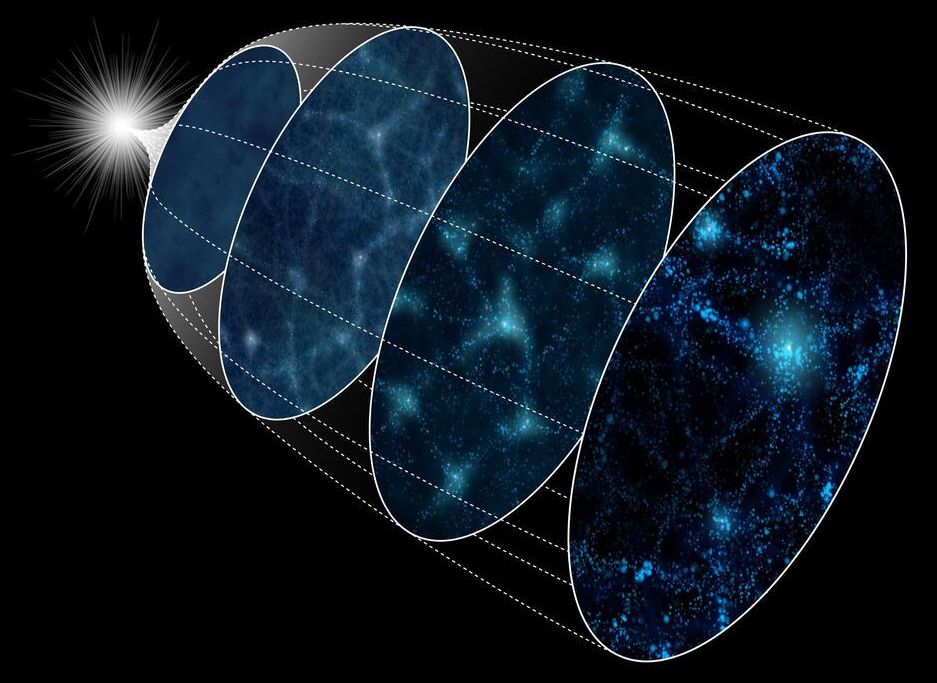

A supercomputer presses the rewind button on the universe’s creation.

Cosmologists simulated 4000 versions of the universe to understand what its structure today tells us about its origins.

In the absence of a TARDIS or Doc Brown’s DeLorean, how can you go back in time to see what supposedly happened when the universe exploded into being?

Astronomers have tested a method for reconstructing the state of the early universe by applying it to 4000 simulated universes on the ATERUI II supercomputer at the National Astronomical Observatory of Japan (NAOJ). They found that, combined with new observations, the method can place tighter constraints on inflation, one of the most enigmatic events in the history of the universe. It can also shorten the observation time required to distinguish between competing theories of inflation.

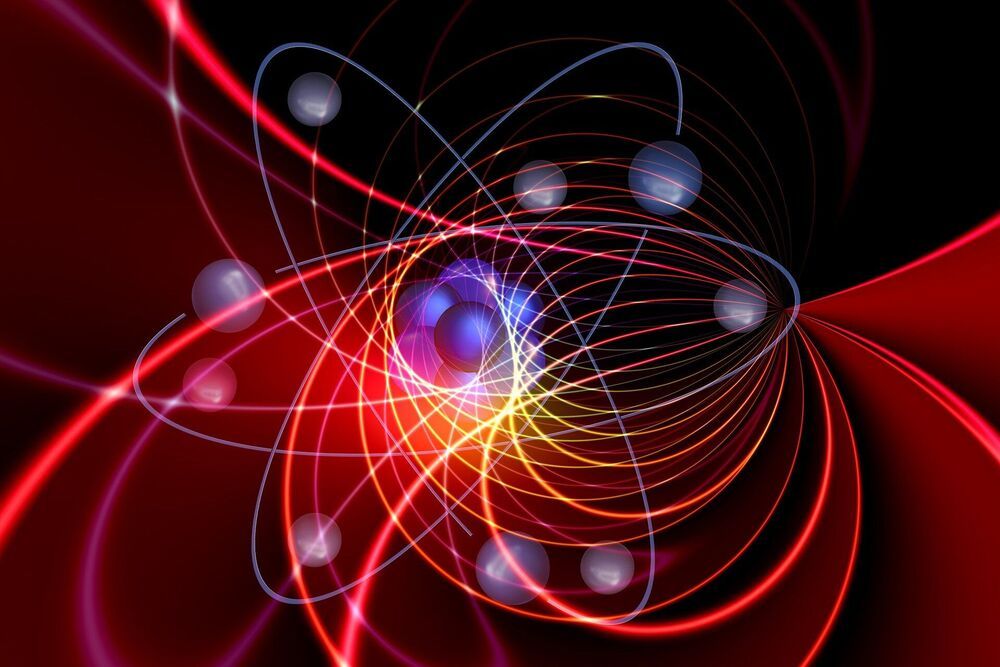

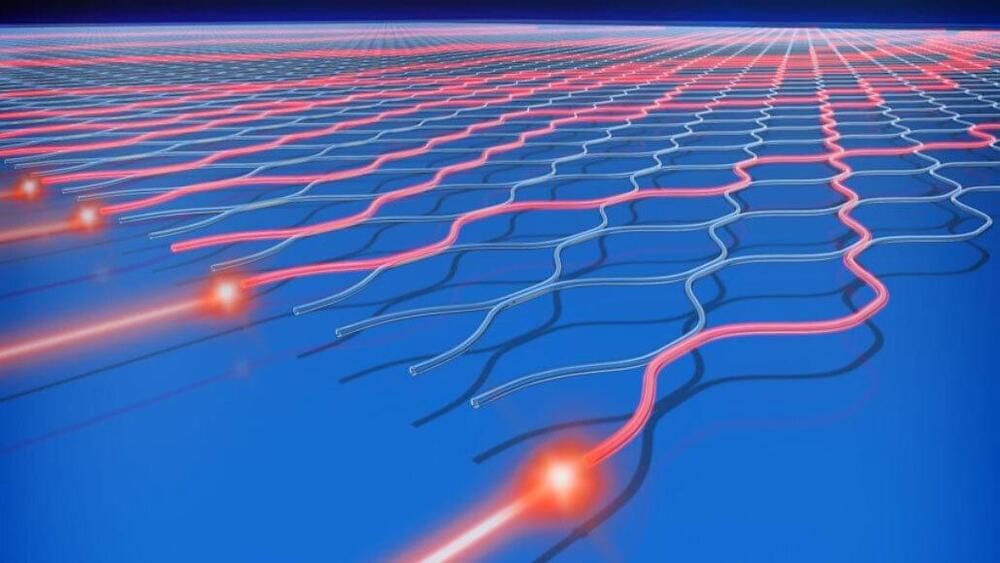

To build a universal quantum computer from fragile quantum components, effective quantum error correction (QEC) is both an essential requirement and a central challenge. Quantum computing has the potential to solve scientific problems beyond the reach of supercomputers, and QEC protects the quantum information it relies on from errors caused by various sources of noise.
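As a hedged illustration only, the sketch below shows the classical idea that the simplest QEC codes build on: a three-bit repetition code with majority-vote decoding. It is not the scheme from the research described here. Real quantum codes cannot simply copy qubits (the no-cloning theorem forbids it); the three-qubit bit-flip code instead encodes α|0⟩ + β|1⟩ as α|000⟩ + β|111⟩ and locates errors through parity measurements. The sketch assumes each physical bit flips independently with probability `p` and ignores every other kind of noise.

```python
from itertools import product


def encode(bit: int) -> list[int]:
    """Three-bit repetition code: store the logical bit in three physical bits."""
    return [bit] * 3


def decode(bits: list[int]) -> int:
    """Majority vote: recovers the logical bit if at most one physical bit flipped."""
    return 1 if sum(bits) >= 2 else 0


def logical_error_rate(p: float) -> float:
    """Exact probability that decoding fails, assuming independent bit flips with probability p.

    Only logical 0 is checked; by symmetry the rate for logical 1 is identical.
    """
    total = 0.0
    for flips in product((0, 1), repeat=3):  # enumerate all 8 bit-flip patterns
        prob = 1.0
        for f in flips:
            prob *= p if f else 1 - p
        received = [b ^ f for b, f in zip(encode(0), flips)]
        if decode(received) != 0:  # two or more flips fool the majority vote
            total += prob
    return total


for p in (0.01, 0.1):
    print(f"physical error rate {p}: logical error rate {logical_error_rate(p):.6f}")
```

For small `p` the exact failure rate works out to 3p² − 2p³, well below `p` itself, which is the basic reason redundancy helps; the advantage disappears once `p` reaches 0.5.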

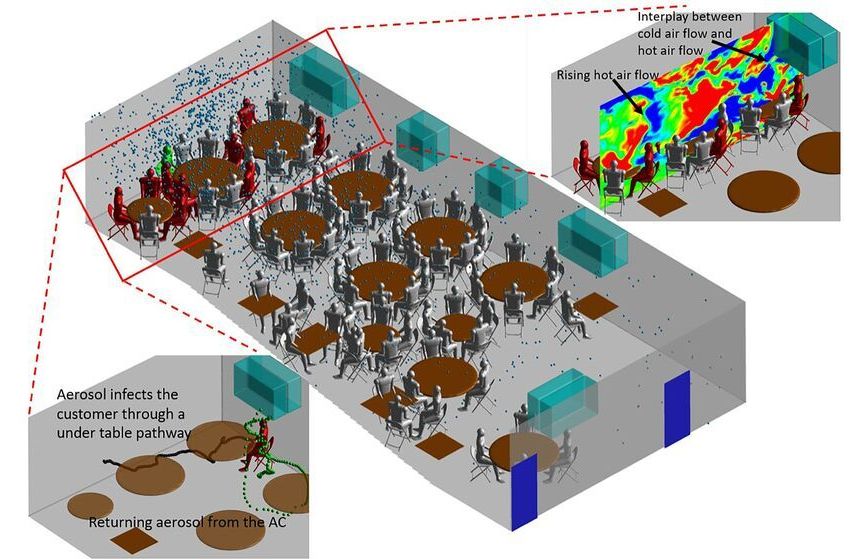

The detailed physical processes and pathways involved in the transmission of COVID-19 are still not well understood. Researchers decided to use advanced computational fluid dynamics tools on supercomputers to deepen understanding of transmission and provide a quantitative assessment of how different environmental factors influence transmission pathways and airborne infection risk.

Physicists have discovered a potentially game-changing feature of quantum bit behavior that would allow scientists to simulate complex quantum systems without the need for enormous computing power.

For some time, the development of the next generation of quantum computers has been limited by the processing speed of conventional CPUs.

Even the world’s fastest supercomputers are not powerful enough, and existing quantum computers are still too small, to model moderate-sized quantum structures such as quantum processors.

Circa 2020

Researchers in China claim to have achieved quantum supremacy, the point where a quantum computer completes a task that would be virtually impossible for a classical computer to perform. The device, named Jiuzhang, reportedly conducted a calculation in 200 seconds that would take a regular supercomputer a staggering 2.5 billion years to complete.

Traditional computers process data as binary bits – either a zero or a one. Quantum computers, on the other hand, have a distinct advantage in that their bits can also be in a superposition of one and zero at the same time. That makes the number of states they can represent grow exponentially: two quantum bits (qubits) can be in a superposition of four possible states, three qubits of eight, and in general n qubits of 2^n.

That means quantum computers can explore many possibilities simultaneously, while a classical computer would have to run through each option one after the other. Progress so far has seen quantum computers perform some calculations much faster than traditional ones, but their ultimate test will come when they can do things that classical computers simply can’t. That milestone has been dubbed “quantum supremacy.”
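The exponential growth in the number of states can be made concrete with a few lines of Python. This minimal sketch lists the basis states of an n-qubit register and estimates how much memory a brute-force classical simulator would need to store one complex amplitude per state, which is also why modeling even moderate-sized quantum systems overwhelms supercomputers. The 16-byte (complex128) amplitude size is an assumption, not a figure from the articles above.

```python
from itertools import product


def basis_states(n_qubits: int) -> list[str]:
    """List the computational basis states of an n-qubit register, e.g. '01'."""
    return ["".join(bits) for bits in product("01", repeat=n_qubits)]


def statevector_bytes(n_qubits: int, bytes_per_amplitude: int = 16) -> int:
    """Memory to store one complex amplitude per basis state.

    Assumes complex128 amplitudes (two 64-bit floats, 16 bytes each).
    """
    return (2 ** n_qubits) * bytes_per_amplitude


for n in (1, 2, 3):
    states = basis_states(n)
    print(f"{n} qubit(s): {len(states)} states {states}")

for n in (30, 40, 50):
    # 30 qubits: 16 GiB; 40 qubits: 16,384 GiB; 50 qubits: 16,777,216 GiB (16 PiB)
    print(f"{n} qubits: {statevector_bytes(n) // 2**30:,} GiB")
```

Each added qubit doubles the memory, so a classical simulator that handles 30 qubits on a workstation needs roughly a million times more storage for 50.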

Lightmatter bets that optical computing can solve AI’s efficiency problem.