In this presentation, Dr. Roman V. Yampolskiy provides a rigorous examination of the fundamental limitations of Artificial Intelligence, arguing that as systems approach and surpass human-level intelligence, they become inherently unexplainable, unpredictable, and uncontrollable. He illustrates how the black box nature of deep learning prevents full audits of decision-making, while concepts like computational irreducibility suggest we cannot forecast the actions of a smarter agent without running it – often until it is too late for safety. He asserts that there is currently no evidence or mathematical proof to guarantee that a superintelligent system can be safely contained or aligned with human values.

Dr. Yampolskiy further bridges theoretical computer science with safety engineering by applying impossibility results, such as the Halting Problem and Rice’s Theorem, to demonstrate that certain safety guarantees for Artificial General Intelligence (AGI) are mathematically unreachable. These technical impediments lead to a sobering discussion on existential risk, where the inability to verify or monitor advanced systems results in an alarmingly high probability of catastrophic outcomes. By analysing why advanced AI defies traditional engineering safety standards, he makes the case that current trajectories may lead to irreversible consequences for humanity.
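The summary above does not reproduce the proofs, so the following is only a minimal sketch of the classic diagonal argument behind the Halting Problem, not material from the talk. The names `make_contrarian`, `refute`, and `claims_halts` are hypothetical, and the candidate decider is assumed to be deterministic. Given any function that claims to decide whether a program halts, the sketch builds a program that does the opposite of whatever the decider predicts about it, so the decider is wrong on at least one input. Rice's Theorem extends the same idea to every non-trivial property of a program's behaviour.

```python
from typing import Callable

Program = Callable[[], None]
Decider = Callable[[Program], bool]


def make_contrarian(claims_halts: Decider) -> Program:
    """Build a program that contradicts the decider's verdict about itself."""

    def contrarian() -> None:
        if claims_halts(contrarian):
            # The decider predicted "halts", so loop forever instead.
            while True:
                pass
        # The decider predicted "loops forever", so return immediately.

    return contrarian


def refute(claims_halts: Decider) -> str:
    """Show one input on which the candidate decider gives the wrong answer."""
    program = make_contrarian(claims_halts)
    if claims_halts(program):
        # Do not run the program here: by construction it would never return.
        return "decider says 'halts', but the program would loop forever"
    program()  # Safe to run: this branch of the program returns at once.
    return "decider says 'loops', but the program halted"


if __name__ == "__main__":
    print(refute(lambda program: True))   # an over-optimistic decider
    print(refute(lambda program: False))  # an over-pessimistic decider
```

Any candidate passed to `refute` is caught out the same way, which is why no general-purpose halting checker can exist.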

To conclude, the talk shifts toward potential pathways for mitigation, emphasising the urgent need to prioritise specialised, narrow AI over the pursuit of general superintelligence. Dr. Yampolskiy argues that while narrow AI can solve global challenges within controllable parameters, the pursuit of AGI represents an existential gamble. He calls for a shift in the research community from a “move fast and break things” mentality to a mathematically grounded approach, urging that we must prove a problem is solvable before investing billions into its deployment.


Why numbers are more real than atoms (Part 1) Mathematical Realism

Like the mathematical universe.

This video about mathematical realism will probably not benefit you in any way. Enjoy. 1) What are numbers? 2) Why Hume’s understanding of mathematics is incorrect. 3) Why Plato’s understanding of mathematics is probably incorrect. 4) Why the common sense view of mathematics is probably incorrect. See second video for more.

Dynamical freezing can protect quantum information for near-cosmic timescales

Preserving quantum information is key to developing useful quantum computing systems. But interacting quantum systems are chaotic and follow laws of thermodynamics, eventually leading to information loss. Physicists have long known of a strange exception, called dynamical freezing, when quantum systems shaken at precisely tuned frequencies evade these laws. But how long can this phenomenon postpone thermodynamics?

Not forever, but for an astonishingly long time, Cornell physicists have determined, giving the first quantitative answer. Using a new mathematical framework, they demonstrate that the frozen state can be stabilized long enough to be a useful strategy for preserving information in quantum systems. This could be a promising route for maintaining coherence in quantum computers as the number of qubits scales up into the millions.

“It’s like asking, how do you evade the laws of physics from eventually taking over?” said Debanjan Chowdhury, associate professor of physics in the College of Arts and Sciences. “Imagine that you had a hot cup of coffee that even without a heater, stayed hot. Or a block of ice placed on a heater that never melts. Is that even possible? This has been one of the big open problems in the field of quantum many-body systems.”

From theory to safety: New model predicts how combustion scenarios unfold

Researchers from Skoltech have published a paper in the journal Physica D: Nonlinear Phenomena presenting an analysis of steady propagating combustion waves—from slow flames to supersonic detonation waves. The study relies on the authors’ mathematical model, which captures the key physical properties of complex combustion processes and yields accurate analytical and numerical solutions. The findings are important for understanding the physical mechanisms behind the transition from deflagration to detonation, as well as for developing safer engines, fuel combustion systems, and protection against unwanted explosions in industrial settings.

The scientists identified several main types of combustion waves. The most powerful is strong detonation, a supersonic shock wave that sharply compresses and heats the mixture, triggering a chemical reaction. This type of wave is highly stable. In weak detonations and weak deflagration waves, there is no abrupt shock front.

The chemical reaction only begins if the mixture has been preheated to a temperature where it can ignite. These regimes occur rarely, under specific conditions, and can easily break down or transition into another wave type.

Why you can’t tie knots in four dimensions

We all know we live in three-dimensional space. But what does it mean when people talk about four dimensions? Is it just a bigger kind of space? Is it “space-time,” the popular idea which emerged from Einstein’s theory of relativity?

If you have wondered what four dimensions really look like, you may have come across drawings of a “four-dimensional cube.” But our brains are wired to interpret drawings on flat paper as two- or at most three-dimensional, not four-dimensional.

The almost insurmountable difficulty of visualizing the fourth dimension has inspired mathematicians, physicists, writers and even some artists for centuries. But even if we can’t quite imagine it, we can understand it.

Biology, not physics, holds the key to reality

Three centuries after Newton described the universe through fixed laws and deterministic equations, science may be entering an entirely new phase.

According to biochemist and complex systems theorist Stuart Kauffman and computer scientist Andrea Roli, the biosphere is not a predictable, clockwork system. Instead, it is a self-organising, ever-evolving web of life that cannot be fully captured by mathematical models.

Organisms reshape their environments in ways that are fundamentally unpredictable. These processes, Kauffman and Roli argue, take place in what they call a “Domain of No Laws.”

This challenges the very foundation of scientific thought. Reality, they suggest, may not be governed by universal laws at all—and it is biology, not physics, that could hold the answers.


The Man Who Stole Infinity

In 1874, German mathematician Georg Cantor published a groundbreaking paper showing that there are different sizes of infinity — a result that fundamentally changed mathematics by treating infinity as a concrete mathematical concept rather than a mere philosophical idea.

That paper became the foundation of set theory, a central pillar of modern mathematics.

Newly discovered letters from Cantor’s correspondence with fellow mathematician Richard Dedekind, believed lost until recently, suggest that a crucial part of the proof Cantor published came directly from Dedekind’s work.

Historian and journalist Demian Goos uncovered these letters while researching Cantor’s life. He found a key letter from November 30, 1873 that shows Dedekind’s proof of the countability of algebraic numbers — the same result Cantor would publish later under his own name.
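The countability result mentioned above rests on a listing argument that can be sketched in a few lines. The sketch below is an illustration under stated assumptions, not a reconstruction of the letter: it uses the "height" usually attributed to the 1874 paper, taken here to be (degree − 1) plus the sum of the absolute values of the coefficients, and the function names `polynomials_of_height` and `enumerate_polynomials` are hypothetical. Only finitely many integer polynomials share a given height, and each polynomial has finitely many roots, so listing polynomials height by height places every algebraic number at some finite position.

```python
from itertools import product
from typing import Iterator, Tuple

Coeffs = Tuple[int, ...]  # (a_0, a_1, ..., a_n) for a_0 + a_1*x + ... + a_n*x^n


def polynomials_of_height(h: int) -> Iterator[Coeffs]:
    """Yield integer polynomials of degree >= 1 whose height equals h.

    Assumed height: (degree - 1) + sum of |a_i|. The leading coefficient
    is kept positive, since p and -p have the same roots.
    """
    for degree in range(1, h + 1):
        budget = h - (degree - 1)  # required value of sum |a_i|
        for coeffs in product(range(-budget, budget + 1), repeat=degree + 1):
            if coeffs[-1] > 0 and sum(abs(c) for c in coeffs) == budget:
                yield coeffs


def enumerate_polynomials(max_height: int) -> Iterator[Coeffs]:
    """List polynomials height by height; each height contributes finitely many."""
    for h in range(1, max_height + 1):
        yield from polynomials_of_height(h)


if __name__ == "__main__":
    for h in range(1, 5):
        print(h, len(list(polynomials_of_height(h))))
    # x^2 - 2, whose roots are +/- sqrt(2), has degree 2 and coefficient sum 3.
    print((-2, 0, 1) in set(polynomials_of_height(4)))
```

The brute-force search is deliberately simple; it is meant to show that each height yields a finite list, not to be efficient.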

Earlier histories had portrayed Cantor as a lone genius, but the new evidence reveals he relied heavily on Dedekind’s ideas and published them without proper credit, effectively erasing Dedekind’s role in the discovery.

Cantor’s strategy was partly tactical: because influential mathematician Leopold Kronecker vehemently opposed actual infinity, Cantor framed the paper under a less controversial title (about algebraic numbers), using Dedekind’s simplified methods to “sneak” in the revolutionary idea of comparing infinities.

The result was not just a new theorem but a new way of thinking about infinity, setting the stage for set theory and reshaping mathematics — even though the true story of its origins was more collaborative and ethically complicated than commonly told.

Abstract: Emily Gutierrez-Morton, Yanchang Wang, and colleagues (Florida State University) show that in yeast, the polo-like kinase Cdc5 promotes phosphorylation of the SUMO protease Ulp2, reducing its affinity for SUMO chains and thereby facilitating polySUMOylation.


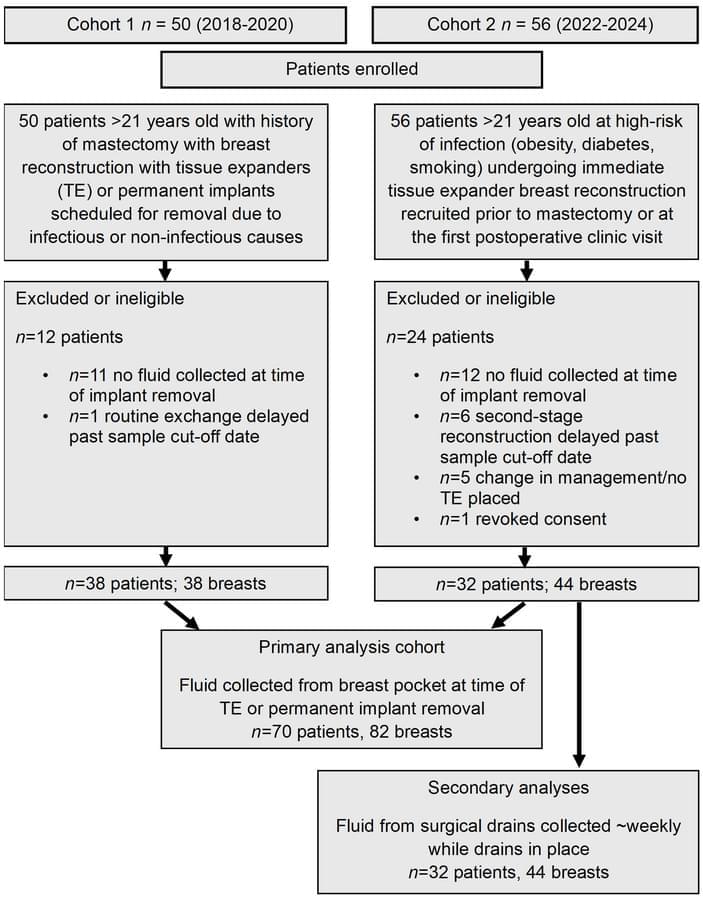

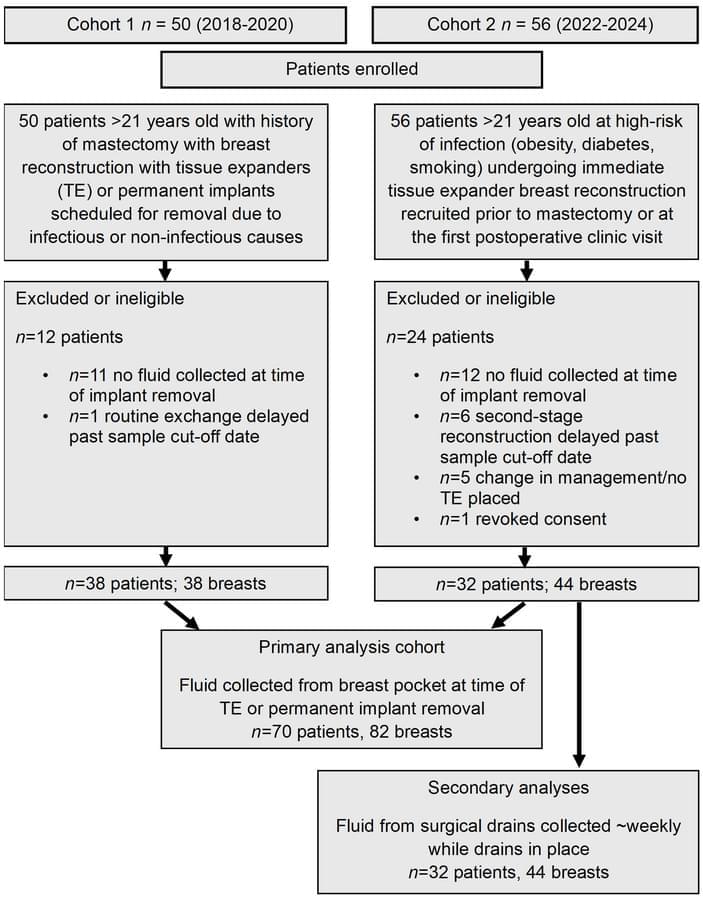

¹Infectious Diseases Division, Department of Medicine, and ²Division of Plastic and Reconstructive Surgery, Washington University in St. Louis School of Medicine, St. Louis, Missouri, USA.

³Department of Mathematics, Dartmouth College, Hanover, New Hampshire, USA.

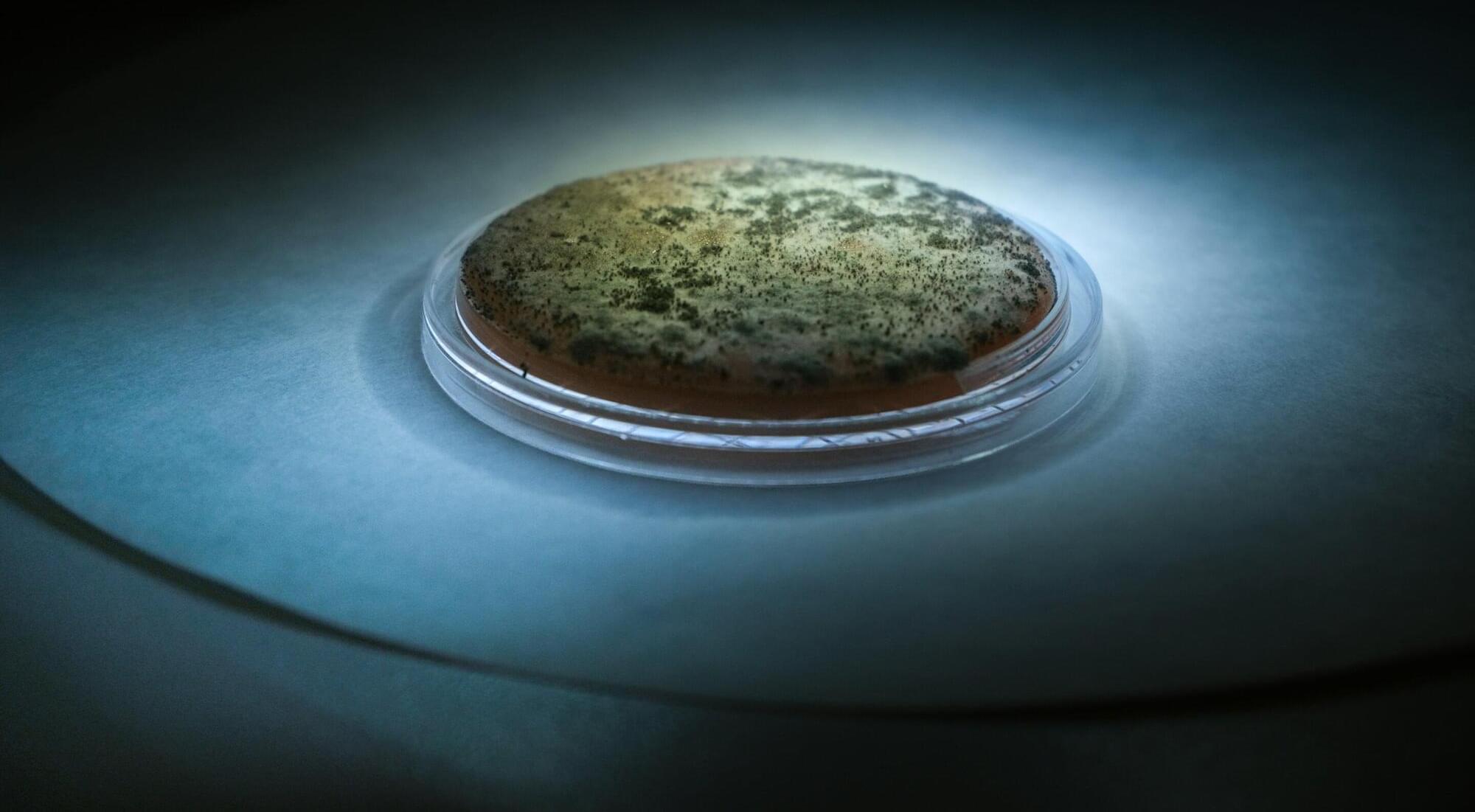

Abstract: Can we identify infections earlier in patients undergoing breast implant reconstruction?

Jeffrey P. Henderson and colleagues use metabolomic profiling of postimplantation drain fluid, revealing an infection-associated molecular signature that, in longitudinal samples, substantially pre-dated clinical infection diagnosis.


Why do microbes team up? A new model explains nutrient sharing in fluctuating environments

Depending on others for something you need may feel like a risky proposition—and perhaps a human one. It is actually a survival strategy found in the microbial world, and far more frequently than one might expect. Discovering why is key to understanding how microbes form stable communities across medical, industrial, and ecological settings.

A new study by bioengineering professor Sergei Maslov (CAIM co-leader), computational scientist Ashish George, and biology professor Tong Wang explores why interdependence can be such a winning move for microbial communities. Their work, published in Cell Systems, demonstrated that a mathematical model of how bacteria produce and share resources accurately predicted the outcome of experiments with living E. coli strains.

The researchers’ collaboration began during their time as colleagues at the Carl R. Woese Institute for Genomic Biology at the University of Illinois Urbana-Champaign. George continued the collaboration in his position at the Broad Institute; Wang, in his appointment at Purdue University. Maslov, who led the study, remains at Illinois and is an affiliate member of the National Institute for Theory and Mathematics in Biology.