Natural ingredients have long been used to enhance human health.

Read “” by James P. Kowall on Medium.

Watch this video in which Roger Penrose argues that consciousness is fundamental: it came first and created the universe through a process of observation that turns potentiality into actuality:

For 400 years, we’ve believed that mindless matter eventually evolved into conscious minds. But what if we have the causation completely backwards? What if consciousness is the precondition for the universe?

In this video, we dive deep into the quantum paradox, wave function collapse, and the radical scientific theory that consciousness isn’t an accident of evolution — it’s the fundamental building block of reality itself. From the Copenhagen interpretation to the mysteries of the biological brain, we explore how quantum mechanics suggests the physical world is simply what appears when consciousness observes itself.

If humankind is to explore deep space, one small passenger should not be left behind: microbes. In fact, it would be impossible to leave them behind, since they live on and in our bodies, surfaces and food. Learning how they react to space conditions is critical, but they could also be invaluable partners in our efforts to explore space.

Microorganisms such as bacteria and fungi can harvest crucial minerals from rocks and could provide a sustainable alternative to transporting much-needed resources from Earth.

Researchers from Cornell and the University of Edinburgh collaborated to study how these microbes extract platinum group elements from a meteorite in microgravity, in an experiment conducted aboard the International Space Station. They found that "biomining" fungi are particularly adept at extracting the valuable metal palladium, whereas without the fungus, nonbiological leaching was less effective in microgravity.

In physics, "glass" has a broader meaning than the transparent material we associate with windows. It refers to any system that looks solid but is not in true equilibrium and continues to change extremely slowly over time. Examples include window glass, plastics, metallic glasses, spin glasses (disordered magnetic systems), and even some biological and computational systems.

When a liquid is cooled very quickly, a process called quenching, it has no time to organize into a crystal and instead becomes stuck in a disordered state far from equilibrium. Its properties, such as stiffness and structure, then slowly evolve through a process called "aging."

Now, a research team from the Institute of Theoretical Physics of the Chinese Academy of Sciences has proposed a new theoretical framework for understanding the universal aging behavior of glassy materials. The study is published in the journal Science Advances.

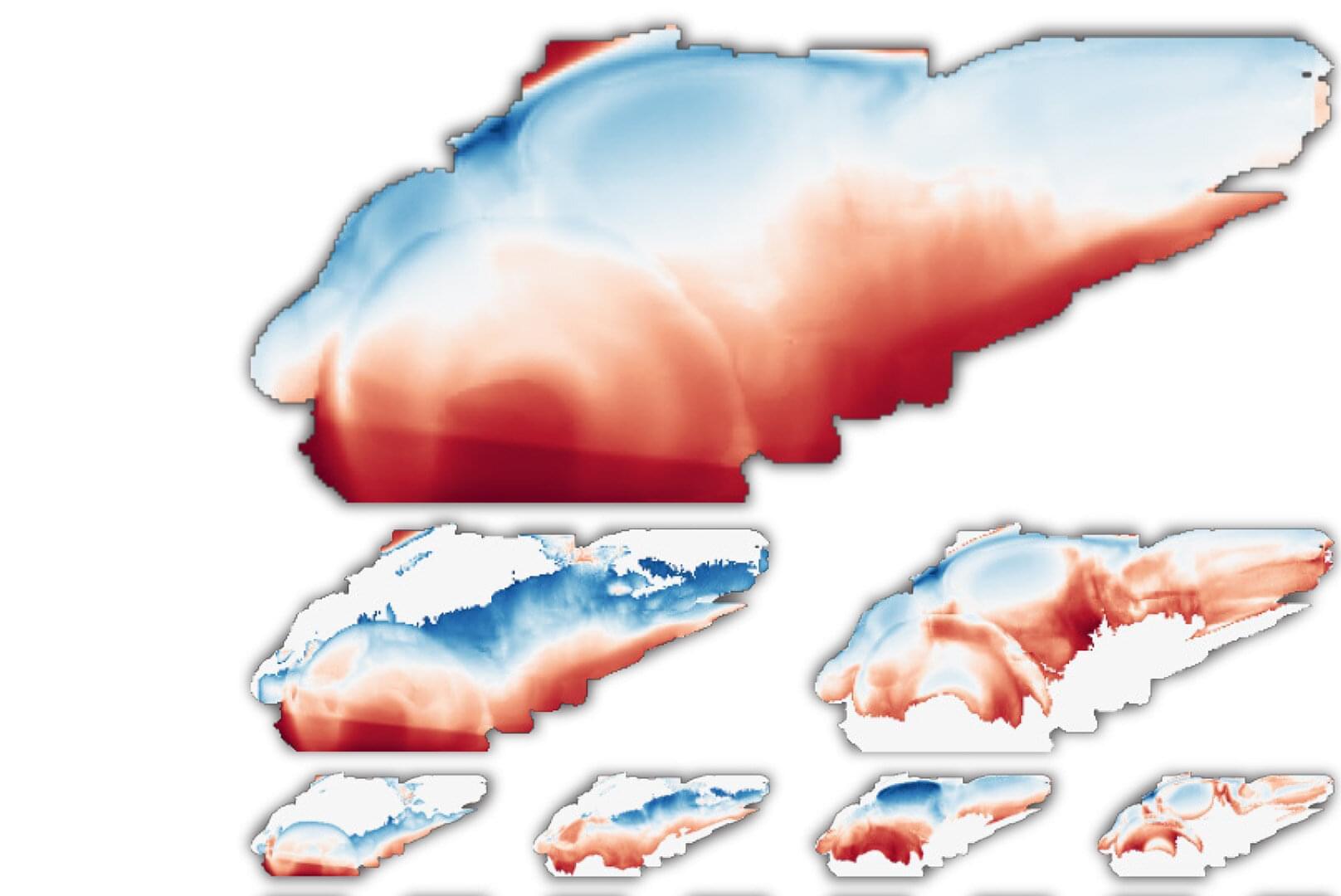

Your brain begins as a single cell. When all is said and done, it will house an incredibly complex and powerful network of some 170 billion cells. How does it organize itself along the way? Cold Spring Harbor Laboratory neuroscientists have come up with a surprisingly simple answer that could have far-reaching implications for biology and artificial intelligence.

Stan Kerstjens, a postdoc in Professor Anthony Zador’s lab, frames the question in terms of positional information. “The only thing a cell ‘sees’ is itself and its neighbors,” he explains. “But its fate depends on where it sits. A cell in the wrong place becomes the wrong thing, and the brain doesn’t develop right. So, every cell must solve two questions: Where am I? And who do I need to become?”

In a study published in Neuron, Kerstjens, Zador, and colleagues at Harvard University and ETH Zürich put forward a new theory for how the brain organizes itself during development.

In this video, we look into one of the emerging areas of computing: wetware, and more specifically neuromorphic computing that uses actual living neurons on chips.

We talk to Cortical Labs, the company that developed the Pong-playing "DishBrain," and Professor Thomas Hartung to understand the benefits of this technology.


When a human mind can be emulated — memories, habits, and the weather of thought running on engineered hardware — “uploading” stops being an ending and becomes a beginning. Substrate-independent minds can be backed up, restored, paused without time passing, and deployed into new bodies: telepresence robots, swarms, or chassis built for heat and radiation. Distance turns into bandwidth as consciousness moves as data, bound only by light. Under the spectacle is a harder, technical question: what must be captured, at what scale, for an emulation to be someone — and what rights and power follow once persons are portable infrastructure?

Mind uploading has usually been told as a one-way escape hatch: a last-minute transfer from a failing body into a machine, the technological equivalent of outrunning a deadline. That framing makes the idea feel like a hospice fantasy — dramatic, personal, terminal. But it leaves out the second verb that changes everything. If a mind can be reproduced as a running process, it isn’t just uploaded once; it can be instantiated again, moved, paused, restored, and redeployed. Uploading is capture. Downloading is what makes a mind into something mobile.

The phrase “substrate-independent mind” tries to name that mobility without the melodrama. A substrate is the medium a mind runs on: biological tissue, silicon, specialized hardware, something not yet invented. Independence doesn’t mean the mind floats free of physics; it means the same meaningful mental functions might be implementable on different platforms, like a program that can run on different computers. The promise is not that neurons are irrelevant, but that the mind might be the pattern of information processing the neurons carry out — the thing they do, not the stuff they’re made of.

Three centuries after Newton described the universe through fixed laws and deterministic equations, science may be entering an entirely new phase.

According to biochemist and complex systems theorist Stuart Kauffman and computer scientist Andrea Roli, the biosphere is not a predictable, clockwork system. Instead, it is a self-organising, ever-evolving web of life that cannot be fully captured by mathematical models.

Organisms reshape their environments in ways that are fundamentally unpredictable. These processes, Kauffman and Roli argue, take place in what they call a “Domain of No Laws.”

This challenges the very foundation of scientific thought. Reality, they suggest, may not be governed by universal laws at all—and it is biology, not physics, that could hold the answers.
