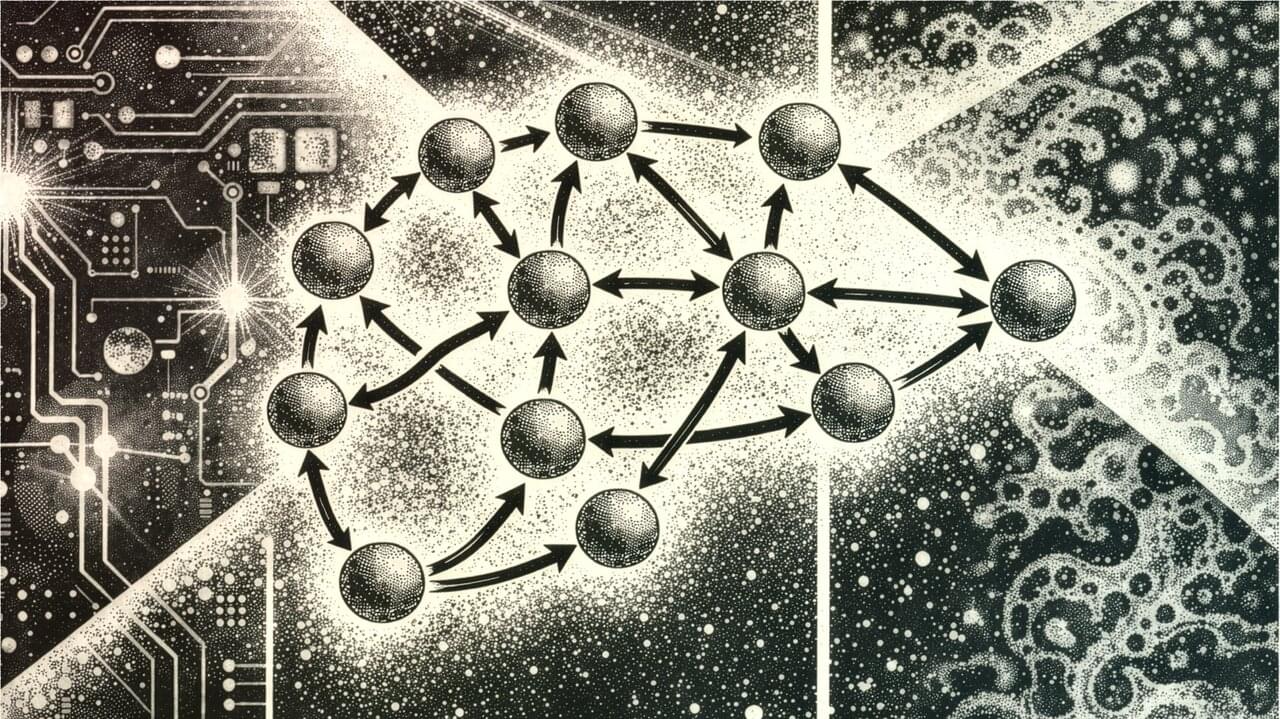

Scientists may have new answers to why pop-ups and notifications grab our attention. It turns out that our attention operates on a cycle, shifting seven to 10 times per second. This rhythm may be crucial for survival, because it keeps us from becoming overly focused on any one thing in our environment. It could help us notice a car backing up in a parking lot while we search for where we parked, or duck under a low-hanging tree branch while watching a child ride a bike.

However, these recurring windows for shifting attention could also make us more susceptible to distraction, especially today. In a world filled with screens, digital alerts, and other visual stimuli, these frequent and innate windows may make it easier for us to be pulled away from a task.

“For our ancestors who had to continue to monitor the environment for predators while foraging for food, this was a beneficial trait,” said Ian Fiebelkorn, Ph.D., assistant professor of Neuroscience at the Del Monte Institute for Neuroscience at the University of Rochester and senior author of a study published in the journal PLOS Biology. “But in our modern environment, with laptops open in front of us and a smartphone nearby, rhythmically occurring windows for beneficial attentional shifts might also work against us. That is, rhythmically occurring windows for attentional shifts are also associated with increased susceptibility to distracting information.”