A specialised quantum computer has achieved quantum supremacy, accomplishing in under 4 minutes what would take the biggest supercomputer 600 million years.

Since Xi put out the call to build up the new area, China’s tech giants have piled in. Alibaba Group Holding, Tencent Holdings, Baidu, Zhongguancun Science Park and Tsinghua University have all established projects in Xiongan. The projects include the use of sensors, 5G networks and facilities for supercomputing and big data in the pursuit of building up the smart city. Alibaba is the parent company of the Post.

JD Digits, the e-commerce giant’s big data arm, is building a smart city operating system that uses artificial intelligence for urban management.

The biggest computer chip in the world is so fast and powerful it can predict future actions “faster than the laws of physics produce the same result.”

That’s according to a post by Cerebras Systems, a startup company that made the claim at the online SC20 supercomputing conference this week.

Working with the U.S. Department of Energy’s National Energy Technology Laboratory, Cerebras designed what it calls “the world’s most powerful AI compute system.” It created a massive 8.5-inch-square chip, the Cerebras CS-1, housed in a refrigerator-sized computer in an effort to improve on deep-learning training models.

Scientists from NASA’s Goddard Space Flight Center in Greenbelt, Maryland, and international collaborators demonstrated a new method for mapping the location and size of trees growing outside of forests, discovering billions of trees in arid and semi-arid regions and laying the groundwork for more accurate global measurement of carbon storage on land.

Using powerful supercomputers and machine learning algorithms, the team mapped the crown diameter – the width of a tree when viewed from above – of more than 1.8 billion trees across an area of more than 500,000 square miles, or 1,300,000 square kilometers. The team mapped how tree crown diameter, coverage, and density varied depending on rainfall and land use.

Special thanks to Lieuwe Vinkhuyzen for checking that this very simplified view on building neural nets did not stray too far from reality.

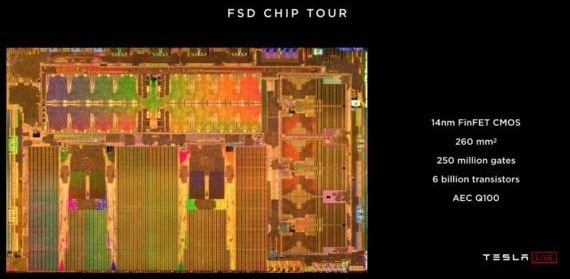

The inhabitants of the Tesla fanboy echo chamber have heard regularly about the Tesla Dojo supercomputer, with almost nobody knowing what it was. It was first mentioned, as far as I know, at Tesla Autonomy Day on April 22, 2019. More recently a few comments from George Hotz, Tesmanian, and Elon Musk himself have shed some light on this project.

Aurora 21 will help the US keep pace with the other nations that own the fastest supercomputers. Scientists plan on using it to map the connectome of the human brain.

Harun Šiljak, Trinity College Dublin

Google reported a remarkable breakthrough towards the end of 2019. The company claimed to have achieved something called quantum supremacy, using a new type of “quantum” computer to perform a benchmark test in 200 seconds. This was in stark contrast to the 10,000 years that would supposedly have been needed by a state-of-the-art conventional supercomputer to complete the same test.

Despite IBM’s claim that its supercomputer, with a little optimisation, could solve the task in a matter of days, Google’s announcement made it clear that we are entering a new era of incredible computational power.

Another argument for government to bring AI into its quantum computing program is the fact that the United States is a world leader in the development of computer intelligence. Congress is close to passing the AI in Government Act, which would encourage all federal agencies to identify areas where artificial intelligences could be deployed. And government partners like Google are making some amazing strides in AI, even creating a computer intelligence that can easily pass a Turing test over the phone by seeming like a normal human, no matter who it’s talking with. It would probably be relatively easy for Google to merge some of its AI development with its quantum efforts.

The other aspect that makes merging quantum computing with AI so interesting is that the AI could probably help to reduce some of the so-called noise of the quantum results. I’ve always said that the way forward for quantum computing right now is by pairing a quantum machine with a traditional supercomputer. The quantum computer could return results like it always does, with the correct outcome muddled in with a lot of wrong answers, and then humans would program a traditional supercomputer to help eliminate the erroneous results. The problem with that approach is that it’s fairly labor-intensive, and you still have the bottleneck of having to run results through a normal computing infrastructure. It would be a lot faster than giving the entire problem to the supercomputer because you are only fact-checking a limited number of results pared down by the quantum machine, but it would still have to work on each of them one at a time.
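As a rough illustration of that pairing, the sketch below uses a toy problem: finding a divisor of a number. The function `noisy_candidates` is a hypothetical stand-in for a quantum run (it is ordinary random sampling, not a quantum algorithm), and `classical_filter` plays the role of the traditional machine that checks each candidate one at a time. All names, the error rate, and the choice of problem are assumptions made for illustration only.

```python
import random


def noisy_candidates(n, true_factor, shots=20, error_rate=0.7, seed=0):
    """Hypothetical stand-in for a quantum run.

    Returns `shots` candidate answers in which the correct factor is
    mixed in with random wrong guesses, mimicking noisy output.
    """
    rng = random.Random(seed)
    results = []
    for _ in range(shots):
        if rng.random() < error_rate:
            results.append(rng.randrange(2, n))  # a noisy, probably wrong answer
        else:
            results.append(true_factor)  # the correct outcome
    return results


def classical_filter(n, candidates):
    """Classical verification step: test each distinct candidate in turn.

    Checking a candidate (one modulo operation) is cheap compared with
    searching for a factor from scratch, which is the point of the pairing.
    """
    verified = set()
    for c in set(candidates):
        if 1 < c < n and n % c == 0:
            verified.add(c)
    return sorted(verified)


if __name__ == "__main__":
    n = 91  # 7 * 13, small enough to check by hand
    candidates = noisy_candidates(n, true_factor=7)
    print("raw candidates:", candidates)
    print("verified:", classical_filter(n, candidates))
```

The filter still walks through the candidates one by one, which is the bottleneck described above; it only has far fewer values to check than a brute-force search would.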

But imagine if we could simply train an AI to look at the data coming from the quantum machine and figure out what makes sense and what is probably wrong, without human intervention. If that AI were driven by a quantum computer too, the results could be returned without any hardware-based delays. And if we also employed machine learning, then the AI could get better over time. The more problems fed to it, the more accurate it would get.