If you’re interested in mind uploading, I have an excellent article to recommend. It is a wide-ranging review of neuromorphic computing and includes sections on memristors. Here is a key excerpt:

“…Perhaps the most exciting emerging AI hardware architectures are the analog crossbar approaches since they achieve parallelism, in-memory computing, and analog computing, as described previously. Among most of the AI hardware chips produced in roughly the last 15 years, an analog memristor crossbar-based chip is yet to hit the market, which we believe will be the next wave of technology to follow. Of course, incorporating all the primitives of neuromorphic computing will likely require hardware solutions even beyond analog memristor crossbars…”
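
For readers wondering how a crossbar can combine parallelism, in-memory computing, and analog computing, here is a minimal sketch of the idealized textbook picture. It is my own illustration, not code from the paper. Each cell stores a weight as a conductance, voltages are applied to the rows, and every column wire sums its cell currents at once, so the array performs a matrix-vector multiply in place. The function name `crossbar_currents` and the example values are hypothetical, and real devices add wire resistance, noise, and nonlinearity that this ignores.

```python
# Idealized memristor crossbar (illustrative assumption, not the paper's model).
# Cell (i, j) has conductance G[i][j] in siemens. Applying voltage V[i] to row i
# gives a cell current V[i] * G[i][j] (Ohm's law); each column wire sums its
# cell currents (Kirchhoff's current law), so I[j] = sum_i V[i] * G[i][j].

def crossbar_currents(conductances, row_voltages):
    """Return the current collected on each column of an ideal crossbar."""
    n_rows = len(conductances)
    if n_rows != len(row_voltages):
        raise ValueError("need exactly one voltage per crossbar row")
    n_cols = len(conductances[0])
    return [
        sum(row_voltages[i] * conductances[i][j] for i in range(n_rows))
        for j in range(n_cols)
    ]

# Hypothetical 2x2 array: conductances in siemens, voltages in volts.
G = [[1.0e-3, 2.0e-3],
     [3.0e-3, 4.0e-3]]
V = [0.5, 1.0]

I = crossbar_currents(G, V)
print(I)  # column currents in amperes, about 3.5 mA and 5 mA
```

In hardware the loop does not exist: all columns settle simultaneously, and the weights never leave the array, which is where the parallelism and in-memory computing in the excerpt come from.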

Here’s a web link to the research paper:

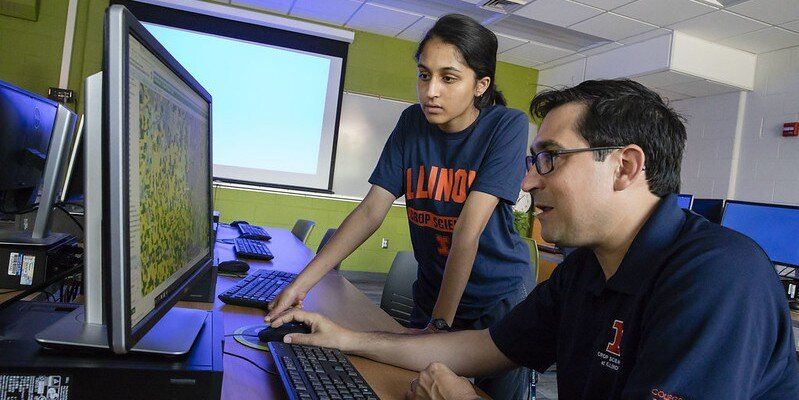

Computers have undergone tremendous improvements in performance over the last 60 years, but those improvements have significantly slowed down over the last decade, owing to fundamental limits in the underlying computing primitives. However, the generation of data and demand for computing are increasing exponentially with time. Thus, there is a critical need to invent new computing primitives, both hardware and algorithms, to keep up with the computing demands. The brain is a natural computer that outperforms our best computers in solving certain problems, such as instantly identifying faces or understanding natural language. This realization has led to a flurry of research into neuromorphic or brain-inspired computing that has shown promise for enhanced computing capabilities. This review points to the important primitives of a brain-inspired computer that could drive another decade-long wave of computer engineering.