Science today stands at a crossroads: will its progress be driven by human minds or by the machines that we’ve created?

The AI nanny is here! In a new feat for science, robots and AI can now be paired to optimise the creation of human life. In a Matrix-esque reality, algorithms and artificial wombs can now help babies develop.

As reported by the South China Morning Post, the new technology was developed by Chinese scientists in Suzhou. However, there are concerns about the ethics of artificially growing human babies.

That is not to say that a quantum advantage has been proven yet. The quantum algorithm developed by IBM performed comparably to classical methods on the limited quantum processors that exist today, but those systems are still in their very early stages.

With only a small number of qubits, today’s quantum computers are not yet capable of carrying out useful computations. They also remain crippled by the fragility of qubits, which are highly sensitive to environmental changes and still prone to errors.

Rather, IBM and CERN are banking on future improvements in quantum hardware to demonstrate tangibly, and not only theoretically, that quantum algorithms have an advantage.

In part because the technologies have not yet been widely adopted, previous analyses have had to rely either on case studies or on subjective assessments by experts to determine which occupations might be susceptible to a takeover by AI algorithms. What’s more, most research has concentrated on an undifferentiated array of “automation” technologies, including robotics, software, and AI, all at once. The result has been plenty of discussion but little clarity about AI, with prognostications that range from the utopian to the apocalyptic.

Given that, the analysis presented here demonstrates a new way to identify the kinds of tasks and occupations likely to be affected by AI’s machine learning capabilities, as distinct from the impacts of robotics and software automation on the economy. Employing a novel technique developed by Stanford University Ph.D. candidate Michael Webb, the report establishes job exposure levels by analyzing the overlap between the text of AI-related patents and job descriptions. In this way, the paper homes in on the impacts of AI specifically, and does so by studying empirical statistical associations rather than relying on expert forecasting.
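The patent-overlap idea can be sketched in a few lines. This is a simplified toy under stated assumptions, not Webb’s published procedure: the verb-object pairs are assumed to have been extracted already, and the patent pairs, occupations, tasks, and importance weights below are all invented placeholders. Each occupation’s exposure is taken here as the importance-weighted patent frequency of its task pairs, then converted to a percentile rank.

```python
from collections import Counter

# Hypothetical verb-object pairs extracted from AI patent titles (placeholder data).
AI_PATENT_PAIRS = [
    ("recognize", "image"), ("recognize", "image"), ("predict", "demand"),
    ("classify", "document"), ("detect", "fraud"), ("translate", "text"),
]

# Hypothetical occupations: each task is (verb-object pair, importance weight).
OCCUPATIONS = {
    "radiologist": [(("recognize", "image"), 0.6), (("consult", "patient"), 0.4)],
    "paralegal": [(("classify", "document"), 0.5), (("file", "motion"), 0.5)],
    "plumber": [(("repair", "pipe"), 0.7), (("install", "fixture"), 0.3)],
}

def pair_frequencies(pairs):
    """Relative frequency of each verb-object pair in the patent corpus."""
    counts = Counter(pairs)
    total = sum(counts.values())
    return {pair: n / total for pair, n in counts.items()}

def exposure_scores(occupations, patent_pairs):
    """Importance-weighted average patent frequency over each occupation's tasks."""
    freq = pair_frequencies(patent_pairs)
    scores = {}
    for job, tasks in occupations.items():
        weight_sum = sum(w for _, w in tasks)
        scores[job] = sum(freq.get(pair, 0.0) * w for pair, w in tasks) / weight_sum
    return scores

def percentile_ranks(scores):
    """Rank occupations from 0 (least exposed) to 100 (most exposed)."""
    ordered = sorted(scores, key=scores.get)
    n = len(ordered)
    return {job: (100 * i / (n - 1) if n > 1 else 100.0) for i, job in enumerate(ordered)}

if __name__ == "__main__":
    raw = exposure_scores(OCCUPATIONS, AI_PATENT_PAIRS)
    for job, pct in sorted(percentile_ranks(raw).items(), key=lambda kv: -kv[1]):
        print(f"{job:12s} raw={raw[job]:.3f} percentile={pct:.0f}")
```

A real pipeline would need a parser to extract the pairs and far larger corpora; the weighting and ranking choices here are illustrative only.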

Artificial intelligence will soon become one of the most important, and likely most dangerous, aspects of the metaverse. I’m talking about agenda-driven artificial agents that look and act like any other users but are virtual simulations that will engage us in “conversational manipulation,” targeting us on behalf of paying advertisers.

This is especially dangerous when the AI algorithms have access to data about our personal interests, beliefs, habits and temperament, while also reading our facial expressions and vocal inflections. Such agents will be able to pitch us more skillfully than any salesman. And it won’t just be to sell us products and services – they could easily push political propaganda and targeted misinformation on behalf of the highest bidder.

And because these AI agents will look and sound like anyone else in the metaverse, our natural skepticism toward advertising will not protect us. For these reasons, we need to regulate some aspects of the coming metaverse, especially AI-driven agents. If we don’t, promotional AI-avatars will fill our lives, sensing our emotions in real time and quickly adjusting their tactics for a level of micro-targeting never before experienced.

Learnings For Regenerative Morphogenesis, Astro-Biology And The Evolution Of Minds — Dr. Michael Levin, Tufts University, and Dr. Josh Bongard, University of Vermont.

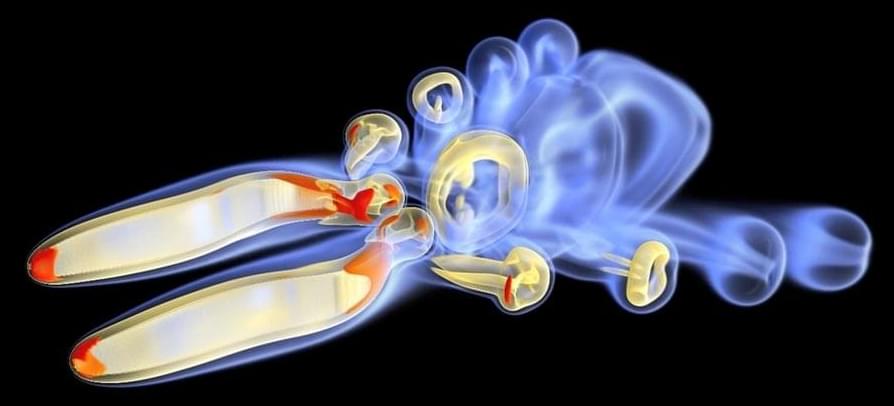

Xenobots are living micro-robots built from cells and designed and programmed by a computer (an evolutionary algorithm). To date, they have been demonstrated in the laboratory to move towards a target, pick up a payload, heal themselves after being cut, and reproduce via a process called kinematic self-replication.
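“Designed by an evolutionary algorithm” refers to a generic loop: generate candidate designs, score them, keep the best, and mutate them. The sketch below shows that loop on a toy problem. The bit-string “body plan” and its fitness function are hypothetical stand-ins; the actual Xenobot pipeline scores designs in a physics simulation, which is not reproduced here.

```python
import random

GRID = 5  # hypothetical 5x5 grid of voxels: 1 = cell present, 0 = empty

# Hypothetical target body plan, standing in for "a design that performs well in simulation".
TARGET = [
    0, 1, 1, 1, 0,
    1, 1, 1, 1, 1,
    1, 1, 0, 1, 1,
    1, 1, 1, 1, 1,
    1, 0, 0, 0, 1,
]

def fitness(genome):
    """Number of cells agreeing with the target; a stand-in for a simulated score."""
    return sum(g == t for g, t in zip(genome, TARGET))

def mutate(genome, rate, rng):
    """Flip each cell independently with probability `rate`."""
    return [1 - g if rng.random() < rate else g for g in genome]

def evolve(pop_size=20, generations=200, rate=0.04, seed=0):
    """Truncation selection with elitism: the fitter half survives and spawns mutants."""
    rng = random.Random(seed)
    population = [[rng.randint(0, 1) for _ in range(GRID * GRID)] for _ in range(pop_size)]
    for gen in range(generations):
        population.sort(key=fitness, reverse=True)
        parents = population[: pop_size // 2]
        if fitness(parents[0]) == len(TARGET):
            return parents[0], gen  # perfect design found early
        children = [mutate(p, rate, rng) for p in parents]
        population = parents + children
    population.sort(key=fitness, reverse=True)
    return population[0], generations

if __name__ == "__main__":
    best, gen = evolve()
    print(f"best fitness {fitness(best)}/{len(TARGET)} after {gen} generations")
    for row in range(GRID):
        print("".join("#" if c else "." for c in best[row * GRID:(row + 1) * GRID]))
```

Because the parents are carried over unchanged, the best score never decreases from one generation to the next.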

Beyond the future applications mentioned in the press, such as cleaning up radioactive waste, collecting micro-plastics in the oceans, and even helping to terraform planets, Xenobot research offers a completely new tool kit for understanding how complex tissues, organs, and body segments are cooperatively formed during morphogenesis and how minds develop. It even offers glimpses of the novel life forms we may one day encounter in the cosmos.

This cutting-edge Xenobot research has been conducted by an interdisciplinary team of scientists from the University of Vermont, Tufts University, and Harvard, and our show is honored to be joined by two members of this team today.

Dr. Josh Bongard (https://www.uvm.edu/cems/cs/profiles/josh_bongard) is Professor, Morphology, Evolution & Cognition Laboratory, Department of Computer Science, College of Engineering and Mathematical Sciences, University of Vermont.

Though Meta didn’t give numbers on the current top speed of its AI Research SuperCluster (RSC), in terms of raw processing power the machine appears comparable to the Perlmutter supercomputer, ranked the fifth fastest in the world. At the moment, RSC runs on 6,800 NVIDIA A100 graphics processing units (GPUs), a kind of specialized chip once limited to gaming but now used far more widely, especially in AI. Already, the machine is running computer vision workflows 20 times faster and training large language models (like GPT-3) 3 times faster. The more quickly a company can train models, the more models it can complete and further improve in any given year.
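To make the last point concrete: a speedup factor divides the wall-clock time of each training run, so it multiplies the number of runs that fit into a year. The 30-day baseline below is a hypothetical figure; only the 3x and 20x factors come from the reported numbers, and they are relative to whatever baseline Meta measured against.

```python
DAYS_PER_YEAR = 365

def runs_per_year(baseline_days, speedup):
    """Complete back-to-back training runs that fit in one year at a given speedup."""
    run_days = baseline_days / speedup  # a speedup divides the wall-clock time per run
    return int(DAYS_PER_YEAR // run_days)

if __name__ == "__main__":
    baseline = 30  # hypothetical: one training run takes 30 days on the older hardware
    for label, speedup in [("baseline", 1), ("LLM on RSC", 3), ("vision on RSC", 20)]:
        print(f"{label:14s} {runs_per_year(baseline, speedup):4d} runs/year")
```

Under this assumption, 12 runs a year become 36 at 3x and 243 at 20x, which is the sense in which faster training means more models completed and refined.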

In addition to pure speed, RSC will give Meta the ability to train algorithms on its massive hoard of user data. In a blog post, the company said that it previously trained AI on public, open-source datasets, but RSC will use real-world, user-generated data from Meta’s production servers. This detail may make more than a few people blanch, given the numerous privacy and security controversies Meta has faced in recent years. In the post, the company took pains to note that the data will be carefully anonymized and encrypted end-to-end. And, it said, RSC won’t have any direct connection to the larger internet.

To accommodate Meta’s enormous training data sets and further increase training speed, the installation will grow later this year to include 16,000 GPUs and an exabyte of storage, equivalent to 36,000 years of high-quality video. Once complete, Meta says, RSC will serve training data at 16 terabytes per second and operate at a top speed of 5 exaflops.
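The storage and bandwidth figures can be sanity-checked with a little arithmetic. Assuming decimal units (an exabyte as 10^18 bytes, a terabyte as 10^12 bytes), 36,000 years of video implies an average bitrate of about 7 Mbit/s, and reading the full exabyte once at 16 TB/s would take roughly 17 hours. These are back-of-the-envelope conversions, not figures Meta published.

```python
EXABYTE = 10**18            # bytes (decimal definition assumed)
TERABYTE = 10**12           # bytes (decimal definition assumed)
SECONDS_PER_YEAR = 365.25 * 24 * 3600

def implied_video_bitrate_mbps(total_bytes, years):
    """Average bitrate in Mbit/s if `total_bytes` holds `years` of continuous video."""
    return total_bytes * 8 / (years * SECONDS_PER_YEAR) / 1e6

def full_read_hours(total_bytes, bytes_per_second):
    """Hours needed to stream `total_bytes` once at a sustained rate."""
    return total_bytes / bytes_per_second / 3600

if __name__ == "__main__":
    print(f"implied bitrate: {implied_video_bitrate_mbps(EXABYTE, 36_000):.2f} Mbit/s")
    print(f"full read at 16 TB/s: {full_read_hours(EXABYTE, 16 * TERABYTE):.1f} h")
    print(f"GPU count growth: {16_000 / 6_800:.2f}x")
```

The output is about 7.04 Mbit/s, 17.4 hours, and a 2.35x growth in GPU count (from 6,800 to 16,000).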

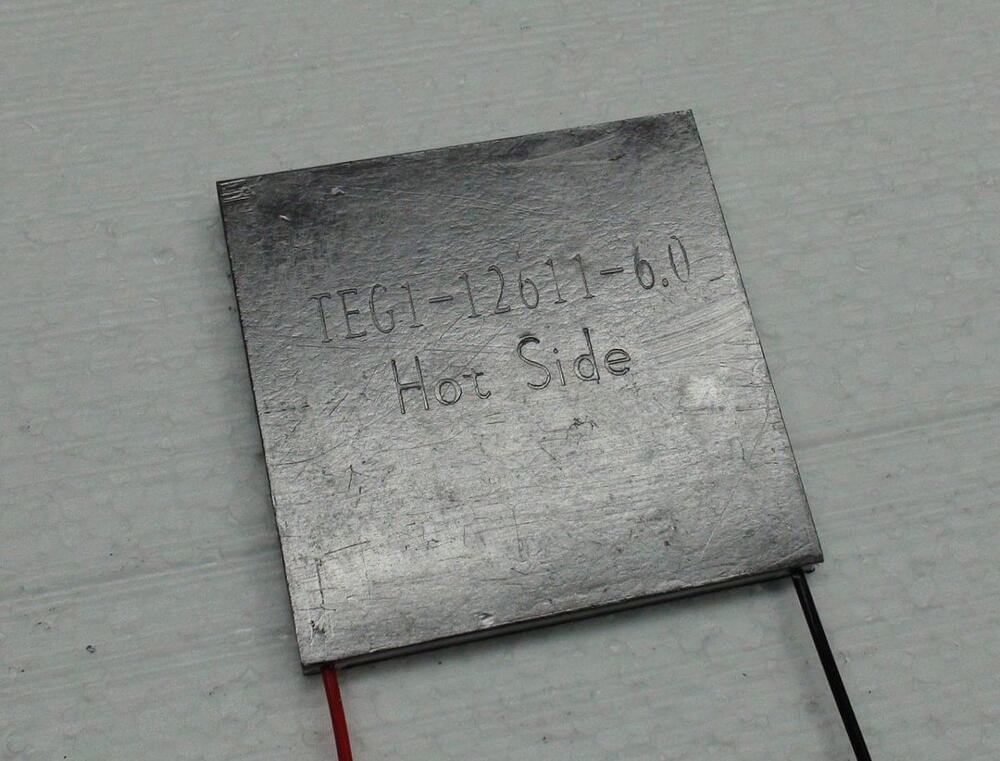

The random vector functional link (RVFL) network was used in combination with four different optimization techniques: the Jellyfish Search Algorithm (JFSA), the Artificial Ecosystem-based Optimization (AEO), the Manta Ray Foraging Optimization (MRFO) model, and the Sine Cosine Algorithm (SCA). Using the four resulting models, the academics assessed the PV-fed current, the cooling power, the average air chamber temperature, and the coefficient of performance (COP) of a PV-powered STEACS used to air-condition a 1 m³ test chamber under cooling loads varying from 65 to 260 W.
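For readers unfamiliar with the model: an RVFL (random vector functional link) network draws its hidden-layer weights at random and keeps them fixed, passes the inputs to the output both directly and through that random hidden layer, and fits only the output weights, here by closed-form ridge regression. A metaheuristic such as SCA can then search over settings the closed-form fit cannot choose by itself. The sketch below assumes the SCA tunes a single hyperparameter, the ridge penalty on a log scale, against validation error; the data are synthetic, and which parameters the researchers actually optimized is not stated here.

```python
import math
import random

def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with partial pivoting."""
    n = len(a)
    m = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= f * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (m[r][n] - sum(m[r][c] * x[c] for c in range(r + 1, n))) / m[r][r]
    return x

class RVFL:
    """Random vector functional link network with a ridge-regression output layer."""

    def __init__(self, n_inputs, n_hidden, ridge, seed=0):
        rng = random.Random(seed)
        # Hidden weights and biases are drawn once at random and never trained.
        self.w = [[rng.uniform(-1, 1) for _ in range(n_inputs)] for _ in range(n_hidden)]
        self.b = [rng.uniform(-1, 1) for _ in range(n_hidden)]
        self.ridge = ridge
        self.beta = None

    def features(self, x):
        # Direct links (raw inputs) + random tanh features + a constant bias term.
        hidden = [math.tanh(sum(wi * xi for wi, xi in zip(w, x)) + b)
                  for w, b in zip(self.w, self.b)]
        return list(x) + hidden + [1.0]

    def fit(self, xs, ys):
        h = [self.features(x) for x in xs]
        d = len(h[0])
        # Ridge normal equations: (H^T H + ridge * I) beta = H^T y.
        hth = [[sum(row[i] * row[j] for row in h) + (self.ridge if i == j else 0.0)
                for j in range(d)] for i in range(d)]
        hty = [sum(row[i] * y for row, y in zip(h, ys)) for i in range(d)]
        self.beta = solve(hth, hty)
        return self

    def predict(self, x):
        return sum(f * c for f, c in zip(self.features(x), self.beta))

def sca_minimize(objective, lower, upper, agents=8, iterations=30, a=2.0, seed=1):
    """Minimize a 1-D objective on [lower, upper] with the Sine Cosine Algorithm."""
    rng = random.Random(seed)
    pos = [rng.uniform(lower, upper) for _ in range(agents)]
    vals = [objective(p) for p in pos]
    best_val = min(vals)
    best = pos[vals.index(best_val)]
    for t in range(iterations):
        r1 = a - t * a / iterations  # shrinks toward 0: exploration first, exploitation later
        for i in range(agents):
            r2, r3, r4 = rng.uniform(0, 2 * math.pi), rng.uniform(0, 2), rng.random()
            wave = math.sin(r2) if r4 < 0.5 else math.cos(r2)
            pos[i] += r1 * wave * abs(r3 * best - pos[i])
            pos[i] = min(max(pos[i], lower), upper)  # keep agents inside the bounds
            val = objective(pos[i])
            if val < best_val:
                best, best_val = pos[i], val
    return best, best_val

def make_data(n, rng):
    """Synthetic 1-D regression data (hypothetical stand-in for measured PV data)."""
    xs = [[rng.uniform(-1, 1)] for _ in range(n)]
    return xs, [math.sin(3 * x[0]) + rng.gauss(0, 0.05) for x in xs]

def validation_mse(log_ridge, train, val, n_hidden=10):
    model = RVFL(n_inputs=1, n_hidden=n_hidden, ridge=10 ** log_ridge).fit(*train)
    xs, ys = val
    return sum((model.predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(ys)

if __name__ == "__main__":
    rng = random.Random(42)
    train, val = make_data(40, rng), make_data(20, rng)
    log_ridge, mse = sca_minimize(lambda z: validation_mse(z, train, val), -6.0, 2.0)
    print(f"SCA-chosen ridge penalty: 10^{log_ridge:.2f}, validation MSE: {mse:.4f}")
```

The same pattern extends to the other three optimizers: only `sca_minimize` would be swapped out, since each metaheuristic simply proposes candidate settings and keeps the one with the lowest validation error.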

The system was built with six solar panels, an air duct system, four batteries, a charge controller, thermoelectric coolers (TECs), an inverter, heat sinks, a test chamber, and condenser fans. “The TECs were mainly connected with the air duct arrangement and placed close to each other [and] were placed between the air duct and heat sinks,” the researchers explained. “When direct PV current was fed to TECs arranged on the sheet of the air duct system, one face [became] cold, defined as a cold air duct, and another side [became] hot, called ‘hot air.’ The air ducts were composed of an acrylic enclosure wrapped with a protection sheet.”
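The cold-face/hot-face behavior described here is the Peltier effect, and it also explains how the COP mentioned above is defined. In the standard textbook model of a single thermoelectric module (an idealization, not necessarily the researchers’ own model), with Seebeck coefficient $\alpha$, electrical resistance $R$, thermal conductance $K$, current $I$, cold- and hot-side temperatures $T_c$ and $T_h$, and $\Delta T = T_h - T_c$, the heat pumped from the cold side, the electrical input power, and the coefficient of performance are:

$$
\begin{aligned}
Q_c &= \alpha I T_c - \tfrac{1}{2} I^2 R - K\,\Delta T, \\
P_{\text{in}} &= \alpha I\,\Delta T + I^2 R, \\
\mathrm{COP} &= \frac{Q_c}{P_{\text{in}}}.
\end{aligned}
$$

The Peltier term $\alpha I T_c$ grows linearly with current while Joule heating $\tfrac{1}{2} I^2 R$ grows quadratically, so for a fixed temperature difference the cooling power peaks at a finite current, $I = \alpha T_c / R$, rather than rising indefinitely as more PV current is supplied.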

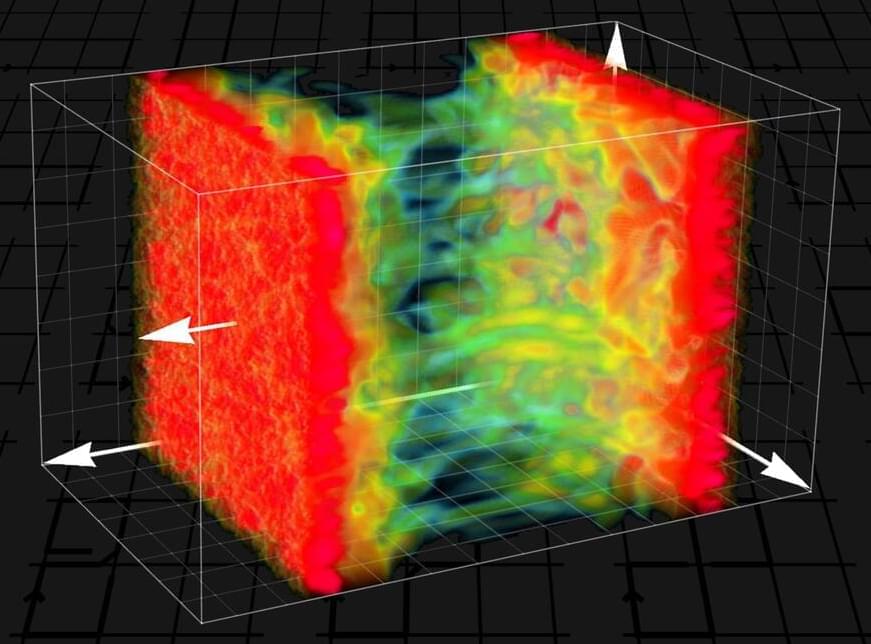

It could hardly be more complicated: tiny particles whir around wildly with extremely high energy, countless interactions occur in the tangled mess of quantum particles, and the result is a state of matter known as “quark-gluon plasma”. Immediately after the Big Bang, the entire universe was in this state; today it is produced in high-energy collisions of atomic nuclei, for example at CERN.

Such processes can only be studied using high-performance computers and highly complex computer simulations whose results are difficult to evaluate. Using artificial intelligence or machine learning for this purpose therefore seems like an obvious idea. Ordinary machine-learning algorithms, however, are not suitable for the task: the mathematical properties of particle physics require neural networks with a very special structure. Researchers at TU Wien (Vienna) have now shown how such neural networks can be used successfully for these challenging tasks in particle physics.
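Which mathematical properties are meant is not spelled out here; one standard example is gauge symmetry. In lattice simulations, the field is stored as “link” variables between neighboring grid sites, and a gauge transformation can change every link locally without changing any physical quantity, so a network that reads raw links sees differences that are not physical. The sketch below is a toy illustration under simplifying assumptions, not the TU Wien network: it uses a 4×4 periodic lattice with U(1) phases instead of the SU(3) matrices of quantum chromodynamics, and shows that plaquettes (products of links around a unit square) survive a random gauge transformation unchanged, which makes them safe inputs for a network.

```python
import cmath
import math
import random

L = 4  # hypothetical lattice size: L x L sites with periodic boundaries

def random_phase(rng):
    return cmath.exp(1j * rng.uniform(0, 2 * math.pi))

def random_links(rng):
    """U(1) link variables u[mu][x][y] for directions mu = 0 (x) and mu = 1 (y)."""
    return [[[random_phase(rng) for _ in range(L)] for _ in range(L)] for _ in range(2)]

def shift(x, y, mu):
    """Neighboring site one step in direction mu, with periodic wrap-around."""
    return ((x + 1) % L, y) if mu == 0 else (x, (y + 1) % L)

def plaquette(u, x, y):
    """U_x(n) U_y(n+x) U_x(n+y)^* U_y(n)^*: the product of links around a unit square."""
    x1, y1 = shift(x, y, 0)
    x2, y2 = shift(x, y, 1)
    return u[0][x][y] * u[1][x1][y1] * u[0][x2][y2].conjugate() * u[1][x][y].conjugate()

def gauge_transform(u, omega):
    """Apply U_mu(n) -> Omega(n) U_mu(n) Omega(n+mu)^* with a phase Omega at every site."""
    out = [[[0j] * L for _ in range(L)] for _ in range(2)]
    for mu in range(2):
        for x in range(L):
            for y in range(L):
                xs, ys = shift(x, y, mu)
                out[mu][x][y] = omega[x][y] * u[mu][x][y] * omega[xs][ys].conjugate()
    return out

def invariant_features(u):
    """Real parts of all plaquettes: inputs a gauge-respecting network could safely use."""
    return [plaquette(u, x, y).real for x in range(L) for y in range(L)]

if __name__ == "__main__":
    rng = random.Random(0)
    u = random_links(rng)
    omega = [[random_phase(rng) for _ in range(L)] for _ in range(L)]
    v = gauge_transform(u, omega)
    raw_change = max(abs(a - b) for a, b in zip(u[0][0], v[0][0]))
    feat_change = max(abs(a - b) for a, b in zip(invariant_features(u), invariant_features(v)))
    print(f"max change in raw links:          {raw_change:.3f}")
    print(f"max change in plaquette features: {feat_change:.2e}")
```

The raw links change noticeably while the plaquette features change only at floating-point rounding level. A network built from such invariant quantities (or from layers that transform them consistently) respects the symmetry by construction instead of having to learn it from data.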

There are few environments as unforgiving as the ocean. Its unpredictable weather patterns and communication limitations have left large swaths of the ocean unexplored and shrouded in mystery.

“The ocean is a fascinating environment with a number of current challenges like microplastics, algae blooms, coral bleaching, and rising temperatures,” says Wim van Rees, the ABS Career Development Professor at MIT. “At the same time, the ocean holds countless opportunities — from aquaculture to energy harvesting and exploring the many ocean creatures we haven’t discovered yet.”

Ocean engineers and mechanical engineers, like van Rees, are using advances in scientific computing to address the ocean’s many challenges and seize its opportunities. These researchers are developing technologies to better understand our oceans, and how both organisms and human-made vehicles can move within them, from the micro scale to the macro scale.