Speech is more than just a form of communication. A person’s voice conveys emotion and personality, and it is a unique trait that others can recognize. Because speech is our primary means of communication, it has driven the development of voice assistants in smart devices and other technology. Typically, virtual assistants analyze speech and respond to queries by converting the received speech signals into a model they can understand and process to generate a valid response. However, they often struggle to capture and reproduce the complexities of human speech, and they end up sounding unnatural.

Now, in a study published in the journal IEEE Access, Professor Masashi Unoki of the Japan Advanced Institute of Science and Technology (JAIST) and Dung Kim Tran, a doctoral student at JAIST, have developed a system that captures the information in speech signals in a way that resembles how humans perceive speech.

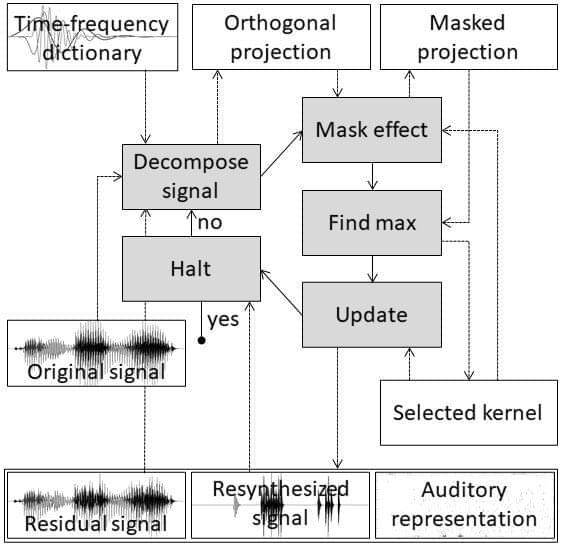

“In humans, the auditory periphery converts the information contained in input speech signals into neural activity patterns (NAPs) that the brain can identify. To emulate this function, we used a matching pursuit algorithm to obtain sparse representations of speech signals, or signal representations with the minimum possible significant coefficients,” explains Prof. Unoki. “We then used psychoacoustic principles, such as the equivalent rectangular bandwidth scale, gammachirp function, and masking effects to ensure that the auditory sparse representations are similar to that of the NAPs.”
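
To make the idea of a sparse representation concrete, here is a minimal sketch of a generic matching pursuit in Python. It is not the researchers' implementation: the dictionary of sinusoidal atoms, the function names, and the toy signal are all assumptions chosen for illustration, and the sketch leaves out the gammachirp atoms, the equivalent rectangular bandwidth scale, and the masking effects described in the study. At each step the algorithm picks the dictionary atom that correlates most strongly with what remains of the signal, records its coefficient, and subtracts its contribution.

```python
import math


def dot(a, b):
    """Inner product of two equal-length sequences."""
    return sum(x * y for x, y in zip(a, b))


def normalize(v):
    """Scale a vector to unit Euclidean norm."""
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]


def matching_pursuit(signal, atoms, max_atoms=10, tol=1e-6):
    """Greedy matching pursuit over a dictionary of unit-norm atoms.

    Returns a list of (atom_index, coefficient) pairs and the final residual.
    """
    residual = list(signal)
    selected = []
    for _ in range(max_atoms):
        # Correlate the current residual with every atom in the dictionary.
        correlations = [dot(residual, atom) for atom in atoms]
        best = max(range(len(atoms)), key=lambda i: abs(correlations[i]))
        coeff = correlations[best]
        if abs(coeff) < tol:
            break  # nothing significant is left to explain
        selected.append((best, coeff))
        # Remove the chosen atom's contribution from the residual.
        residual = [r - coeff * a for r, a in zip(residual, atoms[best])]
    return selected, residual


# Hypothetical toy dictionary: eight unit-norm sinusoids of 64 samples each.
# (The study uses auditory-motivated atoms instead; these are stand-ins.)
n = 64
atoms = [
    normalize([math.sin(2 * math.pi * k * t / n) for t in range(n)])
    for k in range(1, 9)
]

# A toy signal built from just two atoms, so its sparse code has two entries.
signal = [3.0 * a - 1.5 * b for a, b in zip(atoms[1], atoms[4])]

selected, residual = matching_pursuit(signal, atoms, max_atoms=2)
print(selected)
```

With this toy dictionary, two iterations should recover atoms 1 and 4 with coefficients close to 3.0 and -1.5, leaving a residual that is essentially zero. The 64-sample signal is thus described by two significant coefficients, which is the sense in which the representation is sparse.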