An atomic-scale experiment all but settles the origin of the strong form of superconductivity seen in cuprate crystals, confirming a 35-year-old theory.

https://youtube.com/watch?v=-ZeeuDrknYc&feature=share

Technology — An investigation into the advancements in digital technology unique to the gaming industry. These technologies could either enhance our lives and make the world a better place to live, or leave us in a dystopian future where we are ruled and controlled by the very technologies we rely on.

End Game — Technology (2021)

Director: J. Michael Long.

Writers: O.H. Krill.

Stars: Paul Jamison, Razor Keeves.

Genre: Documentary.

Country: United States.

Language: English.

Release Date: 2021 (USA)

Synopsis:

The technology we rely on for everyday communication, entertainment and medicine could one day be used against us. With facial recognition, drone surveillance, human chipping, and nano viruses, the possibility is no longer just science-fiction. Could artificial intelligence become the dominant life form?

Reviews:

“Shocking insight into the possibilities that lie ahead.” — Philip Gardiner, best-selling author.

“Well researched and highly captivating.” — Phenomenon Magazine.


Yakir Aharonov, winner of the U.S. National Medal of Science, summarizes the main ideas behind the two-state vector formalism.

At the research workshop on the Many-Worlds Interpretation of Quantum Mechanics at the Center for Quantum Science and Technology, Tel Aviv University, 18–24 October 2022, Prof. Lev Vaidman asked: “Why is the many-worlds interpretation not in the consensus?” This was my answer.


Elon GOAT Token’s efforts to deliver the statue landed the company on Twitter’s U.S. trending page. Musk purchased the social network last month, causing an upheaval with mass layoffs, departed advertisers and potential changes to the process of obtaining a Twitter Blue check mark.

Costing a total of $600,000, according to Elon GOAT Token, the Musk sculpture is a nod to the billionaire’s fame: the rocket represents SpaceX, the spacecraft company Musk founded, and the literal goat plays on “GOAT,” an acronym for the phrase “Greatest Of All Time.”

QC: Still not actually useful, but it’s increasingly intriguing.

• Through Innovate UK, the Department for Transport has commissioned a Costain-led consortium to assess the economic and technical potential of the UK’s first ‘eHighway’.

• The study is part of the UK government’s plan to reach zero net emissions for heavy road freight.

• It aims to demonstrate the technology is ready for a national roll-out.

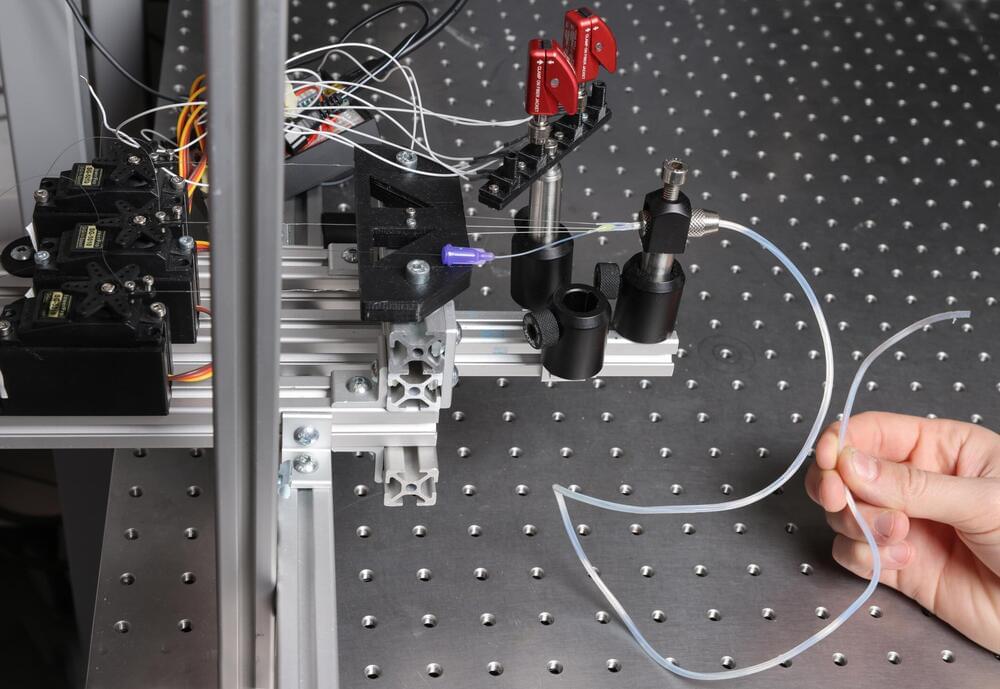

Borrowing from methods used to produce optical fibers, researchers from EPFL and Imperial College have created fiber-based soft robots with advanced motion control that integrate other functionalities, such as electric and optical sensing and targeted delivery of fluids.

In recent decades, catheter-based surgery has transformed medicine, giving doctors a minimally invasive way to do anything from placing stents and targeting tumors to extracting tissue samples and delivering contrast agents for medical imaging. While today’s catheters are highly engineered robotic devices, in most cases, the task of pushing them through the body to the site of intervention continues to be a manual and time-consuming procedure.

Combining advances in the development of functional fibers with developments in smart robotics, researchers from the Laboratory of Photonic Materials and Fiber Devices in EPFL’s School of Engineering have created multifunctional catheter-shaped soft robots that, when used as catheters, could be remotely guided to their destination or possibly even find their own way through semi-autonomous control. “This is the first time that we can generate soft catheter-like structures at such scalability that can integrate complex functionalities and be steered, potentially, inside the body,” says Fabien Sorin, the study’s principal investigator. Their work was published in the journal Advanced Science.