Hallucinations are spooky and, fortunately, fairly rare. But, a new study suggests, the real question isn’t so much why some people occasionally experience them. It’s why all of us aren’t hallucinating all the time.

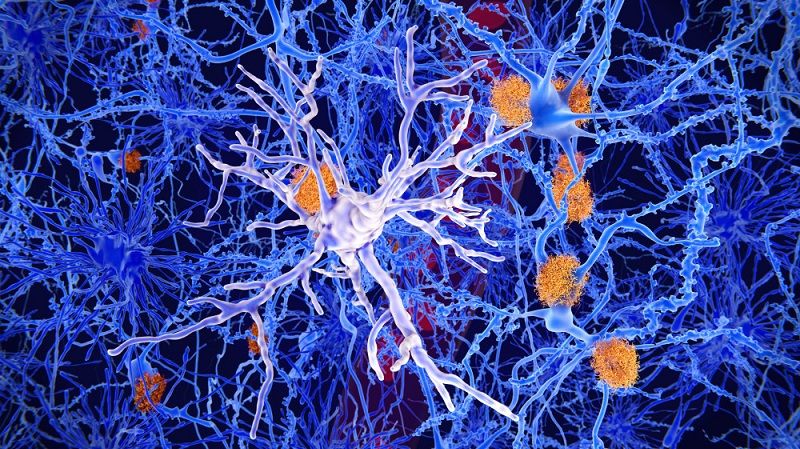

In the study, Stanford University School of Medicine neuroscientists stimulated nerve cells, or neurons, in the visual cortex of mice to induce an illusory image in the animals’ minds. The scientists needed to stimulate only a surprisingly small number of neurons to generate the perception, which caused the mice to behave in a particular way.

“Back in 2012, we had described the ability to control the activity of individually selected neurons in an awake, alert animal,” said Karl Deisseroth, MD, PhD, professor of bioengineering and of psychiatry and behavioral sciences. “Now, for the first time, we’ve been able to advance this capability to control multiple individually specified cells at once, and make an animal perceive something specific that in fact is not really there, and behave accordingly.”