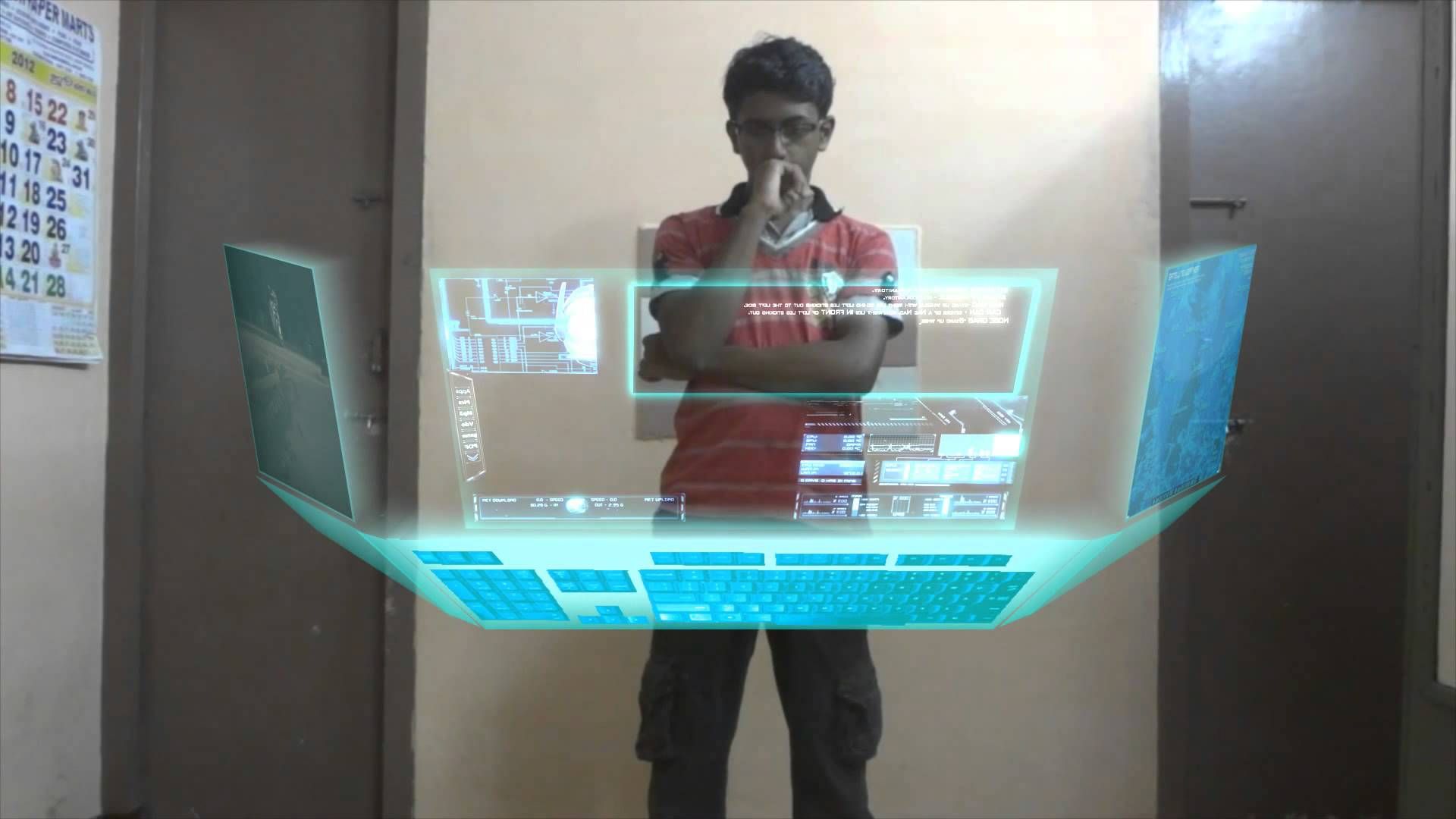

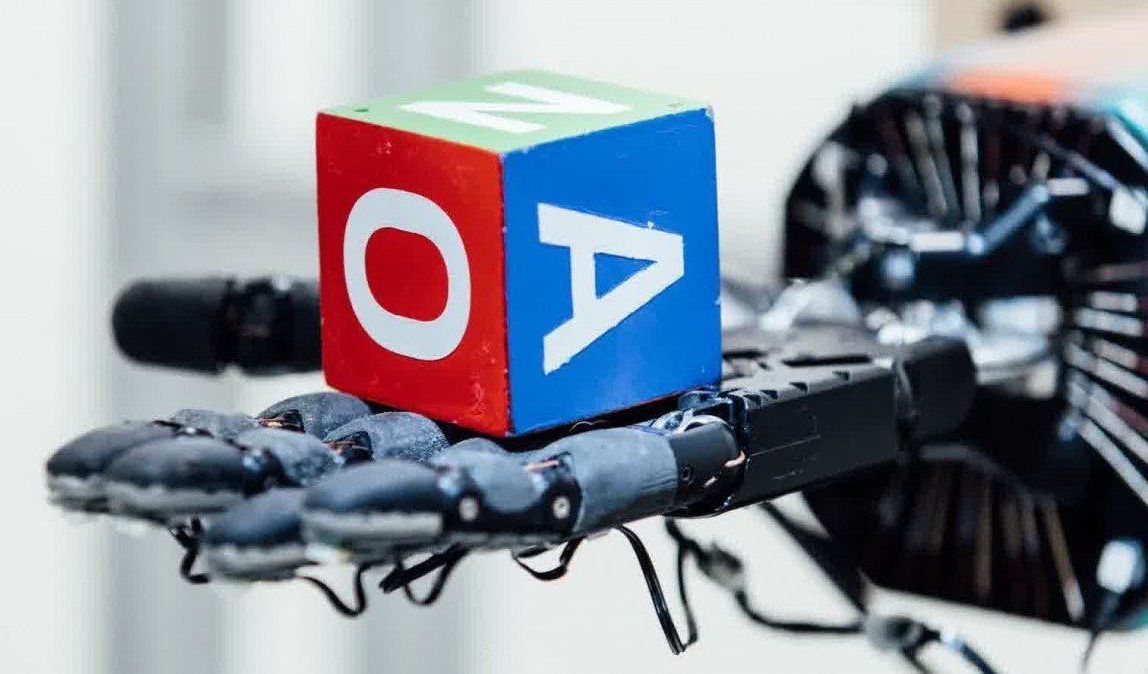

Computing innovation, computer-generated images, Virtual Reality Glasses, Hybrid Reality, communications, Holographic platform, AR, VR, PC, lifelike experience, 3D cameras, cosmic computing, computer security, gaming displays, in-flight entertainment, computer code, Holographic ideal/paradigm, gaming mechanics, automotive, medical, space, spatial, holographic memory, Artificial Neural Networks, Robotics, holographic 3D, software company, mixed-reality, holographic data, hologram monitors, hologram keyboards, voice equipment, projector system, Holographic apps, HD photography, smartphones, tablets, TVs, laptops, digital displays, 360 Video, Virtual Reality Headsets, Mobile Platforms, holographic universe, ubiquitous computing paradigm, virtual images, Holoquad, Holographic Projector Pyramid, cloud computing, spaceships, teleportation, anti-gravity devices, emulation, advanced technology, light field displays, Mobile Hologram Technology, computer programs, untethered, Immersive Technology, Computer Chips, Elohim computer, custom software, mobile application development, computing library, human-computer interactions, Spider-Robots, pop-up gaming displays, automated machinery, computer-generated simulation, 3D Pyramid, consumer electronics, personal computers, holographic images, real-world objects, hardware interconnection, missionary, virtual assistant, Computer Systems Structure, two-dimensional computer display, computerization, Projection Screen, Portable, 3D printer, Hologram goggles, 3D Holographic Projection Technology, Hologram Computer Table, hologram generator, multilevel computer, mixed reality, Bluetooth-enabled, Virtual Reality Display, transparent screen display, quantum computer, computer animation, 3D plasma display, metasurface, Dark Energy, holographic interferograms, photorefractive, Holographic atomic memory, computer-generated hologram, real-time hologram, x-ray mirror mandrels, virtual wavefront recording plane, Artificial intelligence, AI, Human Resources, Advertising, Animation, Graphic Web Design, Photography, computer science, human-robot interaction, Emergency Medical Hologram, wearable computing, bio-computing, battlefield simulations, Holographic Associative Memory, artificial neural network, Digital Avatar.