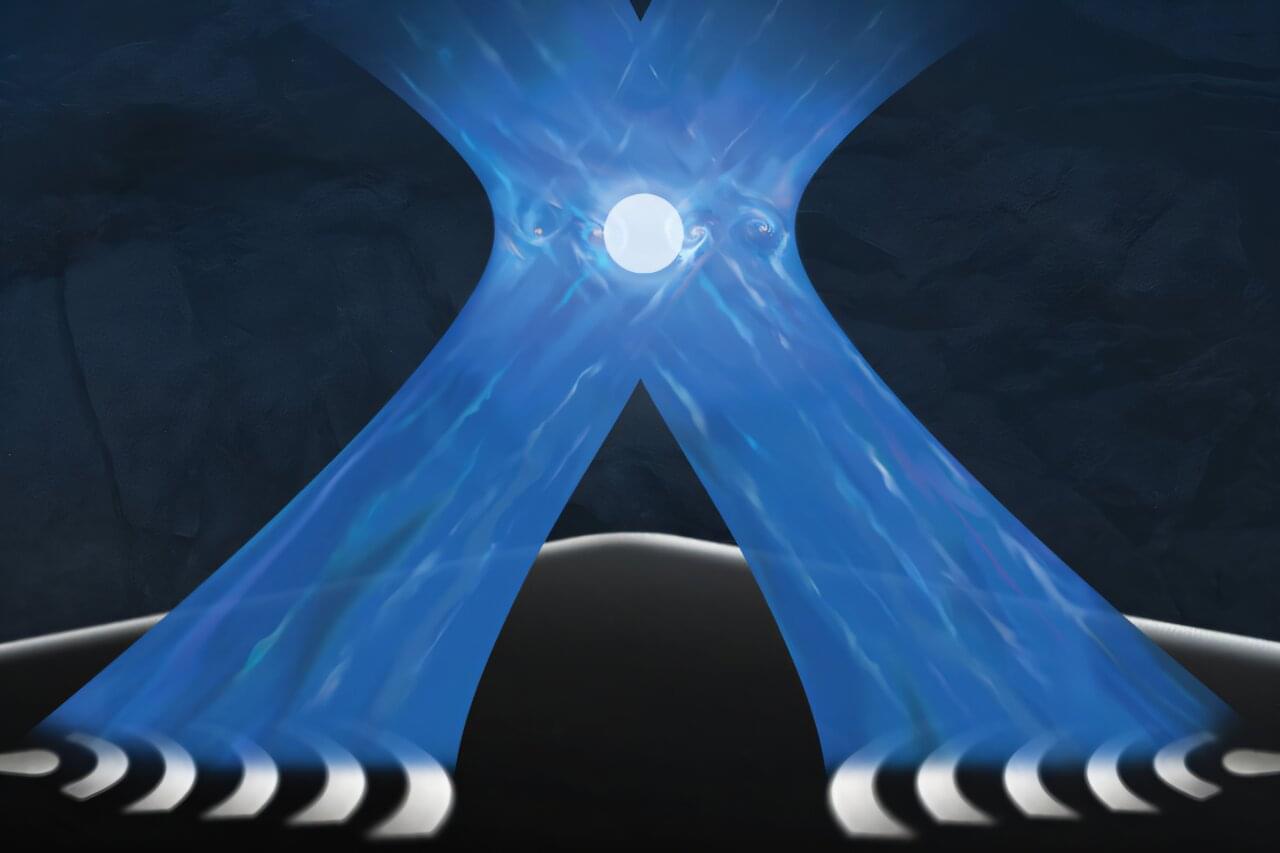

NASA is announcing the availability of its newest supercomputer, Athena, an advanced system designed to support a new generation of missions and research projects. The newest member of the agency’s High-End Computing Capability project expands the resources available to help scientists and engineers tackle some of the most complex challenges in space, aeronautics, and science.

Housed in the agency’s Modular Supercomputing Facility at NASA’s Ames Research Center in California’s Silicon Valley, Athena delivers more computing power than any other NASA system, surpassing its predecessors, Aitken and Pleiades, in both power and efficiency. The new system, which was rolled out to existing users in January after a beta testing period, delivers more than 20 petaflops of peak performance (one petaflop is one quadrillion floating-point calculations per second) while reducing the agency’s supercomputing utility costs.

“Exploration has always driven NASA to the edge of what’s computationally possible,” said Kevin Murphy, chief science data officer and lead for the agency’s High-End Computing Capability portfolio at NASA Headquarters in Washington. “Now with Athena, NASA will expand its efforts to provide tailored computing resources that meet the evolving needs of its missions.”