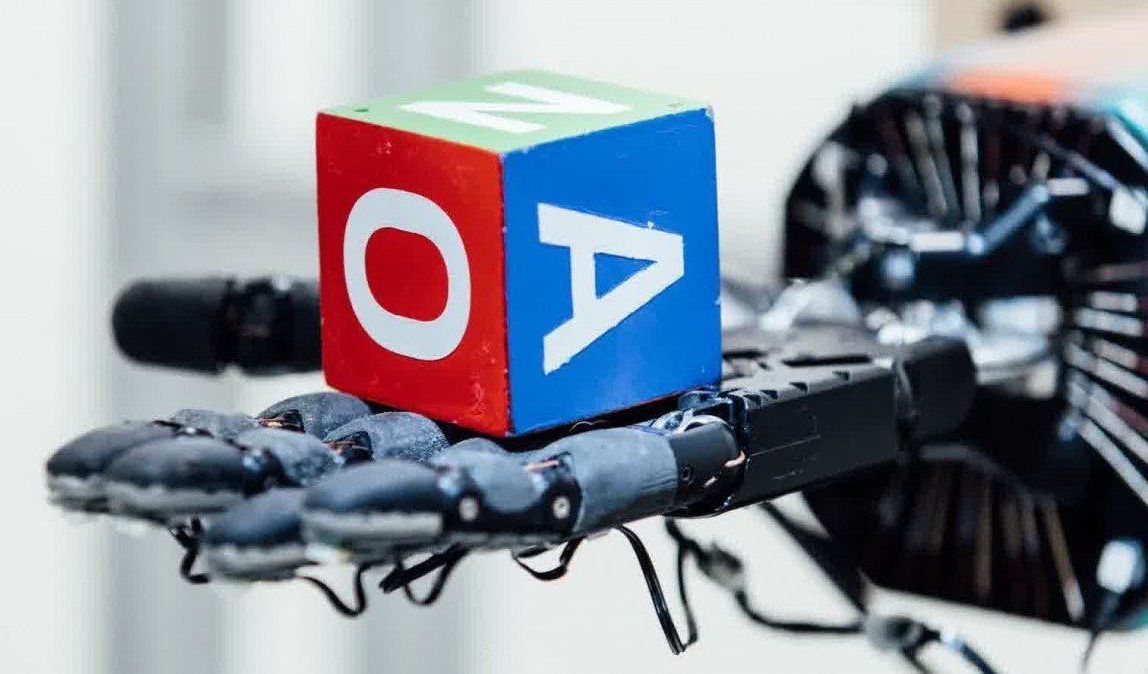

A reinforcement learning algorithm allows Dactyl to learn physical tasks by practicing them in a virtual-reality environment.

This robot was designed for harvesting vegetables inside greenhouses.

This robot cleans all of the glass surfaces in your home. (Via ECOVACS ROBOTICS)

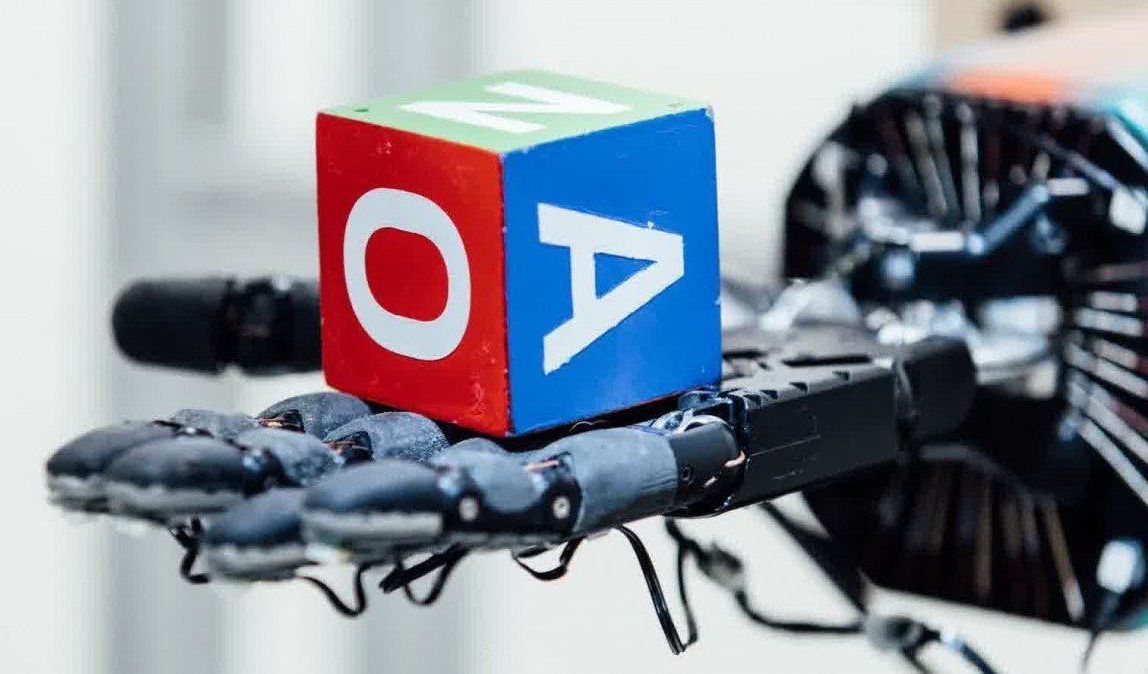

Space agencies have successfully studied asteroids and comets up close on several occasions, and plans to capture one for mining are also in the works. A group of Chinese scientists is looking to go a step further. Their ambitious plan involves not just capturing an asteroid, but bringing it down to the surface of Earth for study and mining.

This does sound pretty crazy on the face of it, but researchers from the National Space Science Center of the Chinese Academy of Sciences say it’s feasible. Researcher Li Mingtao and his team presented the idea in Shenzhen at a conference exploring ideas for future technology. Li says that the mission could focus on asteroids that cross Earth’s orbit and could therefore become a hazard in the future. The Chinese plan could turn that hazard into a new source of rare materials.

The asteroids targeted by this project would be on the small side — probably just a few hundred tons. The first step is to send a fleet of small robotic probes to intercept the space rock. Then, they would deploy a “bag” of some sort that covers the asteroid, allowing the robots to slowly alter its course and steer it back to Earth.

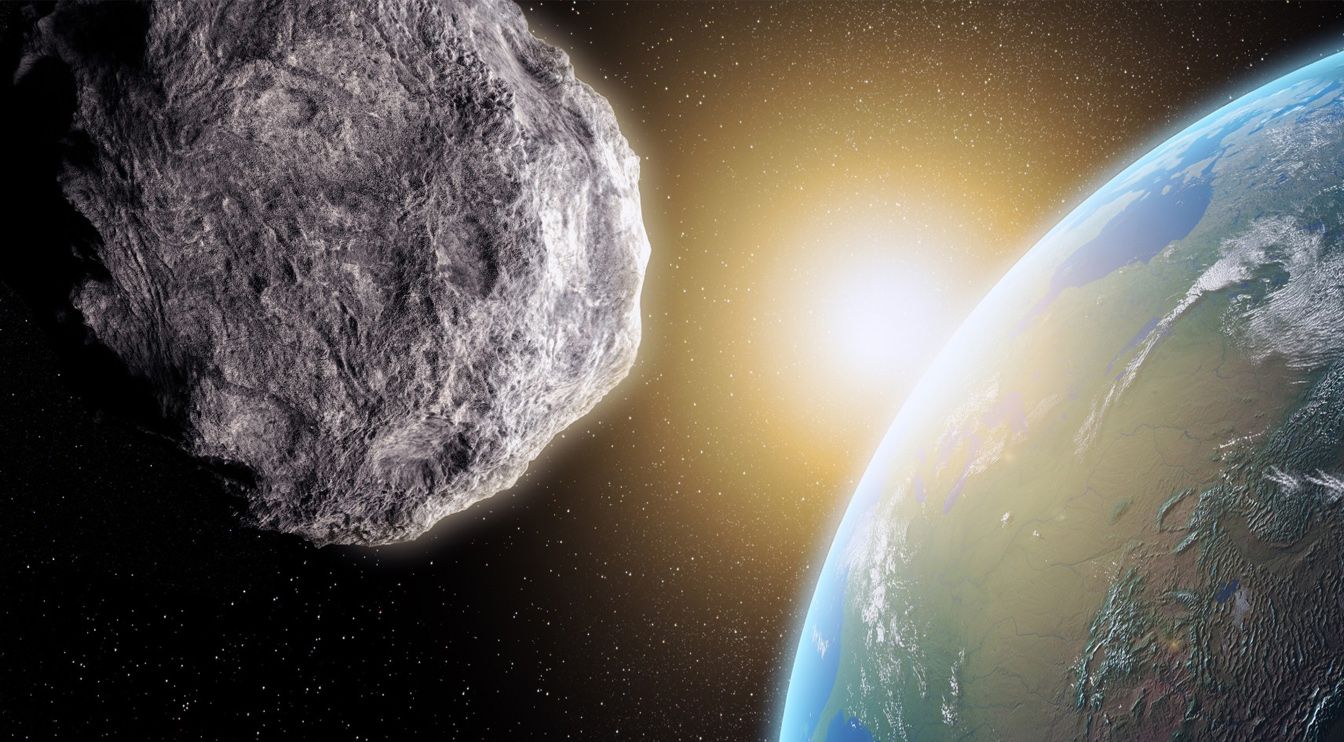

Companies like to flaunt their use of artificial intelligence to the point where it’s virtually meaningless, but the truth is that AI as we know it is still quite dumb. While it can generate useful results, it can’t explain why it produced those results in meaningful terms, or adapt to ever-evolving situations. DARPA thinks it can move AI forward, though. It’s launching an Artificial Intelligence Exploration program that will invest in new AI concepts, including “third wave” AI with contextual adaptation and an ability to explain its decisions in ways that make sense. If it identified a cat, for instance, it could explain that it detected fur, paws and whiskers in a familiar cat shape.

Importantly, DARPA also hopes to step up the pace. It’s promising “streamlined” processes that will lead to projects starting three months after a funding opportunity shows up, with feasibility becoming clear about 18 months after a team wins its contract. You might not have to wait several years or more just to witness an AI breakthrough.

The industry isn’t beholden to DARPA’s schedule, of course. It’s entirely possible that companies will develop third wave AI just as quickly on their own terms. This program could light a fire under those companies, mind you. And if nothing else, it suggests that AI pioneers are ready to move beyond today’s ‘basic’ machine learning and closer to AI that actually thinks instead of merely churning out data.

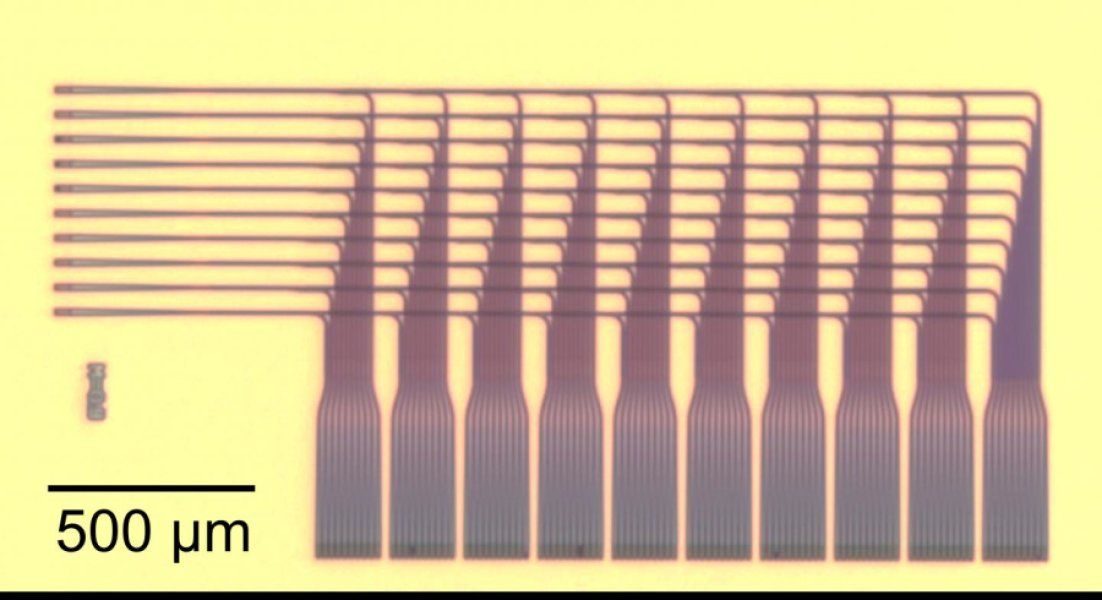

This is the next generation of robotic prosthetics. Here’s how they work.

Watch the full TED Talk here: http://bit.ly/2LQErPg

“The eyes are the window of the soul.” Cicero said that. But it’s a bunch of baloney.

Unless you’re a state-of-the-art set of machine-learning algorithms with the ability to demonstrate links between eye movements and four of the big five personality traits.

If that’s the case, then Cicero was spot on.