It happens only once every 100 million decays of the Σ+ particle.

Category: particle physics – Page 78

SOSV bets plasma will change everything from semiconductors to spacecraft

It sees so much potential that it plans on investing in more than 25 plasma-related startups over the next five years. It is also opening a new Hax lab space in partnership with the New Jersey Economic Development Authority and the U.S. Department of Energy’s Princeton Plasma Physics Laboratory.

Nuclear fusion is an obvious place to seed plasma startups. The potential power source works by compressing fuel until it turns into a dense plasma, so dense that atoms begin fusing, releasing energy in the process.

“There’s so much here. The best ideas have yet to come to unlock a lot of potential in the fusion space,” Duncan Turner, general partner at SOSV, told TechCrunch.

Chemistry at the beginning: How molecular reactions influenced the formation of the first stars

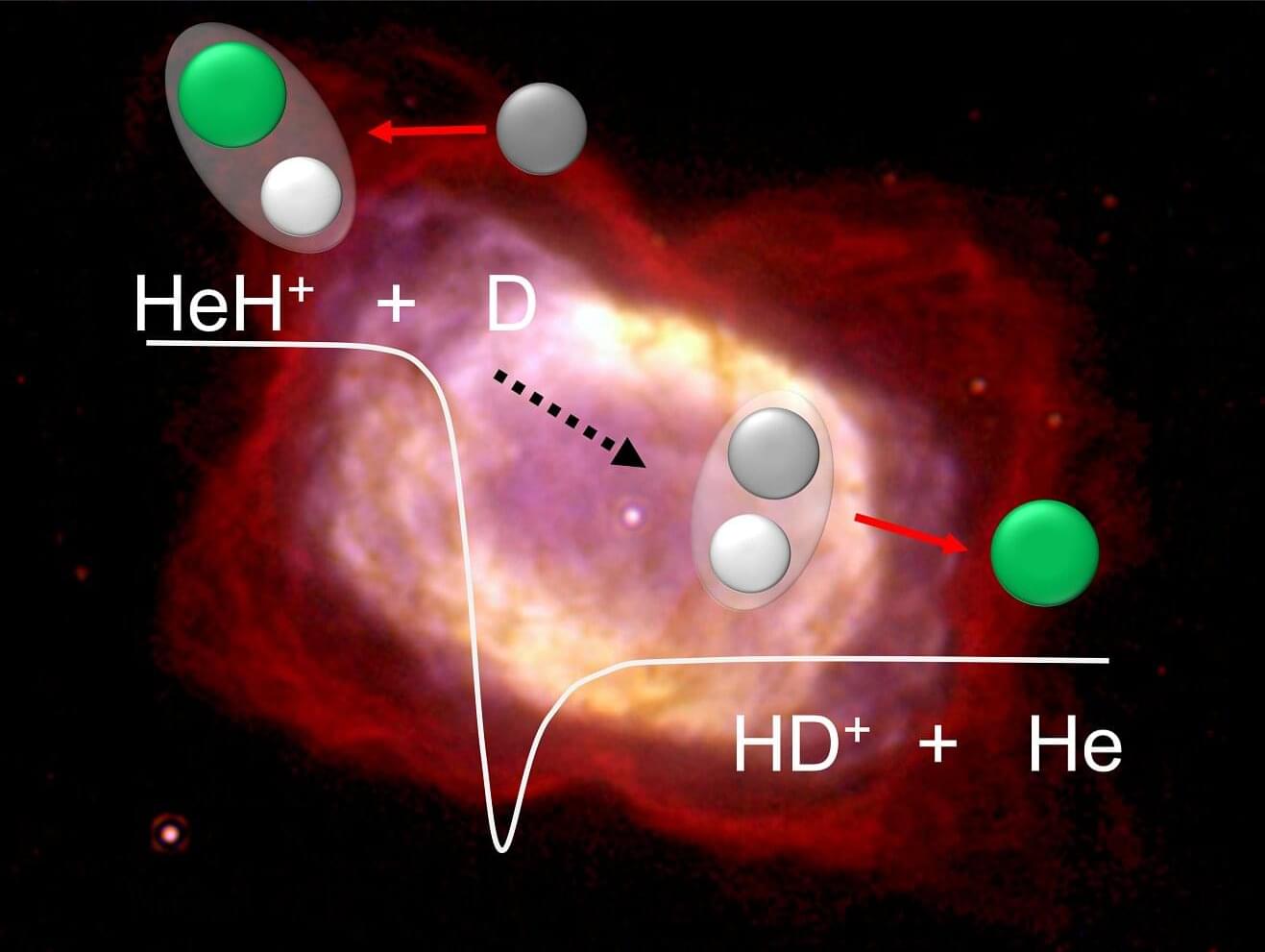

Immediately after the Big Bang, which occurred around 13.8 billion years ago, the universe was dominated by unimaginably high temperatures and densities. However, after just a few seconds, it had cooled down enough for the first elements to form, primarily hydrogen and helium. These were still completely ionized at this point, as it took almost 380,000 years for the temperature in the universe to drop enough for neutral atoms to form through recombination with free electrons. This paved the way for the first chemical reactions.

The oldest molecule in existence is the helium hydride ion (HeH⁺), formed from a neutral helium atom and an ionized hydrogen nucleus. This marks the beginning of a chain reaction that leads to the formation of molecular hydrogen (H₂), which is by far the most common molecule in the universe.
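
The chain described above can be written out as a short reaction sequence. This is a sketch of one commonly cited textbook pathway and is not taken from the excerpt itself. The choice of these three steps is an assumption made here for illustration.

```latex
% Assumed illustrative pathway from helium hydride to molecular hydrogen
\begin{align*}
\mathrm{He} + \mathrm{H^+} &\rightarrow \mathrm{HeH^+} + \gamma
  && \text{(radiative association forms helium hydride)} \\
\mathrm{HeH^+} + \mathrm{H} &\rightarrow \mathrm{He} + \mathrm{H_2^+}
  && \text{(helium is released, leaving a hydrogen molecular ion)} \\
\mathrm{H_2^+} + \mathrm{H} &\rightarrow \mathrm{H_2} + \mathrm{H^+}
  && \text{(charge transfer yields neutral molecular hydrogen)}
\end{align*}
```

In this picture the helium atom and the proton are both returned at the end, so they act as intermediaries rather than being consumed.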

Recombination was followed by the “dark age” of cosmology: although the universe was now transparent due to the binding of free electrons, there were still no light-emitting objects, such as stars. Several hundred million years passed before the first stars formed.

Light Versus Light: The Secret Physics Battle That Could Rewrite the Rules

In a fascinating dive into the strange world of quantum physics, scientists have shown that light can interact with itself in bizarre ways—creating ghost-like virtual particles that pop in and out of existence.

This “light-on-light scattering” isn’t just a theoretical curiosity; it could hold the key to solving long-standing mysteries in particle physics.

Quantum light: why lasers don’t clash like lightsabers.

How materials science could revolutionise technology — with Jess Wade

Jess Wade explains the concept of chirality, and how it might revolutionise technological innovation.

This lecture was recorded at the Ri on 14 June 2025.

Imagine if we could keep our mobile phones on full brightness all day, without worrying about draining our battery? Or if we could create a fuel cell that used sunlight to convert water into hydrogen and oxygen? Or if we could build a low-power sensor that could map out brain function?

Whether it’s optoelectronics, spintronics or quantum, the technologies of tomorrow are underpinned by advances in materials science and engineering. For example, chirality, a symmetry property of mirror-image systems that cannot be superimposed, can be used to control the spin of electrons and photons. Join functional materials scientist Jess Wade as she explores how advances in chemistry, physics and materials offer new opportunities in technological innovation.

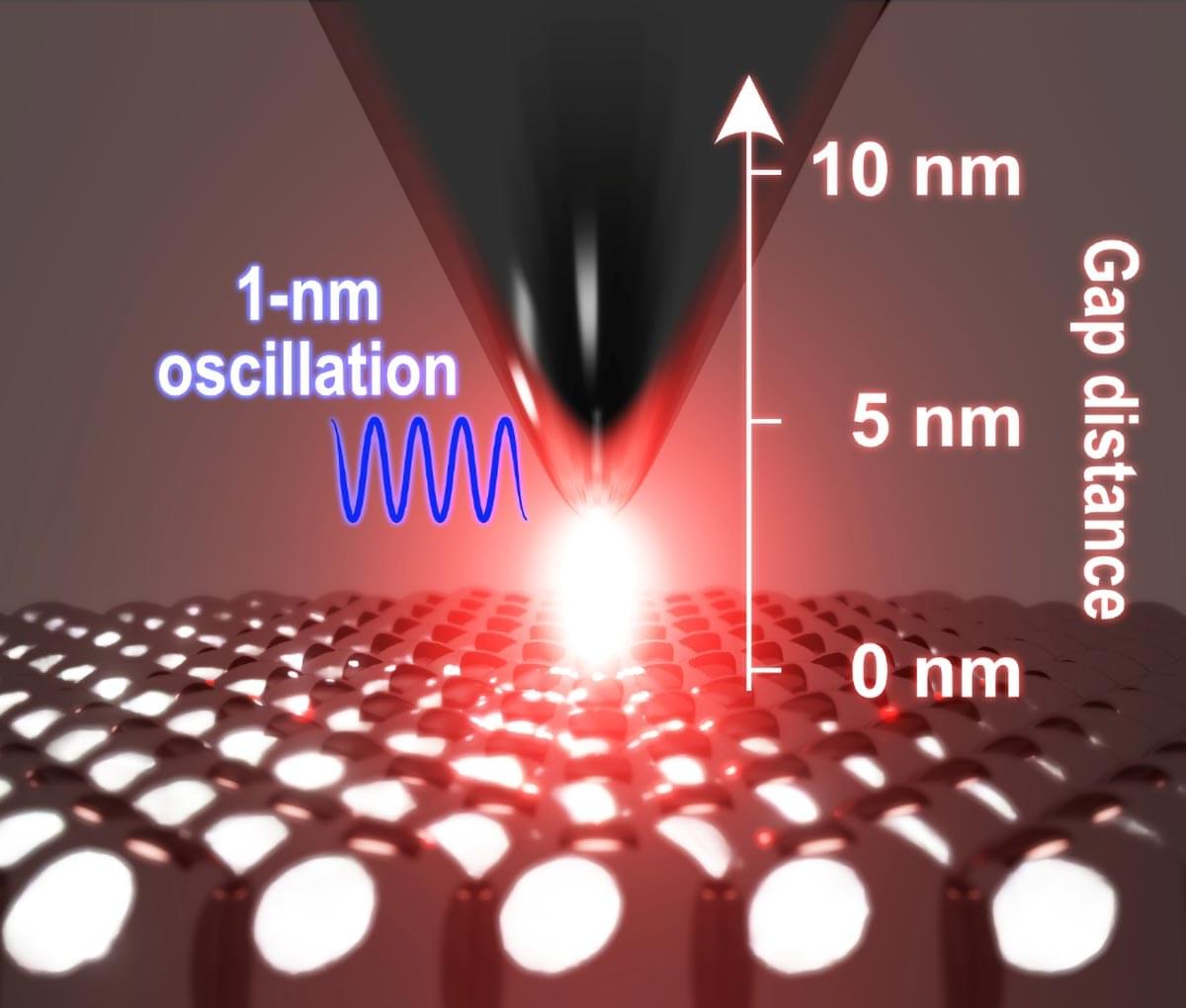

Laser pulses and nanoscale changes yield stable skyrmion bags for advanced spintronics

A team of researchers at the Max Born Institute and collaborating institutions has developed a reliable method to create complex magnetic textures, known as skyrmion bags, in thin ferromagnetic films. Skyrmion bags are donut-like, topologically rich spin textures that go beyond the widely studied single skyrmions.

Gold Does Something Unexpected When Superheated Past Its Melting Point

Gold remains perfectly solid when briefly heated beyond previously hypothesized limits, a new study reports, which may mean a complete reevaluation of how matter behaves under extreme conditions.

The international team of scientists behind the study used intense, super-short laser blasts to push thin fragments of gold past a limit known as the entropy catastrophe, the point at which a solid becomes too hot to resist melting. It’s like a melting point, but for edge cases where the physics isn’t conventional.

In a phenomenon called superheating, a solid can be heated too quickly for its atoms to have time to enter a liquid state. Crystals can remain intact way past their standard melting point, albeit for a very, very brief amount of time.

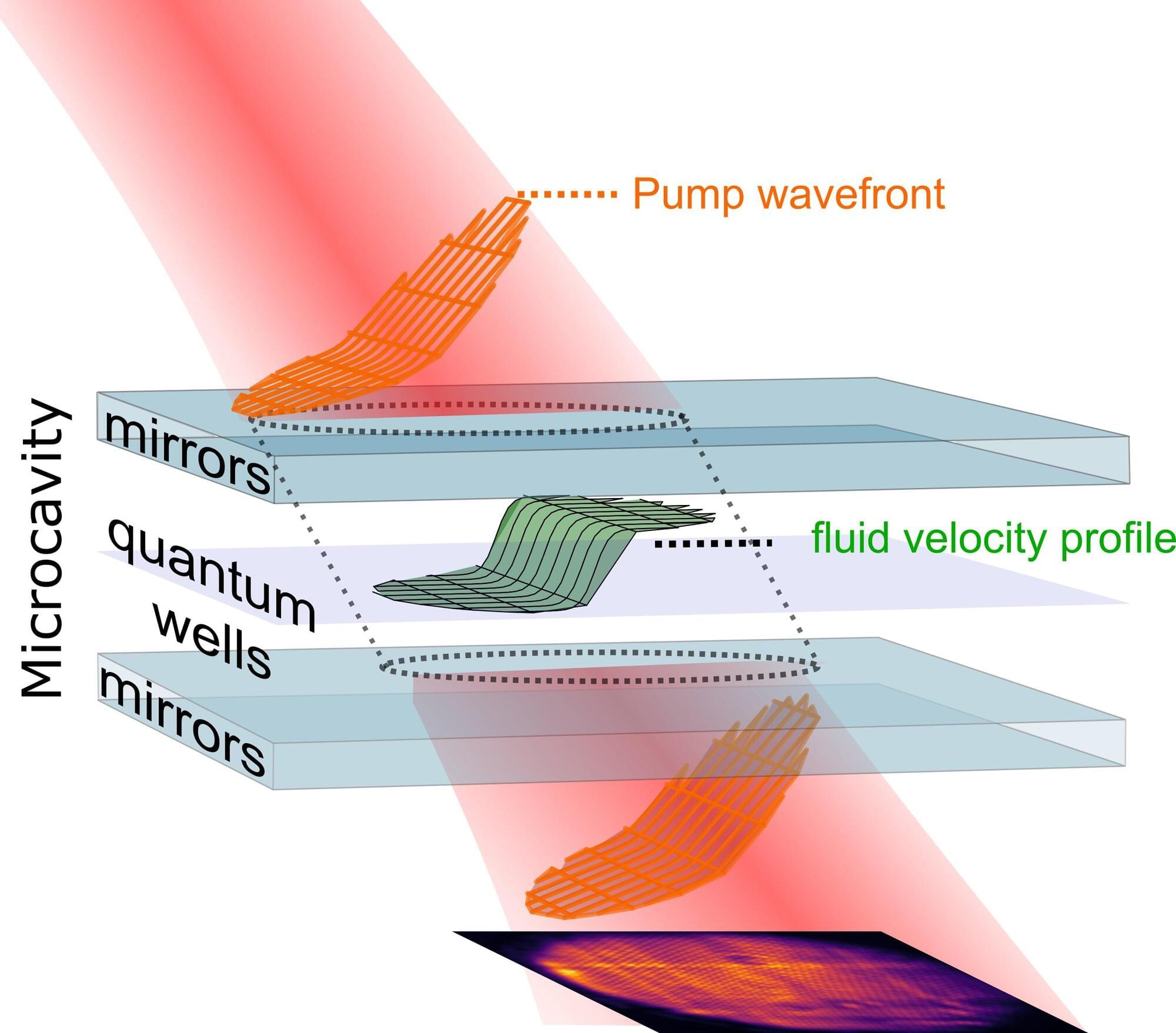

Simulating the Hawking effect and other quantum field theory predictions with polariton fluids

Quantum field theory (QFT) is a physics framework that describes how particles and forces behave based on principles rooted in quantum mechanics and Albert Einstein’s special relativity theory. This framework predicts the emergence of various remarkable effects in curved spacetimes, including Hawking radiation.

Hawking radiation is the thermal radiation theorized to be emitted by black holes close to the event horizon (i.e., the boundary around a black hole beyond which gravity becomes too strong for anything to escape). As ascertaining the existence of Hawking radiation and testing other QFT predictions in space is currently impossible, physicists have been trying to identify physical systems that could mimic aspects of curved spacetimes in experimental settings.
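
For a sense of scale, the standard textbook expression for the temperature of this thermal radiation is sketched below. It is not quoted in the excerpt and is added here only as context. It assumes a non-rotating, uncharged black hole of mass $M$, and the solar-mass value is an order-of-magnitude estimate.

```latex
% Hawking temperature of a non-rotating, uncharged black hole of mass M
% (hbar: reduced Planck constant, c: speed of light,
%  G: gravitational constant, k_B: Boltzmann constant)
\[
  T_H = \frac{\hbar c^{3}}{8 \pi G M k_B}
\]
% Order-of-magnitude value for M equal to one solar mass (about 2e30 kg)
\[
  T_H \approx 6 \times 10^{-8}\,\mathrm{K}
\]
```

Because the temperature scales as $1/M$, heavier black holes are colder still. Even a solar-mass black hole would sit far below the roughly 2.7 K cosmic microwave background, which helps explain why laboratory analogues are attractive.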

Researchers at Sorbonne University recently identified a promising new experimental platform for simulating QFT and testing its predictions. Their proposed QFT simulator, outlined in a paper published in Physical Review Letters, consists of a one-dimensional quantum fluid made of polaritons, quasiparticles that emerge from strong interactions between photons (i.e., light particles) and excitons (i.e., bound pairs of electrons and holes in semiconductors).