As the utility of AI systems has grown dramatically, so has their energy demand. Training new systems is extremely energy intensive, as it generally requires massive data sets and lots of processor time. Executing a trained system tends to be much less involved; smartphones can easily manage it in some cases. But because trained systems are executed so many times, that energy use also tends to add up.

Fortunately, there are lots of ideas on how to bring the energy used for execution back down. IBM and Intel have experimented with processors designed to mimic the behavior of actual neurons. IBM has also tested executing neural network calculations in phase change memory to avoid making repeated trips to RAM.

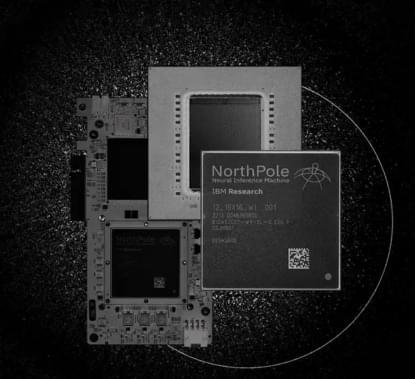

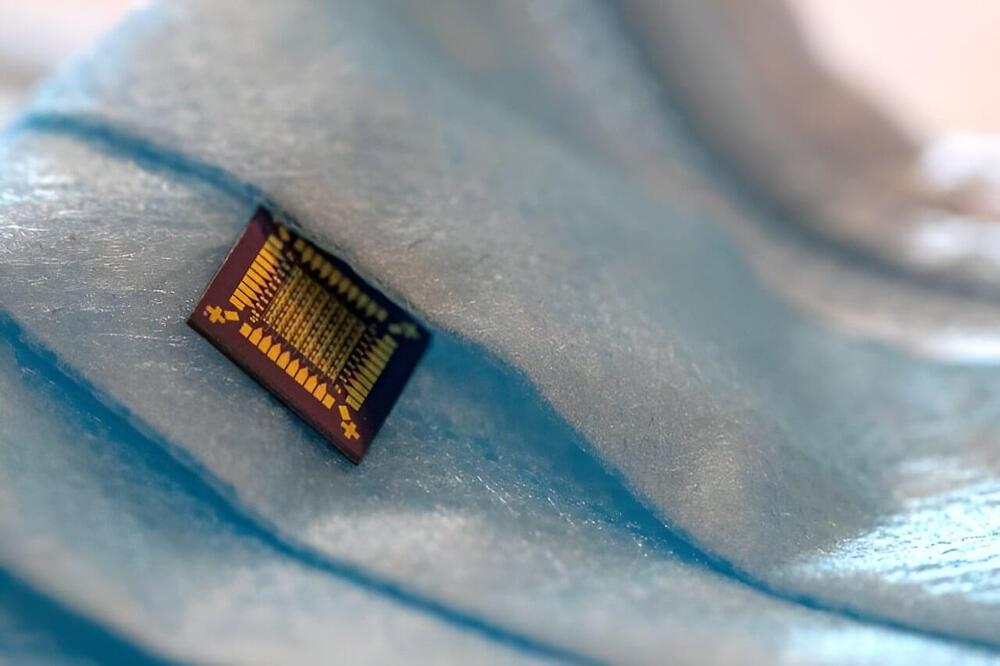

Now, IBM is back with yet another approach, one that’s a bit of “none of the above.” The company’s new NorthPole processor takes some of the ideas behind all of these approaches and merges them with a very stripped-down way of running calculations. The result is a highly power-efficient chip for executing inference-based neural networks. For things like image classification or audio transcription, the chip can be up to 35 times more efficient than relying on a GPU.