Authy’s 2FA app has been hacked and as many as 33 million cellphone numbers have been stolen.

AR/VR/MR glasses released in April 2024? I didn't know they were so far along already. I'm curious whether anyone has used these, and what your impressions are. They're still a little bulky, but my current prediction is that they will take the place of cell phones by 2029/2030. They need to be slimmed down a bit first, though; give it 5 years.

Step into the future with Rokid AR Lite! Our sleek and stylish design lets you take to the streets in style, while its multi-screen feature wraps around your space for seamless work and play. Activate sports mode for unwavering screen stabilization, and immerse yourself in vivid spatial videos in 3D, bringing your memories to life like never before. Don’t miss out on this revolutionary AR experience!

🛒 Get it at the best price on Kickstarter: https://bit.ly/3X1P0X1

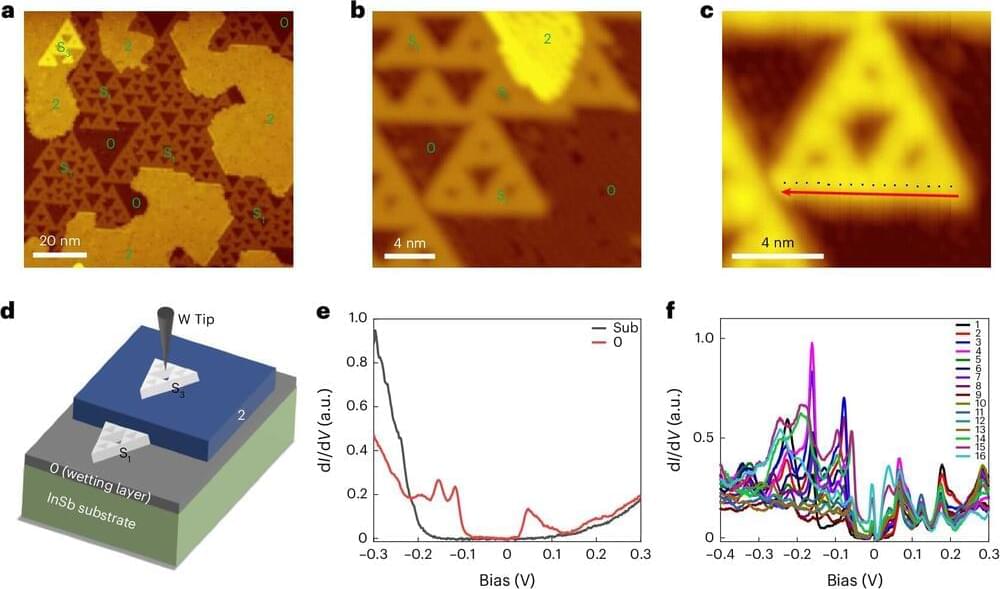

What if we could find a way to make electric currents flow without energy loss? A promising approach involves using materials known as topological insulators. They are known to exist in one (wire), two (sheet) and three (cube) dimensions, each with different possible applications in electronic devices.

Theoretical physicists at Utrecht University, together with experimentalists at Shanghai Jiao Tong University, have discovered that topological insulators may also exist at 1.58 dimensions, and that these could be used for energy-efficient information processing. Their study was published in Nature Physics.
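For anyone wondering how a dimension can be 1.58: that figure matches the similarity dimension of a Sierpinski triangle, a fractal built from 3 copies of itself, each half the size. The excerpt doesn't name the structure, so treat the Sierpinski assumption (and the helper name `similarity_dimension`) as mine, not the paper's. A minimal sketch of the arithmetic:

```python
import math


def similarity_dimension(copies: int, scale: float) -> float:
    """Dimension D of a self-similar shape that splits into `copies`
    smaller pieces, each shrunk by a factor of `scale`: D = log(copies) / log(scale)."""
    return math.log(copies) / math.log(scale)


# Familiar shapes give whole numbers: halving a line gives 2 pieces,
# halving a square's side gives 4 pieces, halving a cube's side gives 8.
line = similarity_dimension(2, 2)
square = similarity_dimension(4, 2)
cube = similarity_dimension(8, 2)

# Assumed structure: a Sierpinski triangle (3 half-size copies).
sierpinski = similarity_dimension(3, 2)
print(round(sierpinski, 2))  # 1.58
```

So a shape like this sits between the "wire" and "sheet" cases from the quoted article: more than a line, less than a filled surface.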

Classical bits, the units of computer operation, are based on electric currents: electrons running means 1, no electrons running means 0. With a combination of 0’s and 1’s, one can build all the devices that you use in your daily life, from cellphones to computers. However, while running, these electrons meet defects and impurities in the material, and lose energy. This is what happens when your device gets warm: the energy is converted into heat, and so your battery is drained faster.

I have been off Facebook and didn’t want to return, but I came across a lighthearted post in which technology “safeguarded a human life.” We are experiencing a lot of censorship where I live because young people have used technology in interesting ways to take on the government.

A Samsung Galaxy A10s phone has emerged as the unlikely hero for taking a bullet for a protestor and earning the title of ‘lifesaver.’

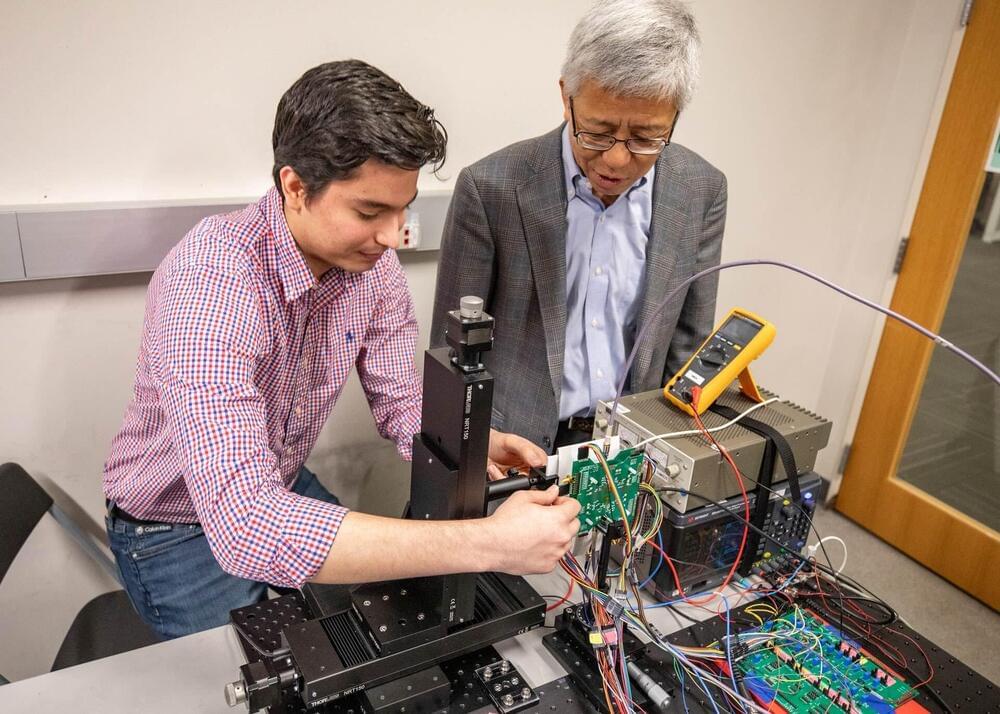

Researchers from The University of Texas at Dallas and Seoul National University have developed an imager chip inspired by Superman’s X-ray vision that could be used in mobile devices to make it possible to detect objects inside packages or behind walls.

Chip-enabled cellphones might be used to find studs, wooden beams or wiring behind walls, cracks in pipes, or outlines of contents in envelopes and packages. The technology also could have medical applications.

The researchers first demonstrated the imaging technology in a 2022 study. Their latest paper, published in the March print edition of IEEE Transactions on Terahertz Science and Technology, shows how researchers solved one of their biggest challenges: making the technology small enough for handheld mobile devices while improving image quality.

Receiver blocks interference early:

MIT researchers also state that the interference-blocking components can be switched on and off as needed to conserve energy.

Specifically, the release says, carriers would simply have to provide unlocking services 60 days after activation. A welcome standard, but it may run afoul of today’s phone and wireless markets.

For instance, although the dreaded two-year contract is no longer forced on most consumers, many still opt for them to lock in the price and get other benefits. And perhaps more to the point, the phones themselves are often paid for in what amount to installment plans: You get a phone for “free” and then pay it off over the next few years.

The NPRM is the stage of FCC rulemaking where it has a draft rule but has not yet solicited public feedback. On July 18, the agency will publish the full document and open up commentary on the above issues. And you can be sure there will be some squawking from mobile providers!

For more than 15 years, a group of scientists in Texas have been hard at work creating smaller and smaller devices to “see” through barriers using medium-frequency electromagnetic waves — and now, they seem closer than ever to cracking the code.

In an interview with Futurism, electrical engineering professor Kenneth O of the University of Texas explained that the tiny new imager chip he made with the help of his research team, which can detect the outlines of items through barriers like cardboard, was the result of repeated advances and breakthroughs in microprocessor technology over the better part of the last two decades.

“This is actually similar technology as what they’re using at the airport for security inspection,” O told us.

Geospatial data has undergone significant transformations due to the internet and smartphones, revolutionizing accessibility and real-time updates.

A collaborative international team reviewed this evolution, highlighting growth opportunities and challenges.

‘Seismic Shift’ to Crowdsourced Scientific Data Presents Promising Opportunities.