Plus: how a new California law could change how you browse the internet

Rising cannabis use and falling smoking rates suggest that legalization may be prompting some people to substitute cannabis for cigarettes.

How does cannabis use influence cigarette smoking? A recent study published in Addictive Behaviors set out to address this question, with a team of researchers investigating how recreational cannabis legalization may have shifted patterns of substance use, specifically cigarette use. The study could help researchers better understand the social impacts of recreational cannabis legalization and the steps that can be taken to mitigate its negative effects.

For the study, the researchers analyzed data from the National Survey on Drug Use and Health on past-30-day cannabis-only use, cigarette-only use, and co-use among adults aged 18 and older across three periods: 2015–2019, 2020, and 2021–2023. The goal was to examine the relationship between cannabis legalization and cigarette use, including co-use. The researchers found that cannabis-only use rose from 3.9% to 6.5% over 2015–2019, reached 7.1% in 2020, and rose from 7.9% to 10.6% over 2021–2023. Cigarette-only use fell overall during the same periods, from 15% to 12% in 2015–2019, to 10.3% in 2020, and from 10.8% to 8.8% in 2021–2023, despite a small uptick between 2020 and 2021. Co-use, by contrast, remained relatively stable across all three periods.
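To put the reported figures in perspective, the short Python sketch below computes the percentage-point and relative change for each group, using only the first (2015–2019) and last (2021–2023) values quoted above. It is simple arithmetic on the published rates, not a reanalysis of the survey data, and on its own it does not demonstrate substitution.

```python
# Endpoint prevalences (percent of adults, past-30-day use) quoted in the article:
# the first 2015-2019 value and the last 2021-2023 value for each group.
REPORTED = {
    "cannabis-only": (3.9, 10.6),
    "cigarette-only": (15.0, 8.8),
}


def changes(start: float, end: float) -> tuple[float, float]:
    """Return (percentage-point change, relative change in percent)."""
    pp = end - start
    return pp, 100 * pp / start


for group, (start, end) in REPORTED.items():
    pp, rel = changes(start, end)
    print(f"{group}: {start}% -> {end}% ({pp:+.1f} pp, {rel:+.0f}% relative)")
```

Running it shows why the two measures should be kept apart: cannabis-only use gained about 6.7 percentage points (+172% relative), while cigarette-only use lost about 6.2 points (-41% relative). The absolute shifts are similar in size, but the relative changes are very different.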

“The rising cannabis-only use across groups parallels the expanding state-level recreational cannabis legalization, increasing accessibility and normalization,” the study notes. “Conversely, continued declines in cigarette-only use align with decades of tobacco control efforts and evolving norms surrounding smoking. The relatively stable co-use trends may reflect substitution dynamics whereby some individuals replace cigarettes with cannabis, preventing co-use from rising in tandem with cannabis-only use.”

Join Adam Perella and me at the Schellman AI Summit on November 18, 2025, at Schellman HQ in Tampa, Florida.

Your AI doesn’t just use data; it consumes it like a hungry teenager at a buffet.

This creates a problem when the same AI system, operating across multiple regulatory jurisdictions, is subject to conflicting legal requirements. Imagine your organization trains its AI in California, deploys it in Dublin, and serves users globally.

Your organization then operates in multiple jurisdictions, each with its own regulatory requirements.

Welcome to the fragmentation of cross-border AI governance, where more than 1,000 U.S. state AI bills introduced in 2025 meet the EU’s comprehensive regulatory framework, creating headaches for businesses operating internationally.

As compliance and attestation leaders, we’re well-positioned to offer advice on how to face this challenge as you establish your AI governance roadmap.

Cross-border AI accountability isn’t going away; it’s only accelerating. The companies that thrive will be those that treat regulatory complexity as a competitive advantage, not a compliance burden.

A recent breakthrough from Mayo Clinic researchers offers new hope. Using the world’s largest imaging dataset, Mayo Clinic’s team has developed a cutting-edge AI model that can detect pancreatic cancer on standard CT scans early enough that surgery is still an option. The advance represents a leap forward in the fight against pancreatic cancer, with the potential to save lives. Learn more about this innovation in early cancer detection. Featured experts include Ajit Goenka, M.D., radiologist and professor of radiology at Mayo Clinic’s Comprehensive Cancer Center, and Suresh Chari, M.D., professor in the Department of Gastroenterology, Hepatology, and Nutrition in the Division of Internal Medicine at MD Anderson Cancer Center. Subscribe to Tomorrow’s Cure wherever you get your podcasts. Visit tomorrowscure.com for more information.

This podcast is for informational purposes only and should not be relied upon as professional, medical or legal advice. Always consult with a qualified health care provider for any medical advice. The appearance of any guest does not imply an endorsement of them, their employer, or any entity they represent. The views and opinions are those of the speakers and do not necessarily reflect the views of Mayo Clinic. Reference to any product, service or entity does not constitute an endorsement or recommendation by Mayo Clinic.

From Mayo Clinic to your inbox, sign up for free: https://mayocl.in/3e71zfi.

Visit Mayo Clinic: https://www.mayoclinic.org/appointmen…

Like Mayo Clinic on Facebook: / mayoclinic

Follow Mayo Clinic on Instagram: / mayoclinic

Follow Mayo Clinic on X, formerly Twitter: https://twitter.com/MayoClinic

Follow Mayo Clinic on Threads: https://www.threads.net/@mayoclinic


At 47 years of age, Emi Bossio was feeling good about where she was. She had a successful law practice, two growing children and good health. Then she developed a nagging cough. The diagnosis to come would take her breath away.

“I never smoked, never. I ate nutritiously and stayed fit. I thought to myself, I can’t have lung cancer,” says Bossio. “It was super shocking. A cataclysmic moment. There are no words to describe it.”

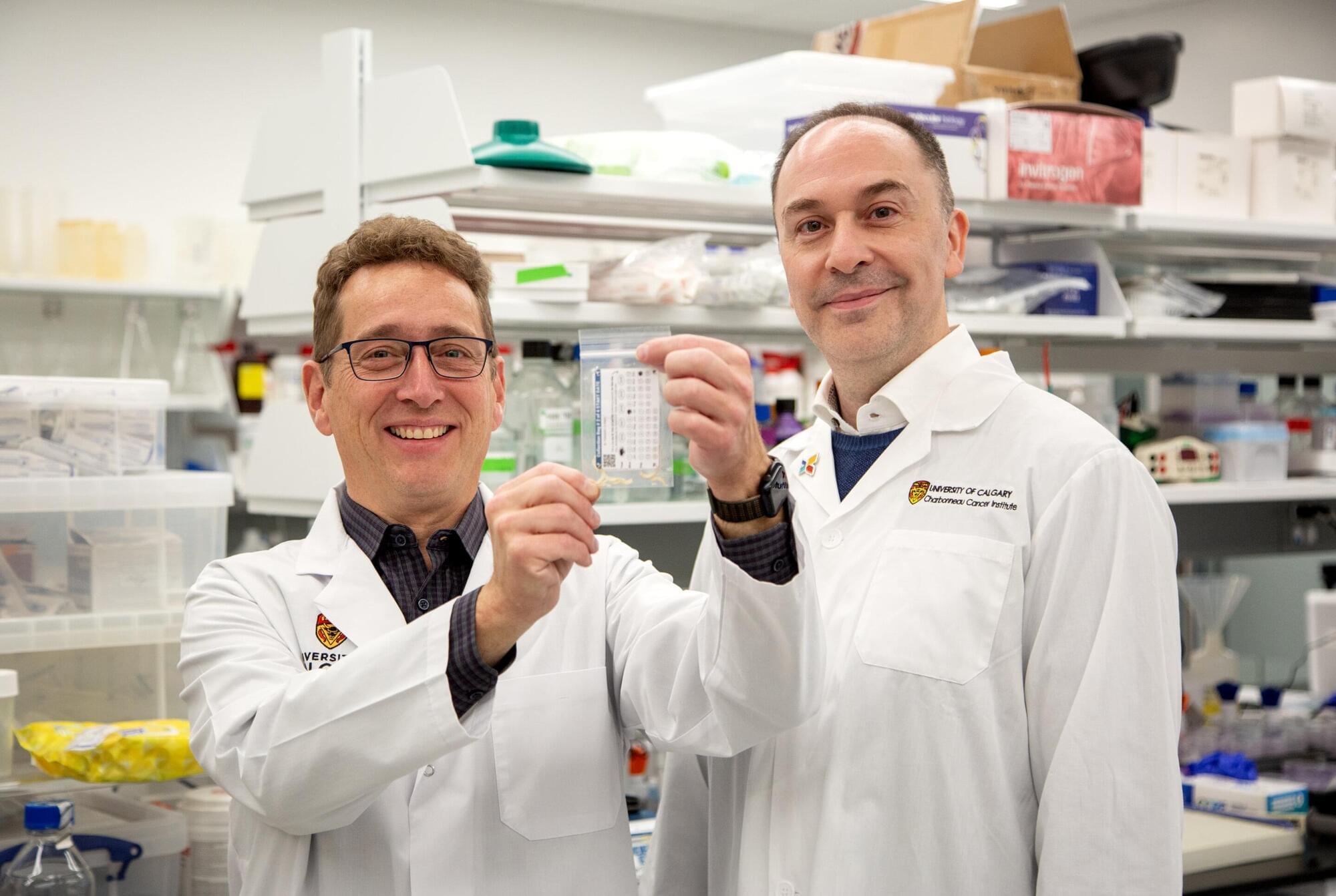

Bossio had to give up her law practice to focus on treatment and healing. As part of that journey, she has taken on a new role as an advocate raising awareness of lung cancer. She still has no idea what caused her lung cancer. Trying to answer that question is how Bossio became interested in the research Aaron Goodarzi, Ph.D., is doing at the University of Calgary.

Ladislaus Bortkiewicz was born in Saint Petersburg, Imperial Russia, to ethnically Polish parents: Józef Bortkiewicz and Helena Bortkiewicz (née Rokicka). His father was a Polish nobleman who served in the Russian Imperial Army.

Bortkiewicz graduated from the Law Faculty in 1890. In 1898 he published a book about the Poisson distribution, titled The Law of Small Numbers.[1] In this book he first noted that events with low frequency in a large population follow a Poisson distribution even when the probabilities of the individual events vary. It was this book that made the Prussian horse-kick data famous. The data recorded the number of soldiers killed by horse kicks each year in each of 14 cavalry corps over a 20-year period, and Bortkiewicz showed that these counts followed a Poisson distribution. The book also examined data on child suicides. Some[2] have suggested that the Poisson distribution should have been named the “Bortkiewicz distribution.”
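Bortkiewicz’s observation that rare events follow a Poisson distribution even when the individual probabilities differ can be checked numerically. The Python sketch below is an illustration, not Bortkiewicz’s own method. It computes the exact distribution of the number of events among 1,000 independent trials with randomly generated, hypothetical probabilities, then measures how far that distribution is from a Poisson distribution with the same mean. The comparison bound (twice the sum of the squared probabilities) comes from Le Cam’s theorem, a later result. The function names and the simulated probabilities are assumptions made for illustration.

```python
import math
import random


def poisson_pmf(k: int, lam: float) -> float:
    """P(X = k) for X ~ Poisson(lam), computed in log space to avoid overflow."""
    return math.exp(k * math.log(lam) - lam - math.lgamma(k + 1))


def poisson_binomial_pmf(probs: list[float]) -> list[float]:
    """Exact distribution of the number of events among independent trials
    whose event probabilities may all differ (dynamic programming)."""
    dist = [1.0]  # before any trial: zero events with certainty
    for p in probs:
        nxt = [0.0] * (len(dist) + 1)
        for k, mass in enumerate(dist):
            nxt[k] += mass * (1 - p)  # no event in this trial
            nxt[k + 1] += mass * p    # one event in this trial
        dist = nxt
    return dist


def l1_distance_to_poisson(probs: list[float]) -> float:
    """Sum over all k of |P(S = k) - Poisson(k; lam)|, with lam = sum(probs)."""
    lam = sum(probs)
    exact = poisson_binomial_pmf(probs)
    diff = sum(abs(m - poisson_pmf(k, lam)) for k, m in enumerate(exact))
    # Poisson mass above len(probs) events, where the exact distribution is zero.
    covered = sum(poisson_pmf(k, lam) for k in range(len(exact)))
    return diff + max(0.0, 1.0 - covered)


random.seed(0)
# Hypothetical population: 1,000 units, each with its own small event probability.
probs = [random.uniform(0.0, 0.002) for _ in range(1000)]
print(f"expected events (lambda) = {sum(probs):.3f}")
print(f"L1 distance to Poisson   = {l1_distance_to_poisson(probs):.2e}")
print(f"Le Cam bound 2*sum(p^2)  = {2 * sum(p * p for p in probs):.2e}")
```

The smaller and more numerous the individual probabilities, the smaller the bound. This is why sparse counts spread over many units, such as deaths from horse kicks across cavalry corps and years, are natural candidates for a Poisson model.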

In political economy, Bortkiewicz is important for his analysis of Karl Marx’s reproduction schema in the last two volumes of Capital. Bortkiewicz identified a transformation problem in Marx’s work. Drawing on Dmitriev’s analysis of Ricardo, Bortkiewicz proved that the data Marx used were sufficient to calculate the general rate of profit and relative prices. Marx’s own transformation procedure was incorrect because it calculated prices and the profit rate sequentially rather than simultaneously. Bortkiewicz showed, however, that the correct results can be obtained within the Marxian framework: using Marx’s variables, constant capital and variable capital, one can derive the profit rate and relative prices in a three-sector model. This “correction of the Marxian system” was Bortkiewicz’s great contribution to classical and Marxian economics, but it went completely unnoticed until Paul Sweezy’s 1942 book “The Theory of Capitalist Development.”
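The simultaneous solution described above can be sketched in Python. This follows a common textbook statement of Bortkiewicz’s three-department system: department I produces means of production, department II wage goods, and department III luxury goods. The profit rate r and the price-value ratios x, y, z must satisfy one cost-plus-profit equation per department. The symbols, the function name, the normalization z = 1, and the input numbers are assumptions made for illustration, not taken from the text.

```python
import math


def bortkiewicz_prices(c, v, s):
    """Solve a three-department price system for the profit rate r and the
    price-value ratios x (means of production), y (wage goods), z (luxuries).

    Assumed system, with sigma = 1 + r and department totals a_i = c_i + v_i + s_i:
        sigma * (c[0]*x + v[0]*y) = a[0] * x
        sigma * (c[1]*x + v[1]*y) = a[1] * y
        sigma * (c[2]*x + v[2]*y) = a[2] * z
    Normalization (an assumption of this sketch): z = 1.
    """
    a = [c[i] + v[i] + s[i] for i in range(3)]
    # Departments I and II alone fix r and the ratio y/x. Dividing each row by
    # its total value gives a 2x2 coefficient matrix M with M @ (x, y) = (x, y) / sigma,
    # so 1/sigma is the larger (Perron) eigenvalue of M.
    m00, m01 = c[0] / a[0], v[0] / a[0]
    m10, m11 = c[1] / a[1], v[1] / a[1]
    trace = m00 + m11
    det = m00 * m11 - m01 * m10
    lam = (trace + math.sqrt(trace * trace - 4 * det)) / 2  # larger root
    sigma = 1 / lam
    # Department I's equation rearranged for y/x.
    y_over_x = (a[0] - sigma * c[0]) / (sigma * v[0])
    # Department III's equation with z = 1 fixes the absolute level of x.
    x = a[2] / (sigma * (c[2] + v[2] * y_over_x))
    y = y_over_x * x
    return sigma - 1, x, y, 1.0


# Illustrative value magnitudes for departments I, II, III.
r, x, y, z = bortkiewicz_prices(c=[225, 100, 50], v=[90, 120, 90], s=[60, 80, 60])
print(f"r = {r:.2%}, x = {x:.4f}, y = {y:.4f}, z = {z}")
```

Department III’s inputs appear only in its own equation, so r is determined entirely by departments I and II. Department III only pins down the price level once a normalization is chosen. With the illustrative inputs, the sketch yields r = 25%, x = 1.28, and y ≈ 1.0667.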

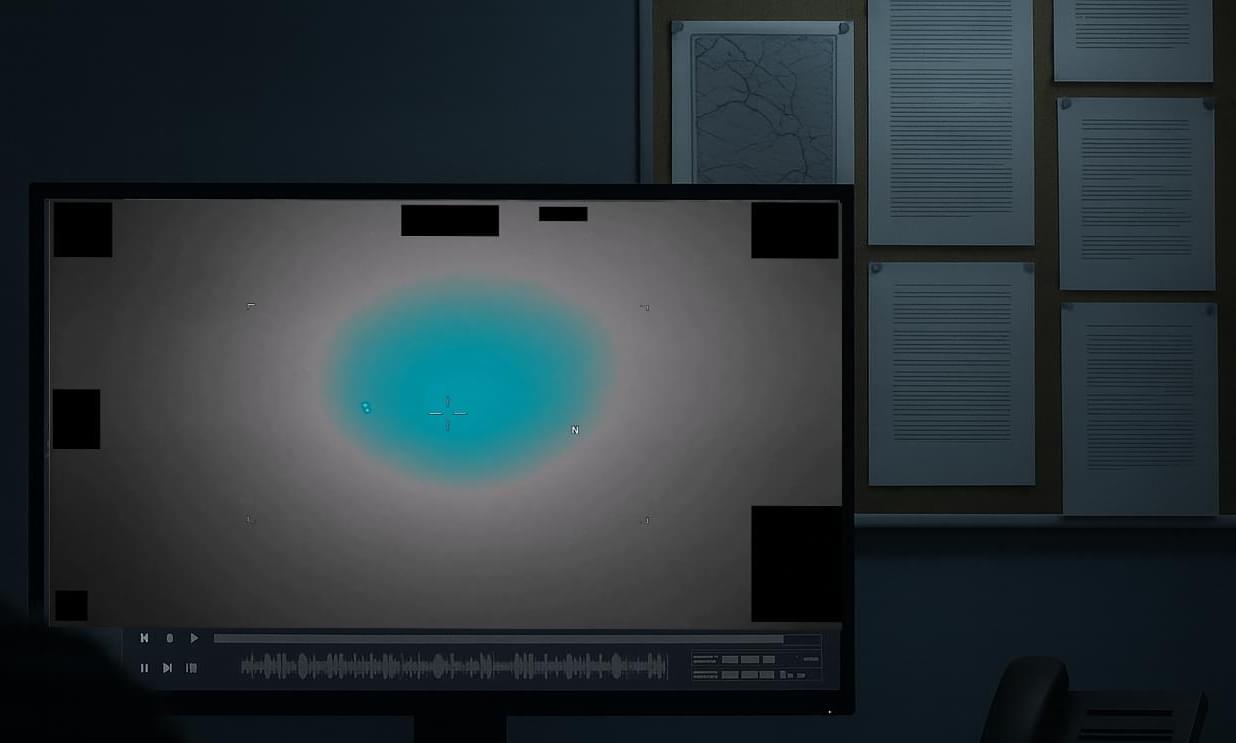

The Department of Defense (DoD) has denied a Freedom of Information Act (FOIA) request seeking records connected to the review, redaction, and release of an unidentified anomalous phenomena (UAP) video published by the All-domain Anomaly Resolution Office (AARO) earlier this year.

The request, filed May 19, 2025, sought internal communications, review logs, classification guidance, legal opinions, and technical documentation tied to the public posting of the video titled “Middle East 2024.” The video, which shows more than six minutes of infrared footage from a U.S. military platform, was released in May 2025, and the case it depicts remains unresolved by AARO.
