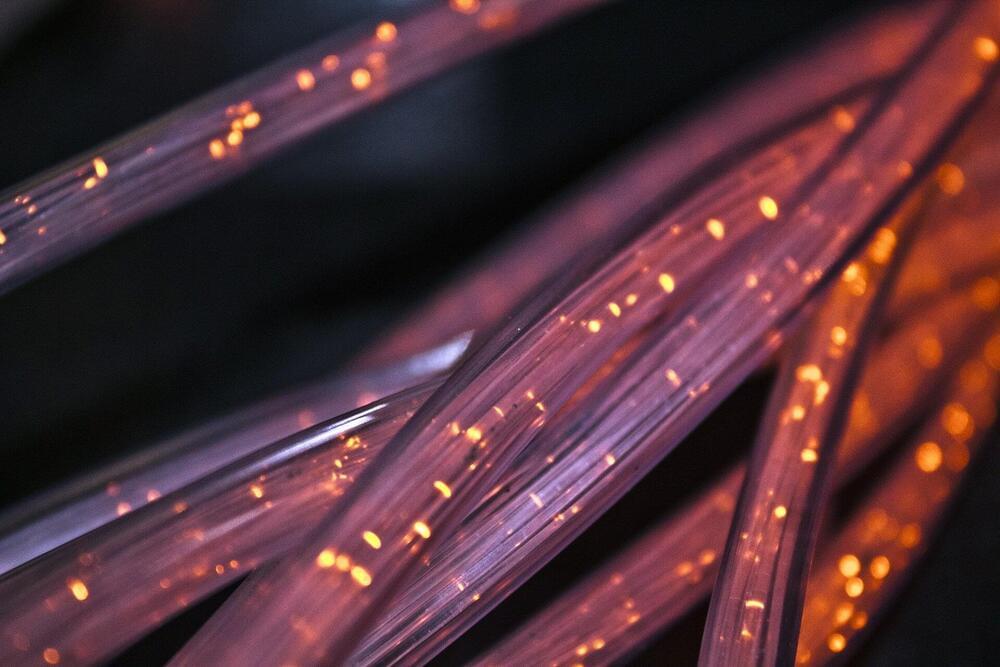

Northwestern University engineers have successfully demonstrated quantum teleportation over fiber optic cables actively carrying Internet traffic, marking a significant step toward practical quantum communication networks that could use existing infrastructure.

Published in Optica

“This is incredibly exciting because nobody thought it was possible,” said Northwestern’s Prem Kumar, who led the study. “Our work shows a path towards next-generation quantum and classical networks sharing a unified fiber optic infrastructure. Basically, it opens the door to pushing quantum communications to the next level.”