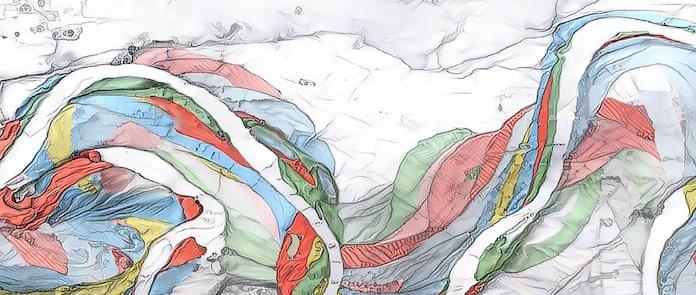

Koo and his team applied CREME to Enformer, another AI-powered genome analysis tool built on a deep neural network (DNN) that predicts gene expression from DNA sequence. They wanted to understand how Enformer arrives at its predictions about the genome. Koo says questions like that are central to his work.

“We have these big, powerful models,” Koo said. “They’re quite compelling at taking DNA sequences and predicting gene expression. But we don’t really have any good ways of trying to understand what these models are learning. Presumably, they’re making accurate predictions because they’ve learned a lot of the rules about gene regulation, but we don’t actually know what their predictions are based off of.”

With CREME, Koo’s team uncovered a set of gene-regulatory rules that Enformer had learned from genomic data. That insight may one day prove invaluable for drug discovery. The investigators wrote, “CREME provides a powerful toolkit for translating the predictions of genomic DNNs into mechanistic insights of gene regulation … Applying CREME to Enformer, a state-of-the-art DNN, we identify cis-regulatory elements that enhance or silence gene expression and characterize their complex interactions.” Koo added, “Understanding the rules of gene regulation gives you more options for tuning gene expression levels in precise and predictable ways.”