The famed Navier-Stokes equations can lead to cases where more than one result is possible, but only in an extremely narrow set of situations.

Summary

The human brain has the remarkable ability to learn patterns from small amounts of data and then recognize novel instances of those patterns despite distortion and noise. Although machine learning algorithms have been loosely informed by the brain since the 1940s, they do not yet rival human performance.

Algorithm set for deployment in Japan could identify giant temblors faster and more reliably.

Two minutes after the world’s biggest tectonic plate shuddered off the coast of Japan, the country’s meteorological agency issued its final warning to about 50 million residents: A magnitude 8.1 earthquake had generated a tsunami that was headed for shore. But it wasn’t until hours after the waves arrived that experts gauged the true size of the 11 March 2011 Tohoku quake. Ultimately, it rang in at a magnitude 9—releasing more than 22 times the energy experts predicted and leaving at least 18,000 dead, some in areas that never received the alert. Now, scientists have found a way to get more accurate size estimates faster, by using computer algorithms to identify the wake from gravitational waves that shoot from the fault at the speed of light.
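The "more than 22 times" figure follows from how earthquake energy scales with magnitude. A minimal sketch, assuming the Gutenberg-Richter energy relation log10(E) = 1.5M + 4.8 (E in joules) as a rough rule of thumb; `energy_ratio` is a hypothetical helper name, and the additive constant cancels in the ratio:

```python
def energy_ratio(m_large: float, m_small: float) -> float:
    """How many times more seismic energy a magnitude m_large quake radiates than m_small.

    Assumes the Gutenberg-Richter relation log10(E) = 1.5 * M + 4.8 (E in joules);
    the constant 4.8 cancels when taking the ratio.
    """
    return 10 ** (1.5 * (m_large - m_small))


if __name__ == "__main__":
    # Initial Tohoku estimate (8.1) versus the final magnitude (9.0) from the text.
    print(f"{energy_ratio(9.0, 8.1):.1f}")  # about 22.4
```

Because each whole magnitude step multiplies the energy by 10^1.5 (roughly 32), even the 0.9-unit underestimate in 2011 hid a more than twentyfold difference in released energy.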

“This is a completely new [way to recognize] large-magnitude earthquakes,” says Richard Allen, a seismologist at the University of California, Berkeley, who was not involved in the study. “If we were to implement this algorithm, we’d have that much more confidence that this is a really big earthquake, and we could push that alert out over a much larger area sooner.”

Scientists typically detect earthquakes by monitoring ground vibrations, or seismic waves, with devices called seismometers. The amount of advance warning they can provide depends on the distance between the earthquake and the seismometers and on the speed of the seismic waves, which travel at less than 6 kilometers per second. Networks in Japan, Mexico, and California provide seconds or even minutes of advance warning, and the approach works well for relatively small temblors. But beyond magnitude 7, the earthquake waves can saturate seismometers. This makes the most destructive earthquakes, like Japan’s Tohoku quake, the most challenging to identify, Allen says.
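The gap between seismic-wave speed and light speed can be put in numbers with simple distance-over-speed arithmetic. This is a sketch under loose assumptions: it treats 6 km/s as the fastest seismic speed (so the seismic time is a lower bound), ignores wave paths, rupture duration, and processing delays, and uses hypothetical station distances:

```python
SEISMIC_SPEED_KM_S = 6.0        # upper bound on seismic-wave speed quoted in the text
LIGHT_SPEED_KM_S = 299_792.458  # speed of light in vacuum


def travel_time_s(distance_km: float, speed_km_s: float) -> float:
    """Seconds for a signal to cover distance_km at a constant speed_km_s."""
    return distance_km / speed_km_s


if __name__ == "__main__":
    for d in (100, 300):  # hypothetical station distances in km
        seismic = travel_time_s(d, SEISMIC_SPEED_KM_S)
        light = travel_time_s(d, LIGHT_SPEED_KM_S)
        print(f"{d} km: seismic >= {seismic:.1f} s, light-speed signal {light * 1000:.2f} ms")
```

At a few hundred kilometers the seismic waves need tens of seconds, while a signal moving at the speed of light arrives in about a millisecond.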

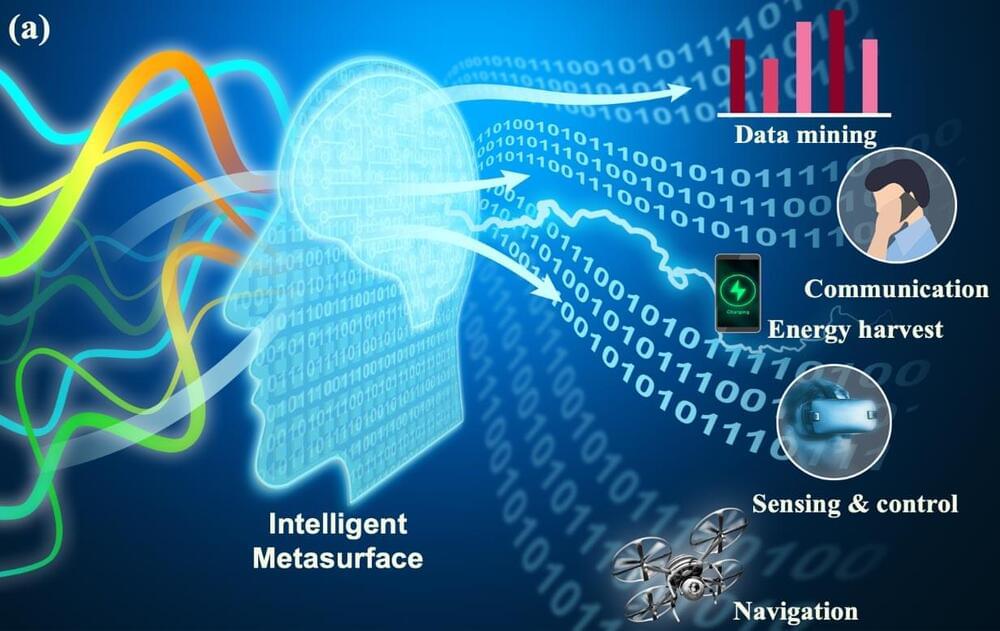

The manipulation of electromagnetic waves and information has become an important part of our everyday lives. Intelligent metasurfaces have emerged as smart platforms for automating the control of wave-information-matter interactions without manual intervention. They evolved from engineered composite materials, including metamaterials and metasurfaces, and significant progress has been made in developing metamaterials and metasurfaces with a wide variety of forms and properties.

In a paper published in the journal eLight on May 6, 2022, Professor Tie Jun Cui of Southeast University and Professor Lianlin Li of Peking University led a research team in reviewing intelligent metasurfaces. The review, “Intelligent metasurfaces: Control, Communication and Computing,” traces the development of intelligent metasurfaces with an eye to the future.

This field has refreshed human insights into many fundamental laws. It has also enabled many novel devices and systems for cloaking, tunneling, and holography. Conventional structure-alone, or passive, metasurfaces have moved toward intelligent metasurfaces by integrating algorithms with nonlinear materials (or active devices).

The latest “machine scientist” algorithms can take in data on dark matter, dividing cells, turbulence, and other situations too complicated for humans to understand and provide an equation capturing the essence of what’s going on.

Despite rediscovering Kepler’s third law and other textbook classics, BACON remained something of a curiosity in an era of limited computing power. Researchers still had to analyze most data sets by hand, or eventually with Excel-like software that found the best fit for a simple data set when given a specific class of equation. The notion that an algorithm could find the correct model for describing any data set lay dormant until 2009, when Lipson and Michael Schmidt, roboticists then at Cornell University, developed an algorithm called Eureqa.
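Fitting "a specific class of equation" is ordinary least-squares curve fitting, not BACON's heuristic search. A minimal sketch, assuming a power law T = c·a^k is chosen in advance and fitted as a straight line in log-log space; the planetary values are approximate standard figures and `fit_power_law` is a hypothetical helper:

```python
import math

# Approximate semi-major axis (AU) and orbital period (years)
# for the six planets known in Kepler's time.
PLANETS = {
    "Mercury": (0.387, 0.241),
    "Venus": (0.723, 0.615),
    "Earth": (1.000, 1.000),
    "Mars": (1.524, 1.881),
    "Jupiter": (5.203, 11.862),
    "Saturn": (9.537, 29.457),
}


def fit_power_law(xs, ys):
    """Least-squares fit of y = c * x**k via a straight-line fit in log-log space.

    The equation class (a power law) is fixed in advance; only k and c are fitted.
    """
    lx = [math.log(x) for x in xs]
    ly = [math.log(y) for y in ys]
    n = len(lx)
    mx, my = sum(lx) / n, sum(ly) / n
    k = sum((a - mx) * (b - my) for a, b in zip(lx, ly)) / sum((a - mx) ** 2 for a in lx)
    c = math.exp(my - k * mx)
    return k, c


if __name__ == "__main__":
    axes = [a for a, _ in PLANETS.values()]
    periods = [t for _, t in PLANETS.values()]
    k, c = fit_power_law(axes, periods)
    print(f"T = {c:.3f} * a^{k:.3f}")  # exponent near 1.5, i.e. T^2 proportional to a^3
```

The fit recovers Kepler's third law only because the right equation class was supplied; choosing that class automatically is the harder problem the following paragraphs describe.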

Their main goal had been to build a machine that could boil down expansive data sets with column after column of variables to an equation involving the few variables that actually matter. “The equation might end up having four variables, but you don’t know in advance which ones,” Lipson said. “You throw at it everything and the kitchen sink. Maybe the weather is important. Maybe the number of dentists per square mile is important.”

One persistent hurdle to wrangling numerous variables has been finding an efficient way to guess new equations over and over. Researchers say you also need the flexibility to try out (and recover from) potential dead ends. When the algorithm can jump from a line to a parabola, or add a sinusoidal ripple, its ability to hit as many data points as possible might get worse before it gets better. To overcome this and other challenges, in 1992 the computer scientist John Koza proposed “genetic programming,” an extension of genetic algorithms that introduces random “mutations” into equations and tests the mutant equations against the data. Over many trials, initially useless features either evolve potent functionality or wither away.
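The mutate-and-test loop described above can be sketched over expression trees, the genetic-programming style of symbolic regression. This is a deliberately tiny illustration, not Koza's system or Eureqa: the primitive set (+, -, *, sin, x, constants), the size cap, and all function names are assumptions. It keeps a single best equation and accepts only mutants that are no worse, so unlike population-based methods it cannot temporarily get worse to escape a dead end:

```python
import math
import random

# Assumed primitive set: three binary operators, sin, the variable x, and constants.
BINARY = {"+": lambda a, b: a + b, "-": lambda a, b: a - b, "*": lambda a, b: a * b}
MAX_SIZE = 40  # cap on tree size to limit "bloat" (an arbitrary choice)


def random_tree(rng, depth):
    """Build a random expression tree out of nested tuples."""
    if depth == 0 or rng.random() < 0.3:
        return "x" if rng.random() < 0.5 else round(rng.uniform(-2.0, 2.0), 2)
    if rng.random() < 0.2:
        return ("sin", random_tree(rng, depth - 1))
    op = rng.choice(sorted(BINARY))
    return (op, random_tree(rng, depth - 1), random_tree(rng, depth - 1))


def evaluate(tree, x):
    """Evaluate an expression tree at a single input value x."""
    if isinstance(tree, tuple):
        if tree[0] == "sin":
            return math.sin(evaluate(tree[1], x))
        return BINARY[tree[0]](evaluate(tree[1], x), evaluate(tree[2], x))
    return x if tree == "x" else tree


def size(tree):
    """Number of nodes in the tree."""
    return 1 + sum(size(c) for c in tree[1:]) if isinstance(tree, tuple) else 1


def mutate(tree, rng):
    """Return a copy of the tree with one randomly chosen subtree replaced."""
    if not isinstance(tree, tuple) or rng.random() < 0.3:
        return random_tree(rng, depth=2)
    children = list(tree[1:])
    i = rng.randrange(len(children))
    children[i] = mutate(children[i], rng)
    return (tree[0], *children)


def squared_error(tree, xs, ys):
    """Sum of squared residuals; numerically broken candidates score infinity."""
    try:
        total = sum((evaluate(tree, x) - y) ** 2 for x, y in zip(xs, ys))
    except (OverflowError, ValueError):
        return math.inf
    return total if math.isfinite(total) else math.inf


def evolve(xs, ys, generations=200, offspring=10, seed=0):
    """Elitist mutate-and-select loop: keep the best tree, accept no-worse mutants."""
    rng = random.Random(seed)
    best = random_tree(rng, depth=3)
    best_err = squared_error(best, xs, ys)
    history = [best_err]
    for _ in range(generations):
        for _ in range(offspring):
            child = mutate(best, rng)
            if size(child) > MAX_SIZE:
                continue  # discard overly large mutants
            child_err = squared_error(child, xs, ys)
            if child_err <= best_err:
                best, best_err = child, child_err
        history.append(best_err)
    return best, best_err, history


if __name__ == "__main__":
    xs = [i / 10 for i in range(-20, 21, 2)]
    ys = [x * x + math.sin(x) for x in xs]  # hypothetical target data
    best, err, _ = evolve(xs, ys)
    print(best, err)
```

Useless subtrees tend to be replaced over many generations, while mutations that lower the error survive; real systems add populations, crossover, and simplification on top of this core loop.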

Would start with scanning and reverse engineering brains of rats, crows, pigs, and chimps, and end with the human brain. Aim for completion by 12/31/2025. Set up teams to run brain scans 24/7/365 if we need to, and partner with every major neuroscience lab in the world.

If artificial intelligence is intended to resemble a brain, with networks of artificial neurons substituting for real cells, then what would happen if you compared the activities in deep learning algorithms to those in a human brain? Last week, researchers from Meta AI announced that they would be partnering with neuroimaging center Neurospin (CEA) and INRIA to try to do just that.

Through this collaboration, they’re planning to analyze human brain activity and deep learning algorithms trained on language or speech tasks in response to the same written or spoken texts. In theory, this could help decode how both human brains and artificial brains find meaning in language.

By comparing scans of human brains while a person is actively reading, speaking, or listening with deep learning algorithms given the same set of words and sentences to decipher, researchers hope to find similarities as well as key structural and behavioral differences between brain biology and artificial networks. The research could help explain why humans process language much more efficiently than machines.
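One standard way to compare brain recordings with network activations is representational similarity analysis (RSA). The source does not say which method the Meta AI, NeuroSpin, and INRIA teams use, so this is only an assumed illustration: for each system, measure how differently it responds to every pair of stimuli, then correlate those two dissimilarity structures. All names and toy data are hypothetical, and Pearson correlation is used at both levels for simplicity (Spearman is also common at the second level):

```python
import math
from itertools import combinations


def pearson(a, b):
    """Pearson correlation of two equal-length sequences (neither may be constant)."""
    n = len(a)
    ma, mb = sum(a) / n, sum(b) / n
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    sa = math.sqrt(sum((x - ma) ** 2 for x in a))
    sb = math.sqrt(sum((y - mb) ** 2 for y in b))
    return cov / (sa * sb)


def rdm(responses):
    """Upper triangle of a representational dissimilarity matrix.

    responses[i] is the activity pattern (voxels, or network units) evoked by
    stimulus i; dissimilarity is 1 - Pearson r between each pair of patterns.
    """
    return [
        1.0 - pearson(responses[i], responses[j])
        for i, j in combinations(range(len(responses)), 2)
    ]


def rsa_score(brain, model):
    """Correlate brain and model dissimilarity structures.

    Both lists must cover the same stimuli in the same order, but the number of
    features per stimulus may differ (e.g. voxels vs. hidden units).
    """
    return pearson(rdm(brain), rdm(model))


if __name__ == "__main__":
    # Made-up responses to four sentences: 4 "voxels" vs. 8 "model units".
    brain = [[1, 2, 3, 4], [2, 1, 0, 3], [5, 5, 1, 0], [0, 1, 4, 2]]
    model = [[0, 1, 3, 5, 2, 2, 1, 0], [3, 1, 0, 2, 1, 0, 2, 4],
             [4, 5, 2, 0, 0, 1, 3, 2], [1, 0, 5, 3, 2, 3, 0, 1]]
    print(f"RSA score: {rsa_score(brain, model):.3f}")
```

Comparing dissimilarity structures rather than raw activity sidesteps the fact that a brain scan and a network layer have different numbers of features with no one-to-one correspondence.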

Quantum machine learning is a field of study that investigates the interaction of concepts from quantum computing with machine learning.

For example, researchers want to know whether quantum computers can reduce the time it takes to train or evaluate a machine learning model. In the other direction, machine learning approaches can be used to discover quantum error-correcting algorithms, estimate the properties of quantum systems, and create novel quantum algorithms.

Interactive tools that allow online media users to navigate, save and customize graphs and charts may help them make better sense of the deluge of data that is available online, according to a team of researchers. These tools may help users identify personally relevant information and check for misinformation, they added.

In a study advancing the concept of “news informatics,” which provides news in the form of data rather than stories, the researchers reported that people found news sites that offered certain interactive tools—such as modality, message and source interactivity tools—to visualize and manipulate data were more engaging than ones without the tools. Modality interactivity includes tools to interact with the content, such as hyperlinks and zoom-ins, while message interactivity focuses on how the users exchange messages with the site. Source interactivity allows users to tailor the information to their individual needs and contribute their own content to the site.

However, more is not always better, according to S. Shyam Sundar, James P. Jimirro Professor of Media Effects in the Donald P. Bellisario College of Communications and co-director of the Media Effects Research Laboratory at Penn State. The user’s experience depended on how these tools were combined and on how involved users were in the topic, he said.

Consciousness defines our existence. It is, in a sense, all we really have, all we really are. The nature of consciousness has been pondered in many ways, in many cultures, for many years. But we still can’t quite fathom it.

Consciousness is, some say, all-encompassing, comprising reality itself, the material world a mere illusion. Others say consciousness is the illusion, without any real sense of phenomenal experience, or conscious control. According to this view we are, as TH Huxley bleakly said, ‘merely helpless spectators, along for the ride’. Then, there are those who see the brain as a computer. Brain functions have historically been compared to contemporary information technologies, from the ancient Greek idea of memory as a ‘seal ring’ in wax, to telegraph switching circuits, holograms and computers. Neuroscientists, philosophers, and artificial intelligence (AI) proponents liken the brain to a complex computer of simple algorithmic neurons, connected by variable strength synapses. These processes may be suitable for non-conscious ‘auto-pilot’ functions, but can’t account for consciousness.

Finally, there are those who take consciousness as fundamental, as connected somehow to the fine-scale structure and physics of the universe. This includes, for example, Roger Penrose’s view that consciousness is linked to the Objective Reduction process (the ‘collapse of the quantum wavefunction’), an activity on the edge between quantum and classical realms. Some see such connections to fundamental physics as spiritual, as a connection to others and to the universe; others see them as proof that consciousness is a fundamental feature of reality, one that developed long before life itself.