Insightful experiments on the subject of this review have been carried out from time to time, but they have had almost no impact on mainstream neuroscience. In the 1920s, Katz, E. [ 1 ] showed that neurons communicate and fire even when the transmission of ions between two neighboring neurons is blocked, indicating a form of communication between neurons that does not rely on physical contact. However, this observation has been largely ignored in the neuroscience field, and the opinion that physical contact between neurons is necessary for communication prevailed. In the 1960s, Hodgkin et al. showed that neuron bursts could be generated even with the filaments in the interior of the neuron dissolved into the cell fluid [ 3 0, 4 ], but they did not take one important question into account: could the time gap between spikes be regulated without filaments? In cognitive processes of the brain, subthreshold communication that modulates the time gap between spikes holds the key to information processing [ 14 ][ 6 ]. The membrane does not need filaments to fire, but a blunt firing is not useful for cognition. The membrane's ability to modulate time has thus far been assigned only to the density of ion channels. This partial explanation has been debated, because a neuron would fail to process a new pattern of spike time gaps until the density had adjusted. The time gaps are a few milliseconds, whereas ion-channel density adjustment requires ~20 minutes [ 25 ]; if a neuron had to wait for the density to adapt to the modified time gaps before editing the gap between two consecutive spikes, the cognitive response would become non-functional. Many such discrepancies have been noted thus far, but no effort has been made to resolve them. In the 1990s, there were many reports that electromagnetic bursts or electric field imbalance in the environment cause firing [ 7 ]. However, those reports were not considered in work on the modeling of neurons.
This is not surprising: improvements to the Hodgkin and Huxley model made in the 1990s were ignored simply because it was too computationally intensive to automate neural networks according to the new, more complex equations, and they remained ignored even after greater computing power became available. Finally, we note here the discovery of the grid-like network of actin and beta-spectrin just below the neuron membrane [ 26 ], which is directly connected to the membrane. This prompts the question: why is it present, bridging the membrane and the filamentary bundles in a neuron?
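
As a rough order-of-magnitude check on the time-scale argument above, one can compare the ~20 minutes quoted for ion-channel density adjustment [ 25 ] with a spike time gap of a few milliseconds. The value $\Delta t_{\mathrm{gap}} \approx 5$ ms used here is an illustrative assumption standing in for "a few milliseconds", not a figure taken from the cited work:

$$
\frac{T_{\mathrm{density}}}{\Delta t_{\mathrm{gap}}} \approx \frac{20 \times 60 \times 10^{3}\ \mathrm{ms}}{5\ \mathrm{ms}} = 2.4 \times 10^{5}
$$

Under this assumption, density adjustment would be slower than the time gap it is supposed to regulate by roughly five orders of magnitude.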

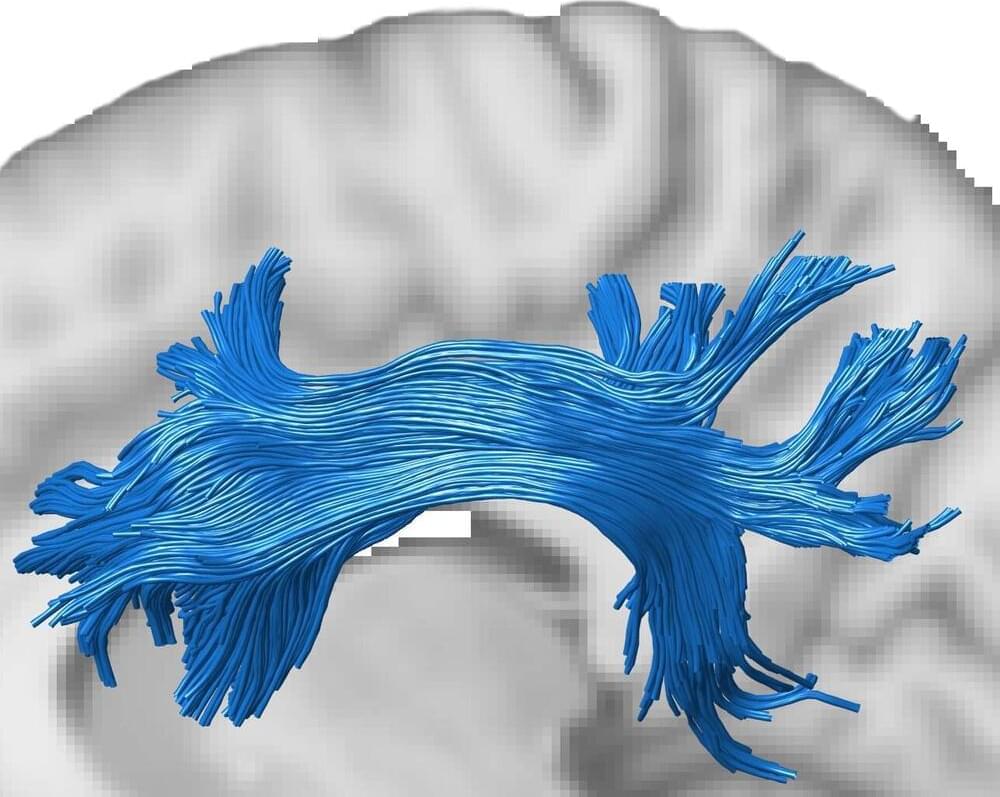

The list is endless, but the foremost concern is probably the simplest question ever asked in neuroscience: what does a nerve spike look like in reality? The answer is out there. It is a 2D ring-shaped electric field perturbation; since the ring has a width, we could also state that a nerve spike is a 3D structure of electric field. In Figure 1a, we compare the perceived shape of a nerve spike with the real one. The difference is not so simple. The majority of the ion channels in that circular strip must be activated simultaneously: within this area, polarization and depolarization of all ion channels should happen together. That is easy to presume, but the mechanism is difficult to explain.