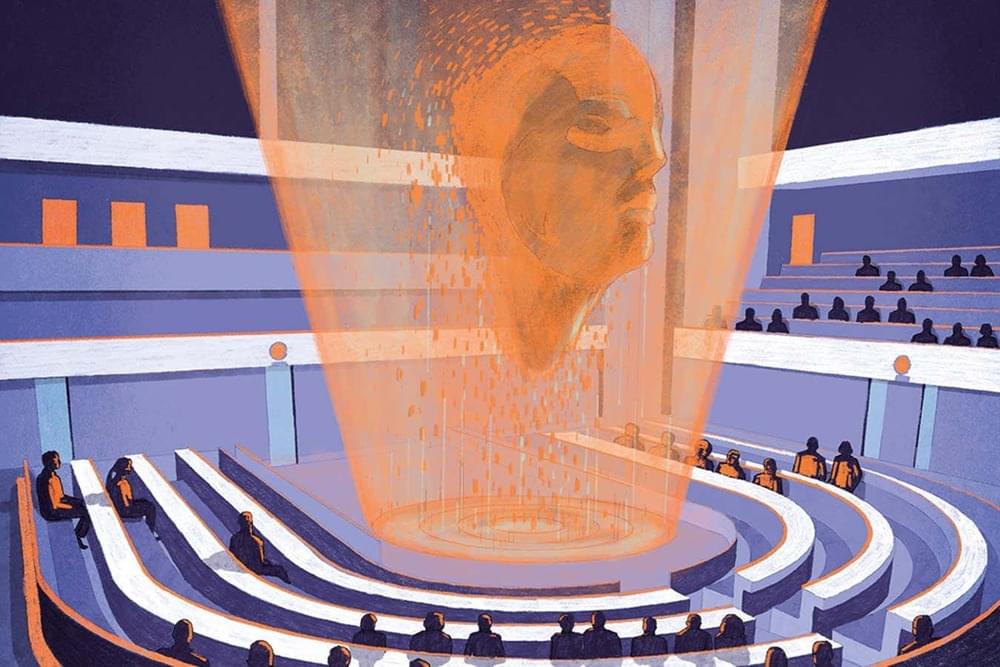

Claims that superintelligent AI poses a threat to humanity are frightening, but only because they distract from the real issues today, argues Mhairi Aitken, an ethics fellow at The Alan Turing Institute.

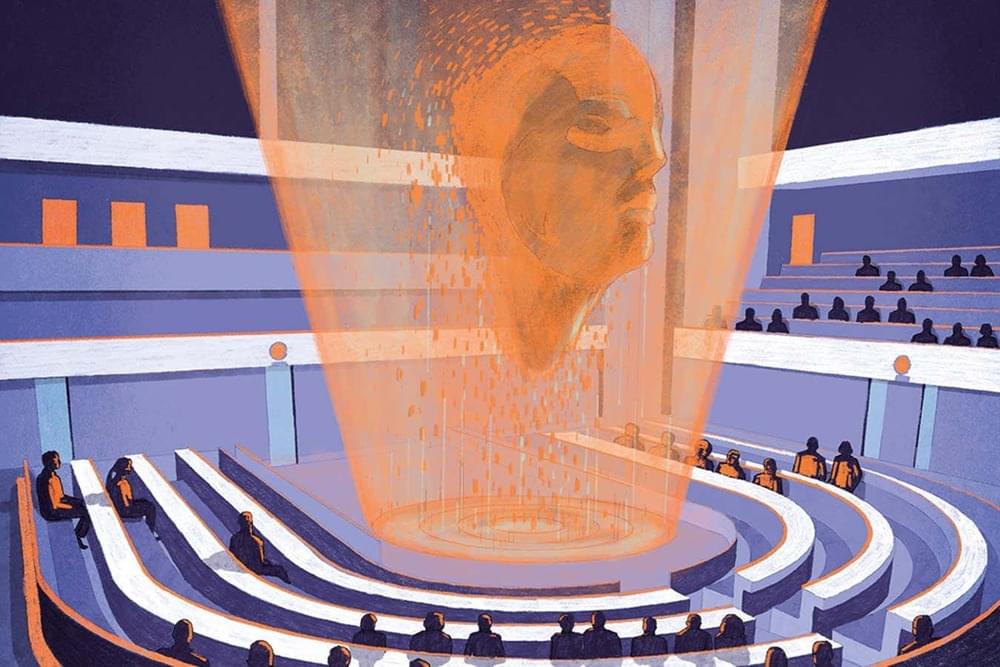

The first “AI incident” almost caused global nuclear war. More recent AI-enabled malfunctions, errors, fraud, and scams include deepfakes used to influence politics, bad health information from chatbots, and self-driving vehicles that are endangering pedestrians.

The worst offenders, according to security company Surfshark, are Tesla, Facebook, and OpenAI, which together account for 24.5% of all known AI incidents so far.

In 1983, an automated system in the Soviet Union thought it detected incoming nuclear missiles from the United States, almost leading to global conflict. That’s the first incident in Surfshark’s report (though it’s debatable whether an automated system from the 1980s counts specifically as artificial intelligence). In the most recent incident, the National Eating Disorders Association (NEDA) was forced to shut down Tessa, its chatbot, after Tessa gave dangerous advice to people seeking help for eating disorders. Other recent incidents include a self-driving Tesla failing to notice a pedestrian and then breaking the law by not yielding to a person in a crosswalk, and a Jefferson Parish resident being wrongfully arrested by Louisiana police after a facial recognition system developed by Clearview AI allegedly mistook him for another individual.

Taiwan was on high alert after two Russian warships entered its waters. Taiwan is used to incursions by China, not Russia, so the episode marks a new flare-up in East Asia. Moscow then doubled down by releasing footage of a military drill in the Sea of Japan. East Asia is becoming a powder keg.

The region already deals with tensions between North Korea, South Korea and Japan. Now the US is trying to send a message to Pyongyang by having its largest nuclear submarine visit South Korea.

Vantage is a ground-breaking news, opinions, and current affairs show from Firstpost. Catering to a global audience, Vantage covers the biggest news stories from a 360-degree perspective, giving viewers a chance to assess the impact of world events through a uniquely Indian lens.

When Max More writes, it’s always worth paying attention.

His recent article, “Existential Risk vs. Existential Opportunity: A balanced approach to AI risk”, is no exception. There’s much in that article that deserves reflection.

Nevertheless, there are three key aspects where I see things differently.

Who’s afraid of the big bad bots? A lot of people, it seems. The number of high-profile names that have now made public pronouncements or signed open letters warning of the catastrophic dangers of artificial intelligence is striking.

Hundreds of scientists, business leaders, and policymakers have spoken up, from deep learning pioneers Geoffrey Hinton and Yoshua Bengio to the CEOs of top AI firms, such as Sam Altman and Demis Hassabis, to the California congressman Ted Lieu and the former president of Estonia Kersti Kaljulaid.

Elon Musk is exploring the possibility of upgrading the human brain to allow humans to compete with sentient AI through ‘a brain-computer interface’ created by his company Neuralink. “I created [Neuralink] specifically to address the AI symbiosis problem, which I think is an existential threat,” says Musk.

While Neuralink has just received FDA approval to start clinical trials in humans (intended to empower people with paralysis), only time will tell whether this technology will succeed in augmenting human intelligence as Musk first intended. But using AI to augment human intelligence raises some interesting ethical questions about which tools are acceptable (a subject to be discussed…).

A study with students suggests that ChatGPT may have an effect on critical thinking, and that early adopters of GPT may be at an advantage.

Have you ever wondered how North Korea can afford its nuclear program and the luxury goods for its leadership when its economy is effectively cut off from the world? Well… let me tell you a little secret.

A video worth watching: an amazingly detailed deep dive into Sam Altman’s interviews and a high-level look at large language models (LLMs).

Missed by much of the media, Sam Altman (and co.) revealed at least 16 surprising things during his world tour. These range from AIs designing AIs to ‘unstoppable open source’, the ‘customisation’ leak (with a new 16k ChatGPT and a ‘steerable’ GPT-4), AI and religion, and possible regrets over having ‘pushed the button’.

I’ll bring in all of this and eleven other insights, together with a new and highly relevant paper just released this week on ‘dual-use’. Whether you are interested in ‘solving climate change by telling AIs to do it’, ‘staring extinction in the face’ or just a deepfake Altman, this video touches on it all, ending with comments from Brockman in Seoul.

I watched over ten hours of interviews to bring you this footage from Jordan, India, Abu Dhabi, the UK, South Korea, Germany, Poland, Israel and more.

Altman in Abu Dhabi, HUB71, ‘change its architecture’: https://youtu.be/RZd870NCukg

The skies above where I reside near New York City were noticeably apocalyptic last week. But to some in Silicon Valley, the fact that we wimpy East Coasters were dealing with a sepia hue and a scent profile that mixed cigar bar, campfire and old-school happy hour was nothing to worry about. After all, it is AI, not climate change, that appears to be top of mind to this cohort, who believe future superintelligence is either going to kill us all, save us all, or almost kill us all if we don’t save ourselves first.

Whether they predict the “existential risks” of runaway AGI that could lead to human “extinction” or foretell an AI-powered utopia, this group seems to have equally strong, fixed opinions (for now, anyway — perhaps they are “loosely held”) that easily tip into biblical prophet territory.