Max Tegmark argues that the downplaying of AI’s existential risk is not accidental and threatens to delay, until it’s too late, the strict regulations needed.

Since the release of ChatGPT in November 2022, artificial intelligence (AI) has both entered the common lexicon and sparked substantial public interest. A blunt but clear illustration of this shift is the sharp rise in worldwide Google searches for ‘AI’ from late 2022 onwards, which reached a record high in February 2024.

You would therefore be forgiven for thinking that AI has only suddenly and recently become a ‘big thing.’ Yet the current hype was preceded by decades of AI research, a field of academic study widely considered to have been founded at the 1956 Dartmouth Summer Research Project on Artificial Intelligence.1 Since then, the field has followed a meandering trajectory of technical successes and ‘AI winters,’ eventually arriving at the large language models (LLMs) that have nudged AI into today’s public consciousness.

Alongside those who aim to develop transformational AI as quickly as possible – the so-called ‘Effective Accelerationism’ movement, or ‘e/acc’ – exists a smaller and often ridiculed group of scientists and philosophers who call attention to the profound dangers inherent in advanced AI – the ‘decels’ and ‘doomers.’2 One of the most prominent of these figures is Nick Bostrom, the Oxford philosopher whose wide-ranging works include studies of the ethics of human enhancement,3 anthropic reasoning,4 the simulation argument,5 and existential risk.6 Five years ago I first read his 2014 book Superintelligence: Paths, Dangers, Strategies,7 which convinced me that the risks a highly capable AI system (a ‘superintelligence’) would pose to humanity ought to be taken very seriously before such a system is brought into existence. These threats differ in kind and scale from those posed by the AIs in existence today, including those developed for use in medicine and healthcare (such as the consequences of training set bias,8 uncertainties over clinical accountability, and problems regarding data privacy, transparency and explainability),9 and are truly existential in nature. In light of recent advances in AI, I revisited the book to reconsider its arguments in the context of today’s digital technology landscape.

The question of why we have not heard from other civilizations is one that the character Ye Wenjie wrestles with in the first episode of Netflix’s 3 Body Problem. Working at a radio observatory, she finally receives a message from a member of an alien civilization, who tells her that they are a pacifist and urges her not to respond, or Earth will be attacked.

The series will ultimately offer a detailed, elegant solution to the Fermi Paradox, but we will have to wait until the second season.

Or you can read the second book in Cixin Liu’s series, The Dark Forest. Without spoilers, the explanation set out in the books runs as follows: “The universe is a dark forest. Every civilization is an armed hunter stalking through the trees like a ghost, gently pushing aside branches that block the path and trying to tread without sound.”

As we go on with our everyday lives, it’s very easy to forget about the sheer size of the universe.

The Earth may seem like a mighty place, but it’s practically a grain within a grain of sand in a universe that is estimated to contain over 200 billion galaxies. That’s something to think about the next time you take life too seriously.

So when we gaze up into the starry night sky, we have every reason to be awestruck—and overwhelmed with curiosity. With the sheer size of the universe and the number of galaxies, stars, and planets in it, surely there are other sentient beings out there. But how come we haven’t heard from them?

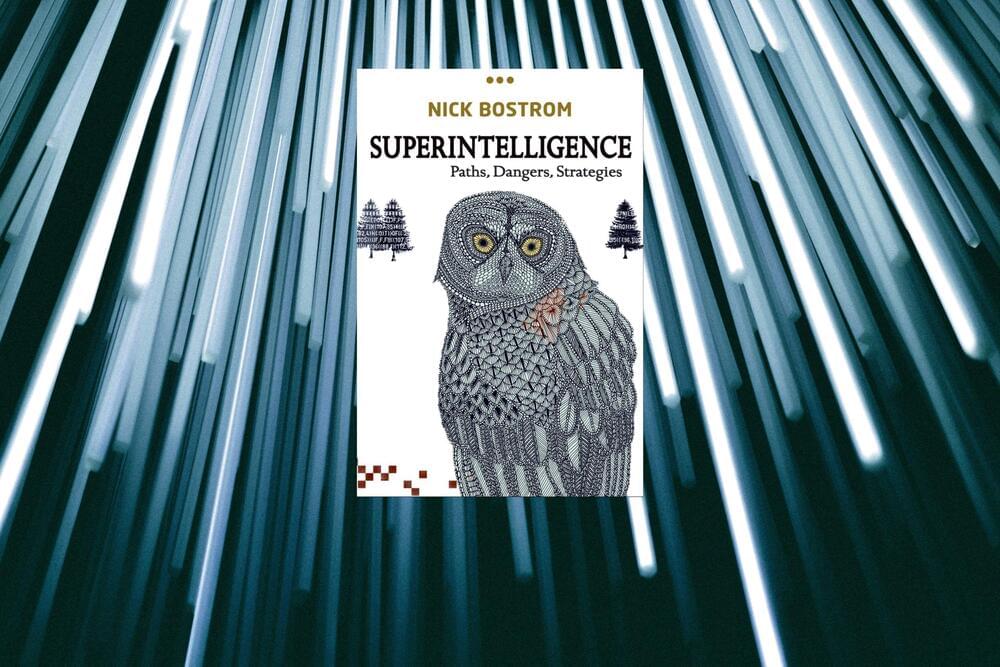

Almost 99% of all human ancestors may have been wiped out around 930,000 years ago, a new paper has claimed.

The new research, published in the journal Science, used DNA from living people to suggest that humans went through a bottleneck, an event where populations shrink drastically. The paper estimates that as few as 1,300 humans were left for a period of around 120,000 years.

While the exact causes aren’t certain, the near-extinction has been blamed on Africa’s climate getting much colder and drier.

The new Atlas robot from Boston Dynamics, Figure AI’s Figure 01 robot (built in partnership with OpenAI), a leaked $100b OpenAI plan, and a new project to avoid our extinction.

Sam Altman, Elon Musk, Geoffrey Hinton, Sora.


A sunspot as large as the one behind the 1859 Carrington Event has prompted a NOAA storm alert: the first time since 2005 that a G4 (Severe) geomagnetic storm alert has been issued. This sunspot is capable of even worse.


We often look out into the galaxy and wonder where all the civilizations are, but could it be that we don’t see them because they have all chosen to exist in fortress star systems, surrounded by despoiled deserts of their own making?


Credits:

The Fermi Paradox: Interdiction

Episode 446; May 9, 2024

Produced & Narrated by: Isaac Arthur

Written by: Isaac Arthur & Mark Warburton

Graphics:

LegionTech Studios

Sergio Botero

Udo Schroeter

YD Visual

Music Courtesy of:

Epidemic Sound http://epidemicsound.com/creator

Stellardrone,