Researchers in the Department of Physics of ETH Zurich have measured how electrons in so-called transition metals are redistributed within a fraction of an optical oscillation cycle. They observed the electrons concentrating around the metal atoms in less than a femtosecond. This regrouping might influence important macroscopic properties of these compounds, such as electrical conductivity, magnetization or optical characteristics. The work therefore suggests a route to controlling these properties on extremely fast time scales.

The distribution of electrons in transition metals, which represent a large part of the periodic table of chemical elements, is responsible for many of the properties that make them useful in applications. The magnetic properties of some members of this group of materials are, for example, exploited for data storage, whereas others exhibit excellent electrical conductivity. Transition metals also play a decisive role in novel materials with more exotic behaviour that results from strong interactions between the electrons. Such materials are promising candidates for a wide range of future applications.

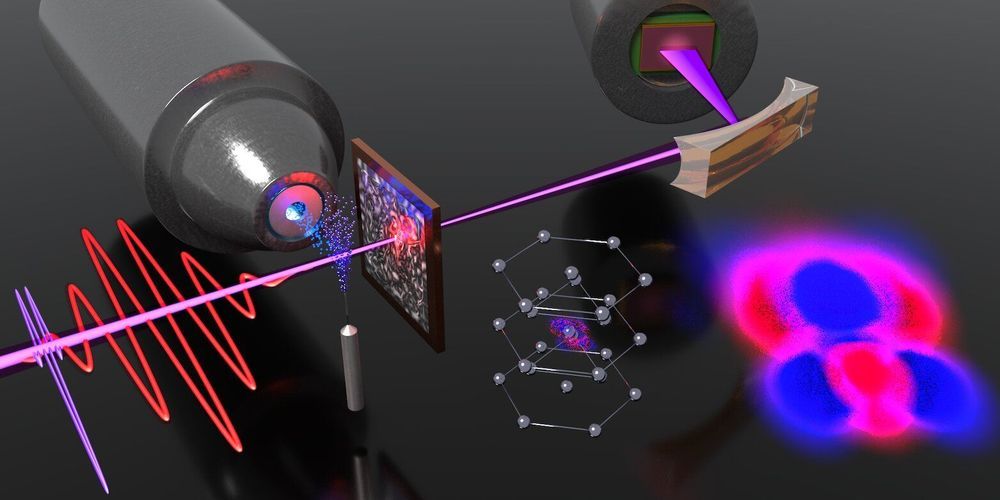

In their experiment, whose results they report in a paper published today in Nature Physics, Mikhail Volkov and colleagues in the Ultrafast Laser Physics group of Prof. Ursula Keller exposed thin foils of the transition metals titanium and zirconium to short laser pulses. They observed the redistribution of the electrons by recording the resulting changes in the optical properties of the metals in the extreme ultraviolet (XUV) domain. To follow the induced changes with sufficient temporal resolution, the measurement employed XUV pulses with a duration of only a few hundred attoseconds (10⁻¹⁸ s). By comparing the experimental results with theoretical models developed by the group of Prof. Angel Rubio at the Max Planck Institute for the Structure and Dynamics of Matter in Hamburg, the researchers established that the change unfolding in less than a femtosecond (10⁻¹⁵ s) is due to a modification of the electron localization in the vicinity of the metal atoms.