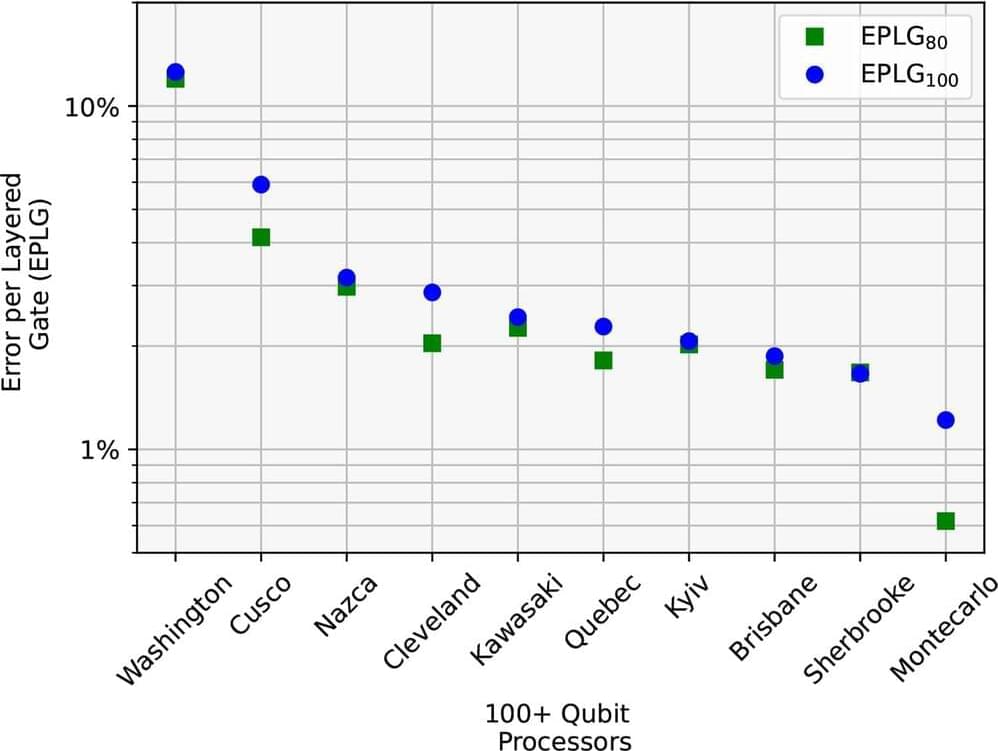

IBM is introducing two new metrics, error per layered gate (EPLG) and CLOPSh, to fully capture the performance of the 100+ qubit processors powering this utility-scale era.

Layer fidelity provides a benchmark that captures the entire processor's ability to run circuits while also revealing information about individual qubits, gates, and crosstalk. It builds on a well-established way to benchmark quantum computers called randomized benchmarking. In randomized benchmarking, we apply a sequence of randomly chosen gates from the Clifford group (which includes many of the basic gates we use, such as X, Y, Z, H, SX, CNOT, ECR, and CZ) to the qubits, then apply a final operation that is, mathematically, the inverse of the entire sequence that precedes it.
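To make the "random Cliffords followed by their inverse" idea concrete, here is a minimal single-qubit sketch in Python with NumPy. It is an illustration under simplifying assumptions, not IBM's implementation: it builds the 24 single-qubit Cliffords (up to global phase) from H and S, draws a random sequence, and appends the adjoint of the net unitary as the recovery gate. The helper names (`single_qubit_cliffords`, `rb_sequence`, `same_up_to_phase`) are hypothetical.

```python
import numpy as np

# Generators of the single-qubit Clifford group: Hadamard (H) and phase (S).
H = np.array([[1, 1], [1, -1]], dtype=complex) / np.sqrt(2)
S = np.array([[1, 0], [0, 1j]], dtype=complex)


def same_up_to_phase(a, b, tol=1e-9):
    """True if unitaries a and b differ only by a global phase."""
    # |Tr(a^dagger b)| equals the dimension exactly when b = e^{i phi} a.
    return abs(abs(np.trace(a.conj().T @ b)) - a.shape[0]) < tol


def single_qubit_cliffords():
    """Enumerate the single-qubit Cliffords (modulo global phase) from H and S."""
    group = [np.eye(2, dtype=complex)]
    frontier = list(group)
    while frontier:  # breadth-first closure under left-multiplication
        new = []
        for g in frontier:
            for gen in (H, S):
                candidate = gen @ g
                if not any(same_up_to_phase(candidate, c) for c in group):
                    group.append(candidate)
                    new.append(candidate)
        frontier = new
    return group


def rb_sequence(length, cliffords, rng):
    """Draw `length` random Cliffords and append the gate that undoes them all."""
    seq = [cliffords[i] for i in rng.integers(len(cliffords), size=length)]
    net = np.eye(2, dtype=complex)
    for gate in seq:
        net = gate @ net  # later gates act after earlier ones
    # The recovery gate is the adjoint (inverse) of the net unitary.
    return seq + [net.conj().T]


cliffords = single_qubit_cliffords()
rng = np.random.default_rng(seed=7)
sequence = rb_sequence(10, cliffords, rng)

# Without noise the full sequence composes to the identity (up to phase),
# so a qubit prepared in |0> would always be measured in |0>.
total = np.eye(2, dtype=complex)
for gate in sequence:
    total = gate @ total
print(len(cliffords), same_up_to_phase(total, np.eye(2)))
```

Using the adjoint directly works because the inverse of a Clifford is itself a Clifford; on hardware, that recovery gate would be compiled into the device's native gates.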

If, upon measurement, any of the qubits has not returned to its original state, we know an error occurred. To extract a number from this experiment, we repeat it many times with longer and longer random sequences, plot how the probability of returning to the original state decays as the number of gates grows, fit an exponential decay to that curve, and use the fitted decay rate to calculate a number between 0 and 1, called the fidelity.
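The fitting step can be sketched as follows. This is a minimal example under stated assumptions: it uses the common model P(m) = A·p^m + B for survival probability versus sequence length m, fixes the asymptote B at 1/2^n (the fully mixed state) so the fit becomes a straight line after taking a logarithm, and converts the decay p to a fidelity with the standard relation r = (1 − p)(d − 1)/d. The data are synthetic and noise-free, not measurements, and `fit_rb_decay` is a hypothetical helper; real analyses usually fit A, p, and B together with a nonlinear least-squares routine.

```python
import numpy as np


def fit_rb_decay(lengths, survival, n_qubits=1):
    """Fit P(m) = A * p**m + B and convert the decay p into a fidelity.

    Simplifying assumption: B is fixed at 1 / 2**n_qubits, so
    log(P - B) = log(A) + m * log(p) is linear in m.
    """
    d = 2 ** n_qubits
    b = 1.0 / d
    slope, intercept = np.polyfit(lengths, np.log(np.asarray(survival) - b), 1)
    p = np.exp(slope)
    a = np.exp(intercept)
    # Standard conversion from decay parameter to average error per Clifford.
    error_per_clifford = (1 - p) * (d - 1) / d
    return a, p, 1 - error_per_clifford


# Synthetic, noise-free survival probabilities (illustrative only).
lengths = np.array([1, 10, 20, 50, 100, 200])
true_a, true_p = 0.48, 0.995
survival = true_a * true_p ** lengths + 0.5

a, p, fidelity = fit_rb_decay(lengths, survival)
print(f"A = {a:.3f}, p = {p:.4f}, fidelity = {fidelity:.5f}")
```

Fitting the decay rate rather than reading off a single point is what makes the result insensitive to state-preparation and measurement errors, which only affect A and B.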

Layer fidelity extends this idea: it combines randomized benchmarking data from larger circuits to tell us things about the whole processor as well as its subsets of qubits.
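One way to picture "combining randomized benchmarking data" is sketched below. We assume each layer of disjoint gates is benchmarked simultaneously, that every subsystem (a gate pair or an idle single qubit) yields its own decay parameter p, that each p is converted to a process fidelity with the depolarizing relation (1 + (d² − 1)p)/d², and that the layer fidelity LF is the product over all subsystems and layers. For EPLG we assume the definition 1 − LF^(1/n₂q), where n₂q is the number of two-qubit gates covered; treat this as our assumption rather than a quote from this article. The 4-qubit chain, its decay values, and the helper names are hypothetical.

```python
import math


def process_fidelity_from_decay(p, n_qubits):
    """Process fidelity of a depolarizing channel with decay parameter p."""
    d2 = (2 ** n_qubits) ** 2
    return (1 + (d2 - 1) * p) / d2


def layer_fidelity(layers):
    """Product of subsystem process fidelities over all layers.

    Each layer maps a qubit tuple to the RB decay parameter measured for that
    subsystem while the rest of the layer ran simultaneously.
    """
    return math.prod(
        process_fidelity_from_decay(p, len(qubits))
        for layer in layers
        for qubits, p in layer.items()
    )


def eplg(lf, n_two_qubit_gates):
    """Error per layered gate: spread the layer-fidelity loss over the 2Q gates."""
    return 1 - lf ** (1 / n_two_qubit_gates)


# Hypothetical 4-qubit chain 0-1-2-3 covered by two layers of disjoint gates.
layers = [
    {(0, 1): 0.985, (2, 3): 0.985},  # layer A: two simultaneous 2Q gates
    {(1, 2): 0.985, (0,): 0.997, (3,): 0.997},  # layer B: one 2Q gate, idle ends
]
lf = layer_fidelity(layers)
print(f"LF = {lf:.4f}, EPLG = {eplg(lf, n_two_qubit_gates=3):.4f}")
```

Because LF multiplies fidelities across every gate in the layers, it shrinks as the circuit grows; normalizing by the number of two-qubit gates gives a per-gate figure that can be compared across chains of different lengths.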