GAIA-1 is a cutting-edge generative world model built for autonomous driving. A world model learns representations of the environment and its future dynamics, giving a structured understanding of the surroundings that can be used to make informed driving decisions. Predicting future events is fundamental to autonomous systems: accurate prediction lets autonomous vehicles anticipate what will happen and plan their actions, improving safety and efficiency on the road. Incorporating world models into driving models could help them understand human decisions better and ultimately generalise to more real-world situations.

GAIA-1 takes video, text and action inputs, generates realistic driving videos, and offers fine-grained control over ego-vehicle behaviour and scene features. Because it is multi-modal, GAIA-1 can generate videos from many prompt modalities and from combinations of them.

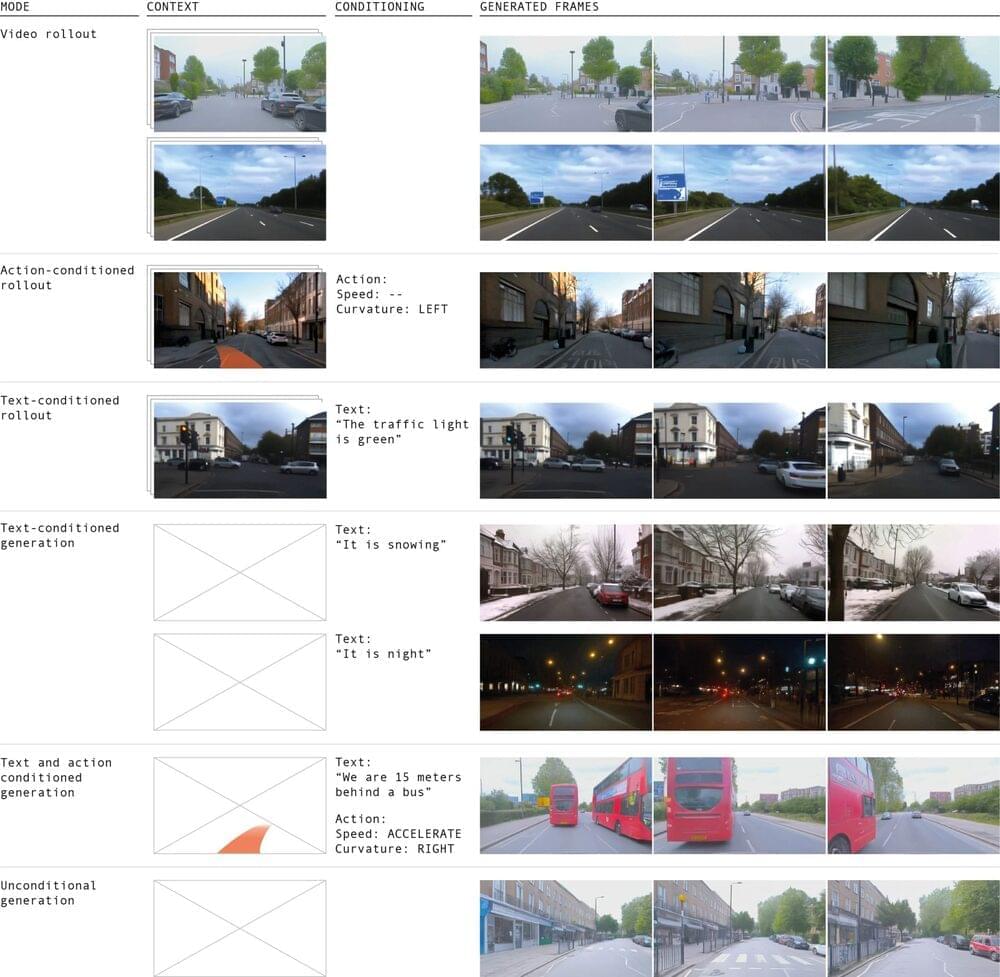

Examples of the prompt types GAIA-1 can use to generate videos. GAIA-1 can generate videos by rolling out the future from a video prompt. These future rollouts can be further conditioned on actions to influence particular behaviours of the ego-vehicle (e.g. steer left), or on text to change some aspect of the scene (e.g. change the colour of the traffic light). For speed and curvature, we condition GAIA-1 by passing the sequence of future speed and/or curvature values. GAIA-1 can also generate realistic videos from text prompts alone, or by simply drawing samples from its prior distribution (fully unconditional generation).
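
To make the prompt combinations above concrete, here is a minimal Python sketch of how they might be represented. It is purely illustrative and is not GAIA-1's actual interface: the `Prompt` class, its field names and the `active_modalities` helper are all hypothetical names introduced for this example.

```python
from dataclasses import dataclass
from typing import List, Optional, Sequence


@dataclass
class Prompt:
    """Hypothetical container for the conditioning signals described above."""
    video_frames: Optional[Sequence[str]] = None        # context frames to roll out from
    text: Optional[str] = None                          # e.g. a scene description
    future_speed: Optional[Sequence[float]] = None      # one value per future step
    future_curvature: Optional[Sequence[float]] = None  # one value per future step


def active_modalities(prompt: Prompt) -> List[str]:
    """Return which conditioning modalities a prompt uses.

    Speed and curvature both count as "action" conditioning; either one
    (or both) is enough. An empty prompt means sampling from the prior.
    """
    modes = []
    if prompt.video_frames:
        modes.append("video")
    if prompt.text:
        modes.append("text")
    if prompt.future_speed or prompt.future_curvature:
        modes.append("action")
    return modes or ["unconditional"]


# Video rollout steered by a sequence of future curvature values (made-up numbers).
steered = Prompt(video_frames=["frame_0", "frame_1"], future_curvature=[0.0, 0.01, 0.02])
print(active_modalities(steered))

# No conditioning at all: fully unconditional generation.
print(active_modalities(Prompt()))
```

Treating every field as optional mirrors the text: any subset of video, text and action signals can be supplied, and supplying none corresponds to drawing a sample from the prior.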