ChatGPT is currently deployed on A100 chips that have 80 GB of memory each. Nvidia decided this was a bit wimpy, so they developed much faster H100 chips (an H100 is about twice as fast as an A100). Now there is a variant with 94 GB of memory per GPU, and they found a way to put two of them on a card with high-speed connections between them, for a total of 188 GB of memory per card.

So hardware is getting more and more impressive!

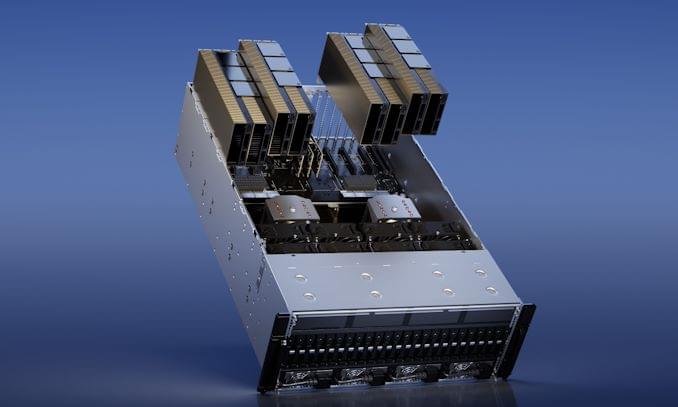

While this year’s Spring GTC event doesn’t feature any new GPUs or GPU architectures from NVIDIA, the company is still in the process of rolling out new products based on the Hopper and Ada Lovelace GPUs it has introduced in the past year. At the high end of the market, the company today is announcing a new H100 accelerator variant specifically aimed at large language model users: the H100 NVL.

The H100 NVL is an interesting variant on NVIDIA’s H100 PCIe card that, in a sign of the times and NVIDIA’s extensive success in the AI field, is aimed at a singular market: large language model (LLM) deployment. There are a few things that set this card apart from NVIDIA’s usual server fare – not the least of which is that it’s two H100 PCIe boards that come already bridged together – but the big takeaway is the big memory capacity. The combined dual-GPU card offers 188GB of HBM3 memory – 94GB per GPU – offering more memory per GPU than any other NVIDIA part to date, even within the H100 family.

Driving this SKU is a specific niche: memory capacity. Large language models like the GPT family are in many respects memory-capacity bound, as they’ll quickly fill up even an H100 accelerator in order to hold all of their parameters (175B in the case of the largest GPT-3 models). As a result, NVIDIA has opted to scrape together a new H100 SKU that offers a bit more memory per GPU than their usual H100 parts, which top out at 80GB per GPU.
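To make the memory-capacity point concrete, here is a rough back-of-the-envelope sketch in Python. It assumes the weights are stored in 16-bit precision (2 bytes per parameter) and counts only the weights, ignoring activations, the attention cache, and any framework overhead, so the real requirement would be higher. The function names are made up for illustration; only the 175B, 80GB, and 94GB figures come from the text above.

```python
import math


def weight_memory_gb(num_params: float, bytes_per_param: int) -> float:
    """Memory needed to hold the weights alone, in decimal gigabytes."""
    return num_params * bytes_per_param / 1e9


def gpus_needed(total_gb: float, gb_per_gpu: float) -> int:
    """Minimum number of GPUs whose combined memory covers total_gb."""
    return math.ceil(total_gb / gb_per_gpu)


GPT3_PARAMS = 175e9   # largest GPT-3 model, per the article
FP16_BYTES = 2        # assumption: 16-bit weights

weights_gb = weight_memory_gb(GPT3_PARAMS, FP16_BYTES)
print(f"Weights alone: {weights_gb:.0f} GB")

# Compare an 80GB part (A100 or standard H100) with a 94GB H100 NVL GPU.
for label, capacity_gb in [("80GB GPU", 80), ("94GB GPU", 94)]:
    print(f"{label}: at least {gpus_needed(weights_gb, capacity_gb)} GPUs")
```

Under these assumptions the weights come to 350 GB, so no single GPU can hold them, and the extra 14GB per GPU is just enough to drop the minimum count from five GPUs to four (two H100 NVL cards).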