LLM stands for Large Language Model. LLMs are advanced machine learning models trained on massive volumes of text, often billions of words, to understand and generate natural language. Examples include GPT-3 (Generative Pre-trained Transformer 3) and BERT (Bidirectional Encoder Representations from Transformers). After this broad pre-training, they can be fine-tuned on tasks such as text classification, machine translation, or question answering, which makes them highly adaptable to a wide range of language-based applications.

LLMs struggle with arithmetic reasoning tasks and frequently produce incorrect responses. Unlike open-ended natural language tasks, math problems usually have only one correct answer, which makes it difficult for LLMs to generate precise solutions. As far as is known, no current LLM indicates how confident it is in its responses, which undermines trust in these models and limits their adoption.

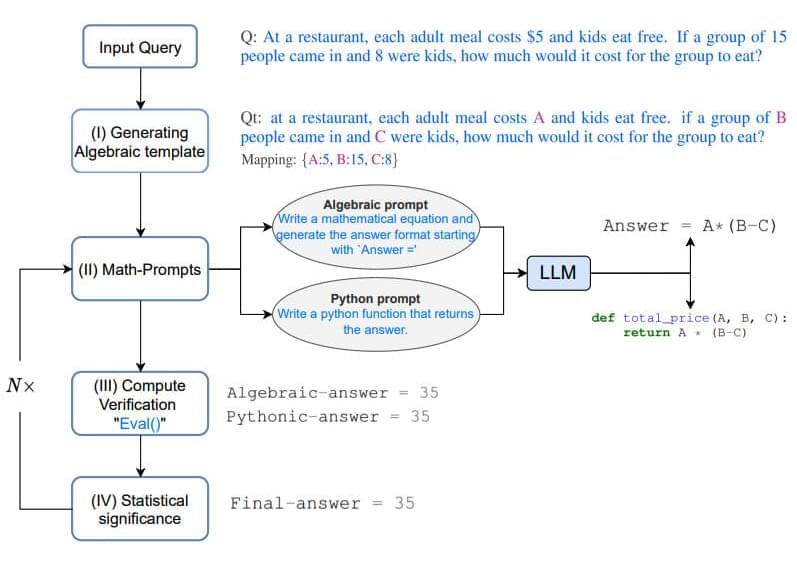

To address this issue, researchers proposed ‘MathPrompter,’ which improves LLM performance on mathematical problems and increases confidence in the model's predictions. MathPrompter is an AI-powered tool that helps users solve math problems by generating step-by-step solutions. It uses deep learning and natural language processing techniques to interpret a math problem, then generates a solution that explains each step of the process.
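
One way to build confidence in an answer is to solve the same problem in two independent ways and accept the result only when both agree. The sketch below illustrates that idea in Python. It is a minimal illustration, not MathPrompter's actual implementation: the two candidate functions are hard-coded stand-ins for solutions an LLM might produce, and the names `algebraic_candidate`, `program_candidate`, `agree_on_random_inputs`, and `solve_with_check` are hypothetical. The example problem assumed here is "a shop sells `a` items at `b` dollars each; what is the revenue?"

```python
import random


def algebraic_candidate(a, b):
    # Hypothetical stand-in for an LLM-produced algebraic expression: a * b.
    return a * b


def program_candidate(a, b):
    # Hypothetical stand-in for an LLM-produced program that solves the
    # same problem a different way (repeated addition).
    total = 0
    for _ in range(a):
        total += b
    return total


def agree_on_random_inputs(f, g, trials=5, seed=0):
    # Evaluate both candidates on random variable assignments and
    # report whether they agree on every trial.
    rng = random.Random(seed)
    for _ in range(trials):
        a, b = rng.randint(1, 20), rng.randint(1, 20)
        if f(a, b) != g(a, b):
            return False
    return True


def solve_with_check(a, b):
    # Return an answer only if the two candidates are consistent;
    # otherwise return None to signal low confidence.
    if agree_on_random_inputs(algebraic_candidate, program_candidate):
        return algebraic_candidate(a, b)
    return None


if __name__ == "__main__":
    print(solve_with_check(4, 7))
```

Agreement between independent solutions does not prove the answer is right, but disagreement is a cheap signal that at least one of them is wrong.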