How far away could an artificial brain be? Perhaps a very long way off still, but a working analogue to the essential element of the brain’s networks, the synapse, appears closer at hand now.

That’s because a device that draws inspiration from batteries now appears surprisingly well suited to run artificial neural networks. Called electrochemical RAM (ECRAM), it is giving traditional transistor-based AI an unexpected run for its money, and it is quickly moving toward the head of the pack in the race to develop the perfect artificial synapse. Researchers reported a string of advances at this week’s IEEE International Electron Devices Meeting (IEDM 2022) and elsewhere, including ECRAM devices that use less energy, hold memory longer, and take up less space.

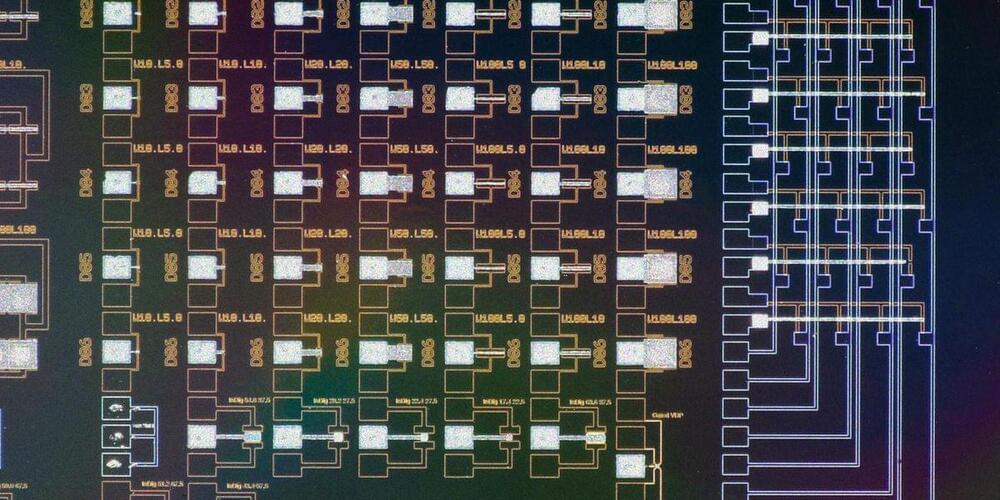

The artificial neural networks that power today’s machine-learning algorithms are software that models a large collection of electronics-based “neurons,” along with their many connections, or synapses. Instead of representing neural networks in software, researchers think that faster, more energy-efficient AI would result from representing the components, especially the synapses, with real devices. This concept, called analog AI, requires a memory cell that combines a whole slew of difficult-to-obtain properties: it needs to hold a large enough range of analog values, switch between different values reliably and quickly, hold its value for a long time, and be amenable to manufacturing at scale.