Recent progress in generative models has paved the way to a manifold of tasks that some years ago were only imaginable. With the help of large-scale image-text datasets, generative models can learn powerful representations exploited in fields such as text-to-image or image-to-text translation.

The recent release of Stable Diffusion and the DALL-E API led to great excitement around text-to-image generative models capable of generating complex and stunning novel images from an input descriptive text, similar to performing a search on the internet.

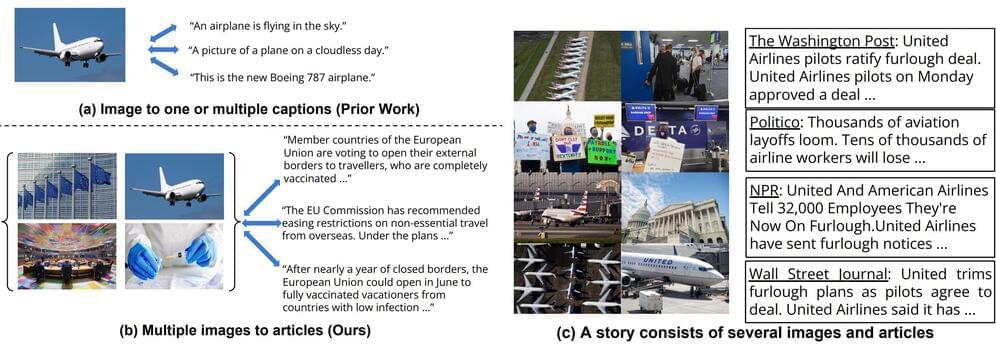

With the rising interest in the reverse task, i.e., image-to-text translation, several studies tried to generate captions from input images. These methods often presume a one-to-one correspondence between pictures and their captions. However, multiple images can be connected to and paired with a long text narrative, such as photos in a news article. Therefore, the need for illustrative correspondences (e.g., “travel” or “vacation”) rather than literal one-to-one captions (e.g., “airplane flying”).