When video chatting with coworkers or family, many of us have grown accustomed to using virtual backgrounds and background filters. These effects offer more control over our surroundings: they reduce distractions, preserve the privacy of those around us, and even liven up our virtual presentations and get-togethers. However, background filters don’t always work as expected or perform well for everyone.

Image segmentation is the computer vision process of separating a photo or video frame into its different components. It is widely used to improve background blurring, virtual backgrounds, and other augmented reality (AR) effects. Despite advanced algorithms, achieving highly accurate person segmentation remains challenging.
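To make the link between segmentation and virtual backgrounds concrete, here is a minimal sketch of how a person mask could be used to composite a camera frame over a replacement background. It is an illustration under stated assumptions, not the method of any particular product: the `composite` function, the color constants, and the tiny 2x3 "frame" are all hypothetical, and the mask is written by hand rather than predicted by a model.

```python
# Illustrative sketch only: all names and pixel values below are hypothetical.

def composite(frame, background, mask):
    """Blend a camera frame over a background using a per-pixel person mask.

    frame, background: 2D lists of (R, G, B) tuples with the same shape.
    mask: 2D list of floats in [0, 1]; 1.0 means "person", 0.0 means "not person".
    """
    out = []
    for frame_row, bg_row, mask_row in zip(frame, background, mask):
        out_row = []
        for fg, bg, alpha in zip(frame_row, bg_row, mask_row):
            # alpha = 1 keeps the camera pixel; alpha = 0 shows the virtual background.
            pixel = tuple(round(alpha * f + (1 - alpha) * b) for f, b in zip(fg, bg))
            out_row.append(pixel)
        out.append(out_row)
    return out


PERSON = (200, 150, 120)  # stand-in color for the person
ROOM = (90, 90, 90)       # stand-in color for the real room
BEACH = (0, 120, 255)     # stand-in color for the virtual background

frame = [
    [ROOM, PERSON, ROOM],
    [ROOM, PERSON, PERSON],
]
background = [[BEACH] * 3 for _ in range(2)]

# Hand-written mask standing in for the output of a segmentation model.
mask = [
    [0.0, 1.0, 0.0],
    [0.0, 1.0, 1.0],
]

result = composite(frame, background, mask)
```

In this sketch, every pixel the mask labels as "person" keeps its camera color and every other pixel is replaced by the virtual background. If the mask wrongly assigned 1.0 to a room pixel, that part of the real room would show through in the output.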

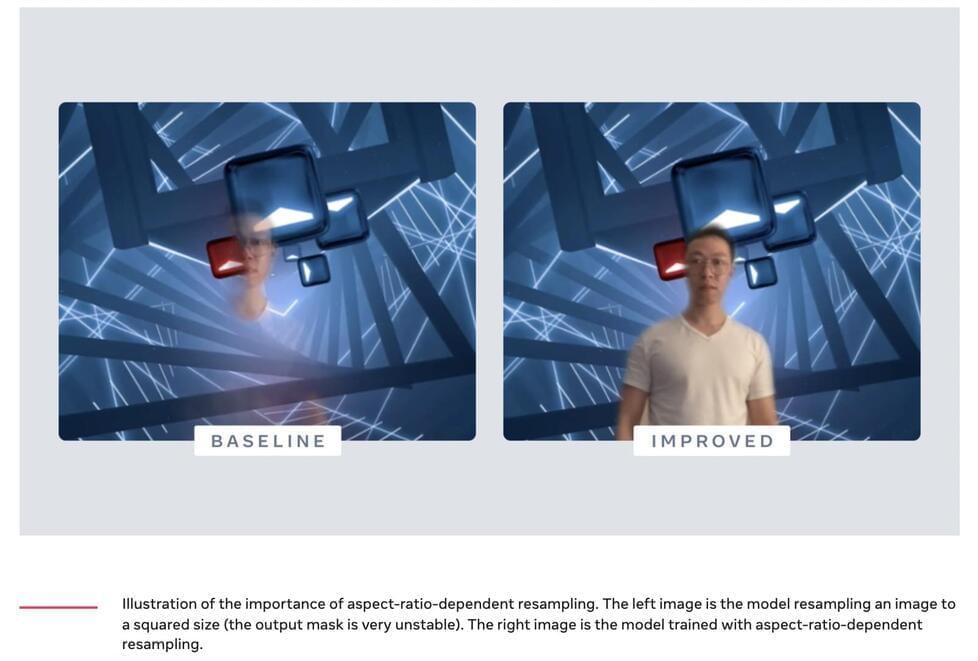

The model used for image segmentation must be highly consistent and lag-free. Inefficient algorithms can result in a poor experience for users. For instance, during a video conference, artifacts caused by erroneous segmentation output can easily distract people who are using virtual background software. More importantly, segmentation errors can unintentionally expose people’s physical surroundings while background effects are applied.