By Watching Unlabeled Videos.

Machine learning (ML) and artificial intelligence (AI) systems are increasingly used by people worldwide to make decisions in their daily lives. Many studies now focus on developing ML agents that can make reasonable predictions about the future over various timescales. Such predictions would help agents anticipate changes in the world around them, including the actions of other agents, and plan their next steps. Making judgments that depend on accurate future prediction requires both capturing important transitions in the environment and adapting to how those changes develop over time.

Previous work on future prediction from visual observations has largely been constrained by its output format or by a manually defined set of human activities. Such outputs are either overly detailed and difficult to forecast, or they miss crucial information about the richness of the real world. For example, predicting “someone jumping” does not capture why they are jumping, what they are jumping onto, and so on. Previous models were also designed to make predictions at a fixed offset into the future, which is a limiting assumption because we rarely know when relevant future states will occur.

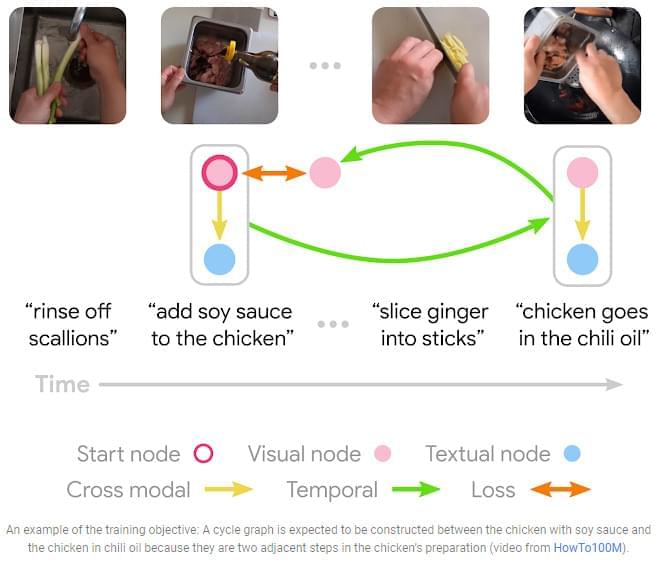

A new Google study introduces Multi-Modal Cycle Consistency (MMCC), a method that uses narrated instructional video to train a strong future prediction model. It is a self-supervised technique developed using a large unlabeled dataset of varied human actions. The resulting model operates at a high level of abstraction, can predict arbitrarily far into the future, and decides how far to predict based on context.
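
To make the idea of a cycle-consistency objective more concrete, here is a toy sketch in Python with NumPy. It is an illustration under stated assumptions, not the study's implementation: the function names (`soft_lookup`, `cycle_loss`), the linear maps `W_fwd` and `W_bwd`, the temperature value, and the random or identity embeddings are all hypothetical. The sketch starts at one video moment, finds the matching narration, predicts forward, predicts backward, and is penalized if it does not return to the moment where it started. Because the forward step attends over all narration moments, it is not tied to a fixed time offset.

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(x - np.max(x))
    return e / e.sum()


def soft_lookup(query, keys, values, temperature=0.1):
    """Soft nearest neighbour: attention-weighted average of `values`.

    Returns the averaged value and the attention weights over `keys`.
    """
    weights = softmax(keys @ query / temperature)
    return weights @ values, weights


def cycle_loss(video, text, start, W_fwd, W_bwd):
    """Toy cycle loss (hypothetical): video -> text -> future -> past -> video.

    video, text: arrays of shape (num_moments, dim), one embedding per moment.
    start: index of the starting video moment.
    W_fwd, W_bwd: assumed linear forward/backward predictors, shape (dim, dim).
    """
    # 1. Cross-modal step: narration that best matches the starting frame.
    text_now, _ = soft_lookup(video[start], text, text)
    # 2. Forward step: predict a future state, then attend over ALL narration
    #    moments, so no fixed time offset is imposed.
    text_future, _ = soft_lookup(W_fwd @ text_now, text, text)
    # 3. Backward step: predict the past from the selected future.
    pred_past = W_bwd @ text_future
    # 4. Cross-modal step back to video: distribution over video moments.
    _, weights = soft_lookup(pred_past, video, video)
    # The cycle is consistent if probability mass lands on the start moment.
    return -np.log(weights[start] + 1e-12)


# Usage with made-up data: four moments with perfectly aligned embeddings.
emb = np.eye(4)
consistent = cycle_loss(emb, emb, start=1, W_fwd=np.eye(4), W_bwd=np.eye(4))
# A backward map that shifts every moment by one breaks the cycle.
shifted = np.roll(np.eye(4), 1, axis=0)
broken = cycle_loss(emb, emb, start=1, W_fwd=np.eye(4), W_bwd=shifted)
print(consistent, broken)
```

In this toy setup the loss is small when the backward prediction returns to the starting moment and large when it lands elsewhere, which is the signal a self-supervised model could be trained on without any manual labels.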