A team of researchers at OpenAI, a San Francisco artificial intelligence development company, has added a new module to its GPT-3 autoregressive language model. Called DALL·E, the module accepts a text description containing multiple characteristics, analyzes it and then draws a picture of what it believes was described. On their webpage describing the new module, the team at OpenAI describes it as "a simple decoder-only transformer" and notes that they plan to provide more details about its architecture and how it can be used as they learn more about it themselves.

GPT-3 was developed by the company to demonstrate how far neural networks could take text processing and generation. It analyzes text supplied by a user and generates new text based on that input. For example, if a user types "tell me a story about a dog that saves a child in a fire," GPT-3 can write such a story in a human-like way. Entering the same prompt a second time produces a different version of the story.

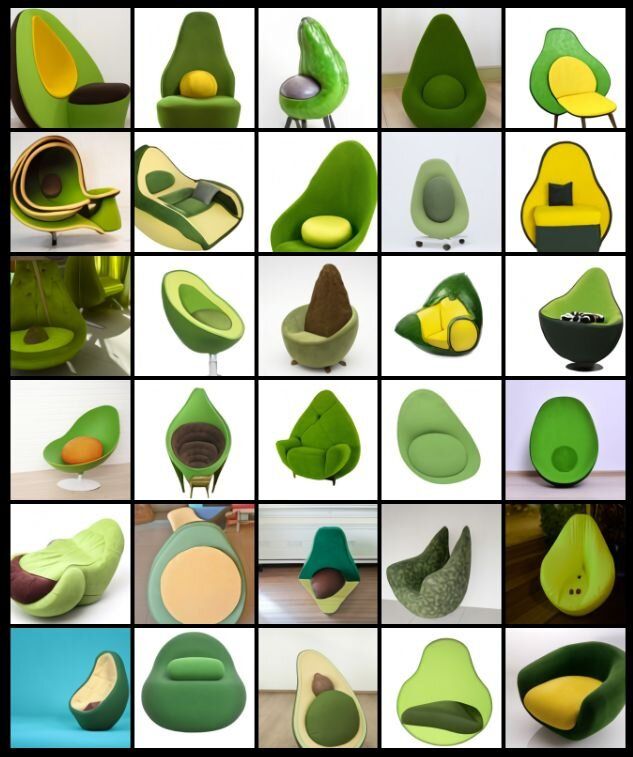

In this new effort, the researchers have extended this ability to images. A user types in a sentence and DALL·E attempts to draw what it describes. For example, if a user types in "a dog with cat claws and a bird tail," the system produces a cartoon-like image of a dog with those features, and not just one. It produces a whole set of them, each based on a slightly different interpretation of the original text.