Gollum in “The Lord of the Rings,” Thanos in the “Avengers,” Snoke in “Star Wars,” the Na’vi in “Avatar”: we have all experienced the wonders of motion capture, a cinema technique that tracks an actor’s movements and translates them into computer animation to create a moving, emoting (and maybe one day Oscar-winning) digital character.

What many might not realize is that motion capture isn’t limited to the big screen; it extends into science as well. Behavioral scientists have been developing and using similar tools to study and analyze the posture and movement of animals under a variety of conditions. But motion-capture approaches require that the subject wear a complex suit with markers that let the computer “know” where each part of the body is in three-dimensional space. That might be okay for a professional actor, but animals tend to resist dressing up.

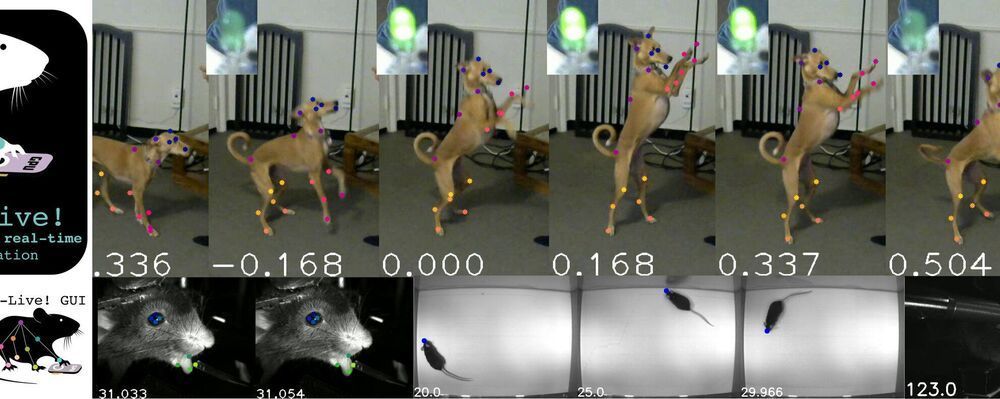

To solve the problem, scientists have begun combining motion capture with deep learning, a method that lets a computer essentially teach itself to get better at a task, such as recognizing a specific “key-point” in videos. The idea is to teach the computer to track and even predict the movements or posture of an animal without the need for motion-capture markers.