Neural networks have become enormously successful – but we often don’t know how or why they work. Now, computer scientists are starting to peer inside their artificial minds.

A PENNY for ’em? Knowing what someone is thinking is crucial to understanding their behaviour. It’s the same with artificial intelligences. A new technique for taking snapshots of neural networks as they crunch through a problem could help us fathom how they work, leading to AIs that work better – and are more trustworthy.

In the last few years, deep-learning algorithms built on neural networks – multiple layers of interconnected artificial neurons – have driven breakthroughs in many areas of artificial intelligence, including natural language processing, image recognition and medical diagnosis. One even beat a professional human player at the game of Go.

The trouble is that we don’t always know how they do it. A deep-learning system is a black box, says Nir Ben Zrihem at the Israel Institute of Technology in Haifa. “If it works, great. If it doesn’t, you’re screwed.”

Neural networks are more than the sum of their parts. They are built from many very simple components – the artificial neurons. “You can’t point to a specific area in the network and say all of the intelligence resides there,” says Zrihem. What’s more, the connections between those neurons are so complex that it can be impossible to retrace the steps a deep-learning algorithm took to reach a given result. In such cases, the machine acts as an oracle and its results are taken on trust.

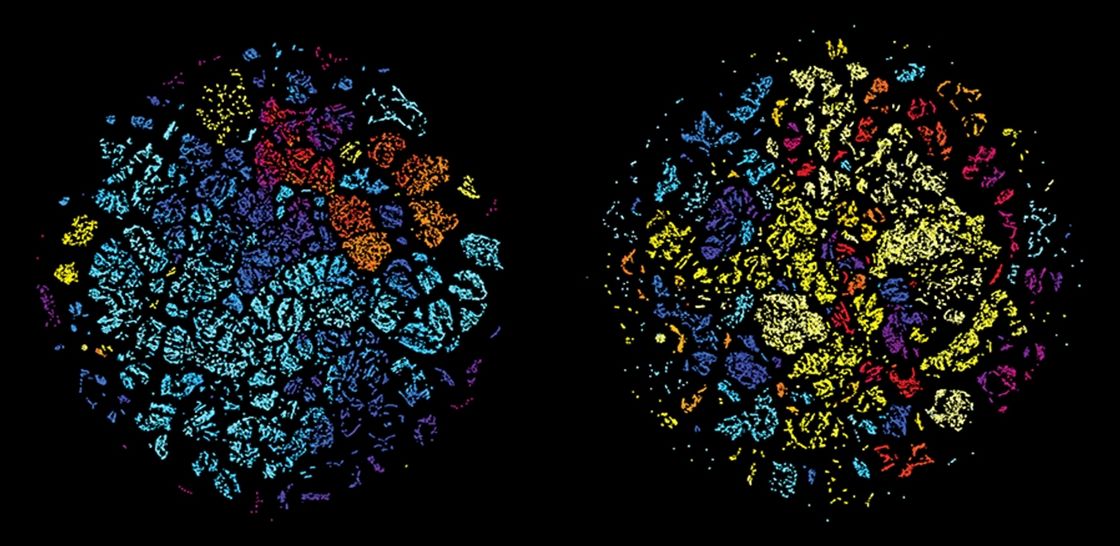

To address this, Zrihem and his colleagues created images of deep learning in action. The technique, they say, is like an fMRI for computers, capturing an algorithm’s activity as it works through a problem. The images allow the researchers to track different stages of the neural network’s progress, including dead ends.