By Angela Chen — The Chronicle of Higher Education

When the world ends, it may not be by fire or ice or an evil robot overlord. Our demise may come at the hands of a superintelligence that just wants more paper clips.

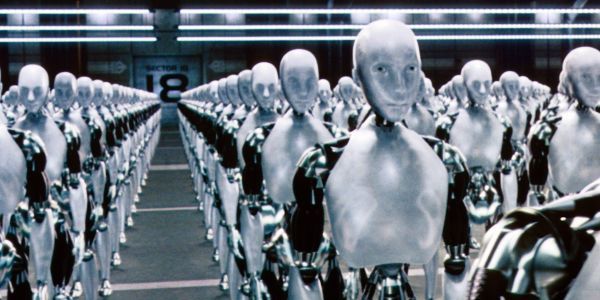

So says Nick Bostrom, a philosopher who founded and directs the Future of Humanity Institute, in the Oxford Martin School at the University of Oxford. He created the “paper-clip maximizer” thought experiment to expose flaws in how we conceive of superintelligence. We anthropomorphize such machines as particularly clever math nerds, says Bostrom, whose book *Superintelligence: Paths, Dangers, Strategies* was released in Britain in July and arrived stateside this month. Spurred by science fiction and pop culture, we assume that the main superintelligence-gone-wrong scenario features a hostile organization programming software to conquer the world. But those assumptions fundamentally misunderstand the nature of superintelligence: The dangers come not necessarily from evil motives, says Bostrom, but from a powerful, wholly nonhuman agent that lacks common sense.