IBM and AMD announced plans to develop next-generation computing architectures based on the combination of quantum computers and high-performance computing, known as quantum-centric supercomputing.

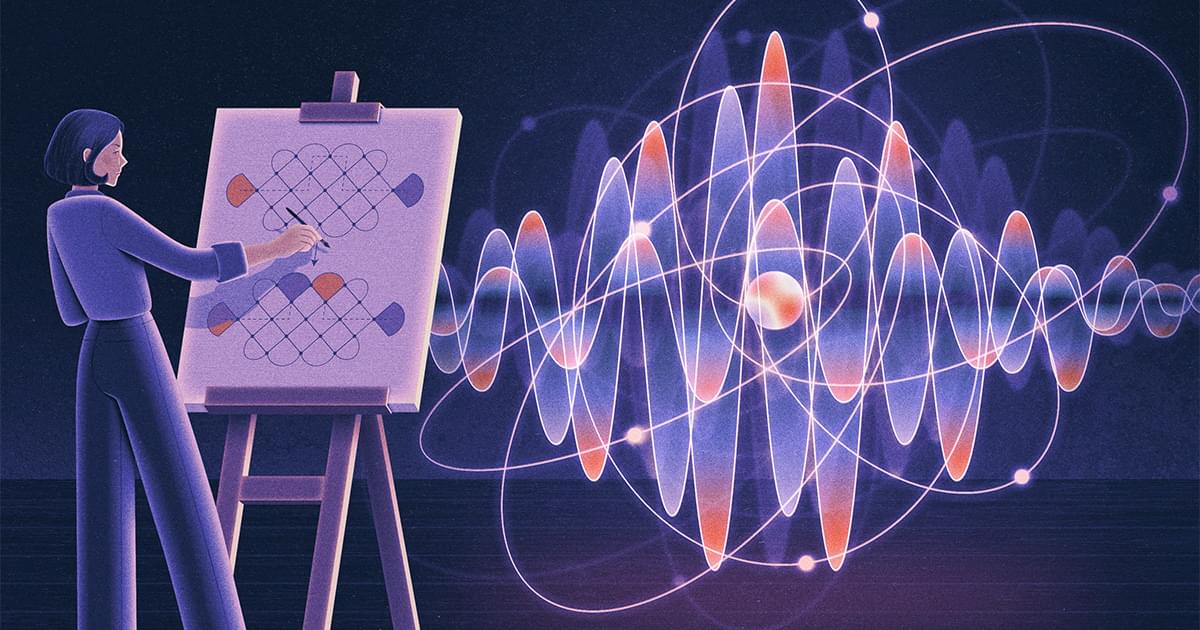

A new trick for modeling molecules with quantum accuracy takes a step toward revealing the equation at the center of a popular simulation approach, which is used in fundamental chemistry and materials science studies.

The effort to understand materials and chemical reactions eats up roughly a third of national lab supercomputer time in the U.S. The gold standard for accuracy is solving the quantum many-body problem, which can tell you what’s happening at the level of individual electrons. This is the key to chemical and material behavior, because electrons are responsible for chemical reactivity and bonding, electrical properties and more. However, quantum many-body calculations are so difficult that scientists can only use them to model atoms and molecules with a handful of electrons at a time.
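To see why exact many-body calculations stall at a handful of electrons, here is a back-of-envelope storage estimate. It is a simplified sketch, not a real solver: it assumes each electron's position is sampled on a 3D grid with a hypothetical 10 points per axis, ignores spin and antisymmetry, and compares the values needed for the full many-body wavefunction with those needed for a single electron density on the same grid.

```python
# Back-of-envelope storage estimate (illustrative assumptions, not a real solver):
# - each electron's position is sampled on a cubic grid with POINTS_PER_AXIS points per axis
# - spin and antisymmetry are ignored; each value is stored as an 8-byte float
POINTS_PER_AXIS = 10  # hypothetical grid resolution
BYTES_PER_VALUE = 8


def wavefunction_values(n_electrons: int, points_per_axis: int = POINTS_PER_AXIS) -> int:
    """Values needed for a many-body wavefunction: one per joint position of all electrons."""
    grid_points = points_per_axis ** 3
    return grid_points ** n_electrons


def density_values(points_per_axis: int = POINTS_PER_AXIS) -> int:
    """Values needed for the electron density: one per grid point, whatever the electron count."""
    return points_per_axis ** 3


if __name__ == "__main__":
    for n in (1, 2, 3, 4, 5):
        psi_bytes = wavefunction_values(n) * BYTES_PER_VALUE
        rho_bytes = density_values() * BYTES_PER_VALUE
        print(f"{n} electrons: wavefunction {psi_bytes:.3e} bytes, density {rho_bytes} bytes")
```

Under these assumptions, five electrons already need 10^15 values (about 8 petabytes) for the wavefunction, while the density stays at 1,000 values (8,000 bytes) no matter how many electrons there are.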

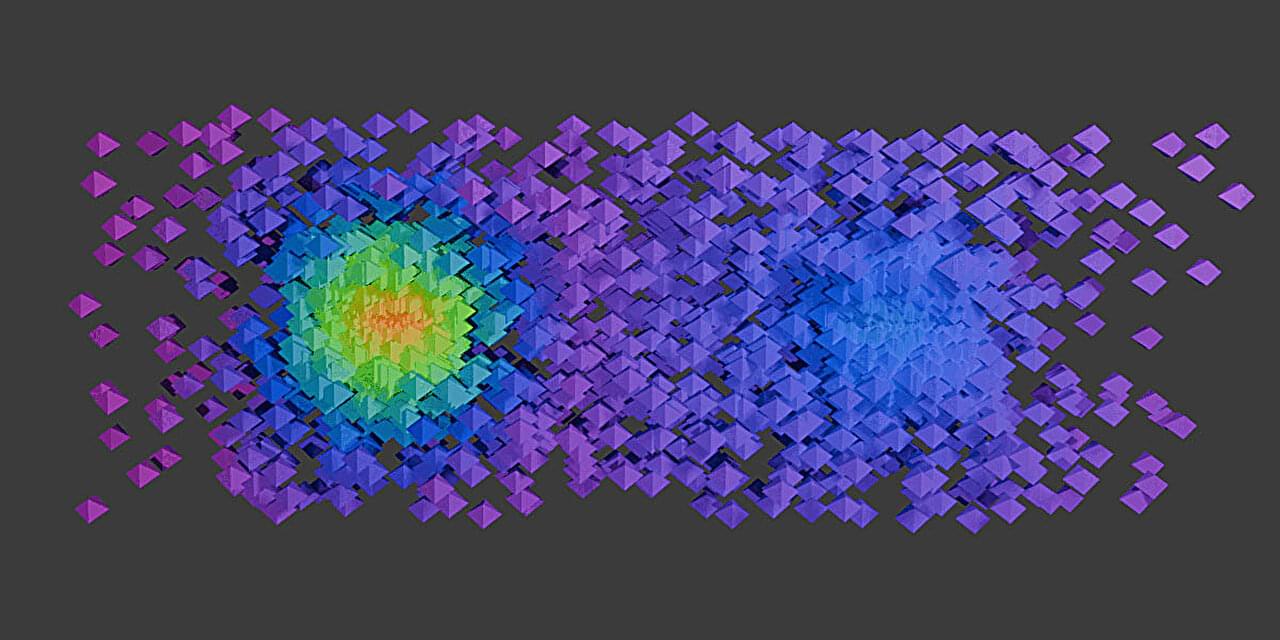

Density functional theory, or DFT, is easier—the computing resources needed for its calculations scale with the number of electrons cubed, rather than rising exponentially with each new electron. Instead of following each individual electron, this theory calculates electron densities—where the electrons are most likely to be located in space. In this way, it can be used to simulate the behavior of many hundreds of atoms.
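The gap between cubic and exponential scaling compounds quickly. The sketch below compares how cost grows relative to a 10-electron baseline. It assumes, for illustration only, that the exact many-body cost doubles with each added electron (a stand-in for "rising exponentially") and ignores all constant factors, so only the ratios are meaningful.

```python
# Relative cost growth, ignoring constant prefactors (illustrative only).
# Assumption: exact many-body cost ~ 2**N (a stand-in for exponential growth);
# DFT cost ~ N**3, as described in the text.


def dft_cost_ratio(n: int, baseline: int) -> float:
    """How many times more expensive DFT is at n electrons than at `baseline` electrons."""
    return (n / baseline) ** 3


def exact_cost_ratio(n: int, baseline: int) -> float:
    """Same ratio for an exact many-body calculation, assuming cost doubles per electron."""
    return 2.0 ** (n - baseline)


if __name__ == "__main__":
    base = 10
    for n in (10, 20, 40, 80, 160):
        print(f"{n:>4} electrons: DFT x{dft_cost_ratio(n, base):,.0f}, "
              f"exact x{exact_cost_ratio(n, base):.2e}")
```

Going from 10 to 160 electrons multiplies the DFT cost by 4,096 (16 cubed), while the doubling assumption gives a factor of 2^150, roughly 10^45.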

A team of scientists at Simon Fraser University’s Quantum Technology Lab and leading Canada-based quantum company Photonic Inc. have created a new type of silicon-based quantum device controlled both optically and electrically, marking the latest breakthrough in the global quantum computing race.

The research, published in the journal Nature Photonics, reveals new diode nanocavity devices for electrical control over silicon color center qubits.

The devices provide the first-ever demonstration of an electrically injected single-photon source in silicon. The breakthrough clears another hurdle toward building a quantum computer, which has enormous potential to provide computing power well beyond that of today’s supercomputers and to advance fields like chemistry, materials science, medicine and cybersecurity.

Europe now has an exascale supercomputer, JUPITER, which runs entirely on renewable energy. Of particular interest: one of the 30 inaugural projects for the machine focuses on realistic simulations of biological neurons (see https://www.fz-juelich.de/en/news/effzett/2024/brain-research).

[https://www.nature.com/articles/d41586-025-02981-1](https://www.nature.com/articles/d41586-025-02981-1)

Large language models (LLMs) run on artificial neural networks inspired by the way the brain works. Dr. Thorsten Hater (JSC) focuses on the natural systems that inspired those networks: the neurons that communicate with each other in the human brain. He wants to use the exascale computer JUPITER to perform even more realistic simulations of the behaviour of individual neurons.

Many models treat a neuron merely as a point that is connected to other points. The spikes, or electrical signals, travel along these connections. “Of course, this is overly simplified,” says Hater. “In our model, the neurons have a spatial extension, as they do in reality. This allows us to describe many processes in detail on the molecular level. We can calculate the electric field across the entire cell. And we can thus show how signal transmission varies right down to the individual neuron. This gives us a much more realistic picture of these processes.”
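The difference between a point neuron and a spatially extended one can be sketched with a passive cable split into compartments. This is a generic, textbook-style compartmental model written from scratch; it is not Arbor's API or Hater's model, and the compartment count, membrane conductance `g_m`, axial conductance `g_a`, and injected current are hypothetical values in arbitrary units. Current injected at one end produces a voltage that decays along the cable, something a single point cannot represent.

```python
# Passive multi-compartment cable at steady state (illustrative, arbitrary units).
# Each compartment leaks current through its membrane (g_m) and exchanges current
# with its neighbours through the axial conductance (g_a):
#   g_m*V[i] + g_a*(V[i]-V[i-1]) + g_a*(V[i]-V[i+1]) = I[i]
# Voltages are relative to rest. All parameter values are hypothetical.


def solve_tridiagonal(lower, diag, upper, rhs):
    """Thomas algorithm for a tridiagonal system; lower[0] and upper[-1] are unused."""
    n = len(diag)
    c = [0.0] * n
    d = [0.0] * n
    c[0] = upper[0] / diag[0]
    d[0] = rhs[0] / diag[0]
    for i in range(1, n):
        denom = diag[i] - lower[i] * c[i - 1]
        c[i] = upper[i] / denom if i < n - 1 else 0.0
        d[i] = (rhs[i] - lower[i] * d[i - 1]) / denom
    x = [0.0] * n
    x[-1] = d[-1]
    for i in range(n - 2, -1, -1):  # back substitution
        x[i] = d[i] - c[i] * x[i + 1]
    return x


def cable_steady_state(n_comp=10, g_m=0.1, g_a=1.0, injected=1.0):
    """Steady-state voltage of each compartment with current injected into compartment 0."""
    lower, diag, upper = [0.0] * n_comp, [0.0] * n_comp, [0.0] * n_comp
    for i in range(n_comp):
        diag[i] = g_m
        if i > 0:  # coupling to the previous compartment
            lower[i] = -g_a
            diag[i] += g_a
        if i < n_comp - 1:  # coupling to the next compartment
            upper[i] = -g_a
            diag[i] += g_a
    rhs = [0.0] * n_comp
    rhs[0] = injected
    return solve_tridiagonal(lower, diag, upper, rhs)


if __name__ == "__main__":
    for i, v in enumerate(cable_steady_state()):
        print(f"compartment {i}: {v:.3f}")
```

With `n_comp=1` the model collapses to a point neuron (voltage `injected / g_m`); with more compartments, the far end of the cell sees only a fraction of the voltage at the injection site.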

For the simulations, Hater uses a program called Arbor. This allows more than two million individual cells to be interconnected computationally. Such models of natural neural networks are useful, for example, in the development of drugs to combat neurodegenerative diseases like Alzheimer’s. The physicist and software developer would like to simulate and study the changes that take place in the neurons in the brain on the exascale computer.

Questions to inspire discussion.

🌐 Q: What distinguishes embedded AI from language models like ChatGPT? A: Embedded AI interacts with the real world, while LLMs (Large Language Models) primarily answer questions based on trained information.

Chip Production and Supply.

💻 Q: What are Samsung’s plans for chip production in Texas? A: Samsung’s new Texas chip plant will produce 2-nanometer chips at 16,000 wafers per month by the end of 2024, boosted by a $16B deal with Tesla.

🔧 Q: How will the Samsung-Tesla deal affect Tesla’s chip supply? A: The deal will significantly boost Tesla’s chip supply, with 17,000 wafers per month of 2-nanometer chips reserved solely for Tesla.
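Wafer counts translate into chip counts only once die size and yield are known, and neither is given here. As a rough sketch, the common gross-dies-per-wafer approximation below assumes a standard 300 mm wafer and a hypothetical 400 mm² die, and ignores defects and yield, so its output is an illustrative upper bound rather than a figure for Tesla's chips.

```python
import math

# Rough gross-dies-per-wafer estimate (a common textbook approximation):
#   DPW ≈ pi * d^2 / (4 * A) - pi * d / sqrt(2 * A)
# d = wafer diameter (mm), A = die area (mm^2). The second term approximates
# partial dies lost at the wafer edge; defects and yield are ignored.
WAFER_DIAMETER_MM = 300.0  # standard leading-edge wafer size
DIE_AREA_MM2 = 400.0       # hypothetical die size, not a published figure


def dies_per_wafer(diameter_mm: float, die_area_mm2: float) -> int:
    """Approximate number of whole candidate dies on one wafer."""
    gross = (math.pi * diameter_mm ** 2 / (4 * die_area_mm2)
             - math.pi * diameter_mm / math.sqrt(2 * die_area_mm2))
    return max(0, math.floor(gross))


def dies_per_month(wafers_per_month: int,
                   diameter_mm: float = WAFER_DIAMETER_MM,
                   die_area_mm2: float = DIE_AREA_MM2) -> int:
    """Candidate dies per month, before any yield loss."""
    return wafers_per_month * dies_per_wafer(diameter_mm, die_area_mm2)


if __name__ == "__main__":
    for wpm in (16_000, 17_000):
        print(f"{wpm:,} wafers/month -> about {dies_per_month(wpm):,} candidate dies/month")
```

Under these assumptions each wafer holds about 143 candidate dies, so 16,000 wafers per month would be on the order of 2.3 million dies before yield losses; a smaller die raises that number and a larger one lowers it.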

AI Infrastructure and Applications.

📷 Q: What camera technology does the Optimus bot use? A: Optimus uses car cameras with macro modes for reading small text, supplied by SEMCO (Samsung Electro-Mechanics) and featuring a miniaturized camera assembly with internal movement mechanisms.

Tesla AI and Chip Development.

🧠 Q: How does Tesla’s AI5 chip compare to competitors? A: The AI5 chip is potentially the best inference chip for models under 250 billion parameters, offering the lowest cost and best performance per watt while running milliseconds faster than competitors.

💻 Q: What advantages does Tesla have in chip development? A: Tesla controls its own chip design and silicon talent and is vertically integrated, giving it a significant edge over competitors in AI chip development.

AI and Supercomputing Developments.

🖥️ Q: What is xAI’s Colossus 2 and why does it matter? A: xAI’s Colossus 2 is planned to be the world’s first gigawatt-plus AI training supercomputer, one that Musk says has a non-trivial chance of achieving AGI (artificial general intelligence).

⚡ Q: How does Musk plan to support the power needs of Colossus 2? A: Elon Musk plans to build power plants and battery storage in America to meet the massive power requirements of the AI training supercomputer.
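For a sense of scale, the arithmetic below converts a continuous "gigawatt-plus" power draw into energy per day and per year. The 1 GW figure and the assumption of round-the-clock operation at full load are illustrative simplifications; real facilities vary their load.

```python
# Energy consumed by a constant power draw.
# Illustrative assumption: 24/7 operation at full load, no leap years.
HOURS_PER_DAY = 24
HOURS_PER_YEAR = 365 * HOURS_PER_DAY  # 8,760 h


def energy_gwh(power_gw: float, hours: float) -> float:
    """Energy in gigawatt-hours for a constant power draw in gigawatts."""
    return power_gw * hours


if __name__ == "__main__":
    p = 1.0  # GW, the "gigawatt-plus" threshold
    print(f"per day:  {energy_gwh(p, HOURS_PER_DAY):,.0f} GWh")
    print(f"per year: {energy_gwh(p, HOURS_PER_YEAR) / 1000:,.2f} TWh")
```

A steady 1 GW load comes to 24 GWh per day and about 8.76 TWh per year, and battery storage sized to carry that load for a single hour would need roughly 1 GWh of capacity.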

💰 Q: What is Musk’s prediction for universal high income by 2030? A: Musk believes universal high income will be achieved by then, providing everyone with the best medical care, food, home, transport, and other necessities.

🏭 Q: How does Musk plan to simulate entire companies with AI? A: Musk aims to use AI to simulate entire software companies such as Microsoft. That would be a major jump in AI capabilities, but it is limited to replicating software, not complex physical products.