Scientists hope to accelerate the development of human-level AI using a network of powerful supercomputers — with the first of these machines fully operational by 2025.

Using supercomputers and satellite imagery, the researchers showed our planet breathing.

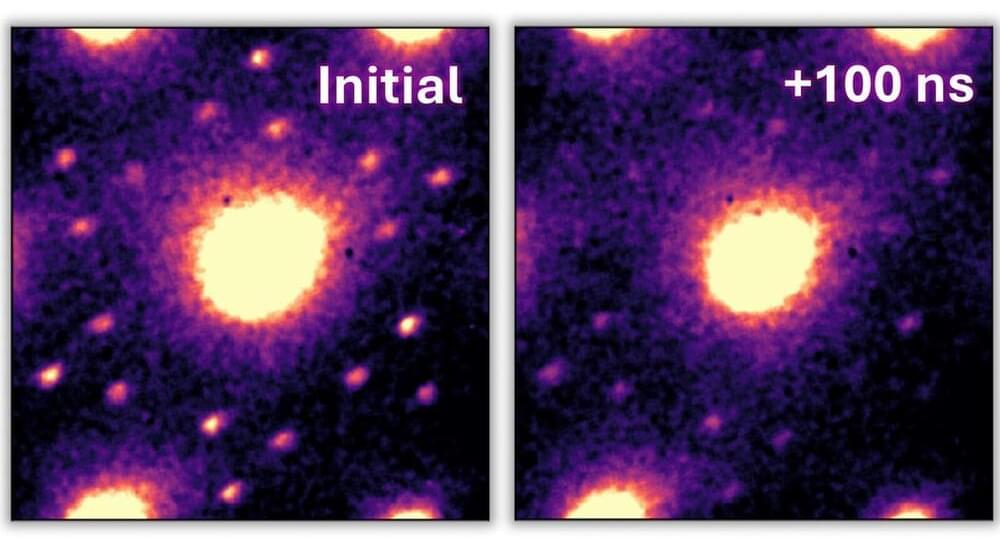

Researchers develop energy-efficient supercomputing with neural networks and charge density waves.

Researchers are creating efficient systems using neural networks and charge density waves to reduce supercomputing’s massive energy use.

As we have alluded to numerous times when talking about the next “AI” trade, data centers will be the “factories of the future” when it comes to the age of AI.

That’s the contention of Chris Miller, the author of Chip War, who penned a recent opinion column for the Financial Times noting that ‘chip wars’ could very soon become ‘cloud wars’.

He points out that the strategic use of high-powered computing dates back to the Cold War, when the US allowed the USSR limited access to supercomputers for weather forecasting but not for nuclear simulations.

Neuromorphic computers are devices that try to achieve reasoning capability by emulating a human brain. They are a different type of computer architecture that copies the physical characteristics and design principles of biological nervous systems. Although neuromorphic computations can be emulated on classical computers, doing so is very inefficient. Typically, new hardware is required.

The first neuromorphic computer at the scale of a full human brain is about to come online. It’s called DeepSouth, and will be finished in April 2024 at Western Sydney University. This computer should enable new research into how our brain actually functions, potentially leading to breakthroughs in how AI is created.

One important characteristic of this neuromorphic computer is that it’s constructed out of commodity hardware. Specifically, it’s built on top of FPGAs. This means it will be much easier for other organizations to copy the design. It also means that once AI starts self-improving, it can probably build new iterations of hardware quite easily. Instead of having to build factories from the ground up, leveraging existing digital technology allows all the existing infrastructure to be reused. This might have implications for how quickly we develop AGI, and how quickly superintelligence arises.

#ai #neuromorphic #computing.

A new supercomputer aims to closely mimic the human brain — it could help unlock the secrets of the mind and advance AI

https://theconversation.com/a-new-sup…

And this shows one of the many ways in which the Economic Singularity is rushing at us. The 🦾🤖 Bots are coming soon to a job near you.

NVIDIA unveiled a suite of services, models, and computing platforms designed to accelerate the development of humanoid robots globally. Key highlights include:

- NVIDIA NIM™ Microservices: These containers, powered by NVIDIA inference software, streamline simulation workflows and reduce deployment times. New AI microservices, MimicGen and Robocasa, enhance generative physical AI in Isaac Sim™, built on NVIDIA Omniverse.

- NVIDIA OSMO Orchestration Service: A cloud-native service that simplifies and scales robotics development workflows, cutting cycle times from months to under a week.

- AI-Enabled Teleoperation Workflow: Demonstrated at #SIGGRAPH2024, this workflow generates synthetic motion and perception data from minimal human demonstrations, saving time and costs in training humanoid robots.

NVIDIA’s comprehensive approach includes building three computers to empower the world’s leading robot manufacturers: NVIDIA AI and DGX to train foundation models, Omniverse to simulate and enhance AIs in a physically based virtual environment, and Jetson Thor, a robot supercomputer. The introduction of NVIDIA NIM microservices for generative AI in robot simulation further accelerates humanoid robot development.

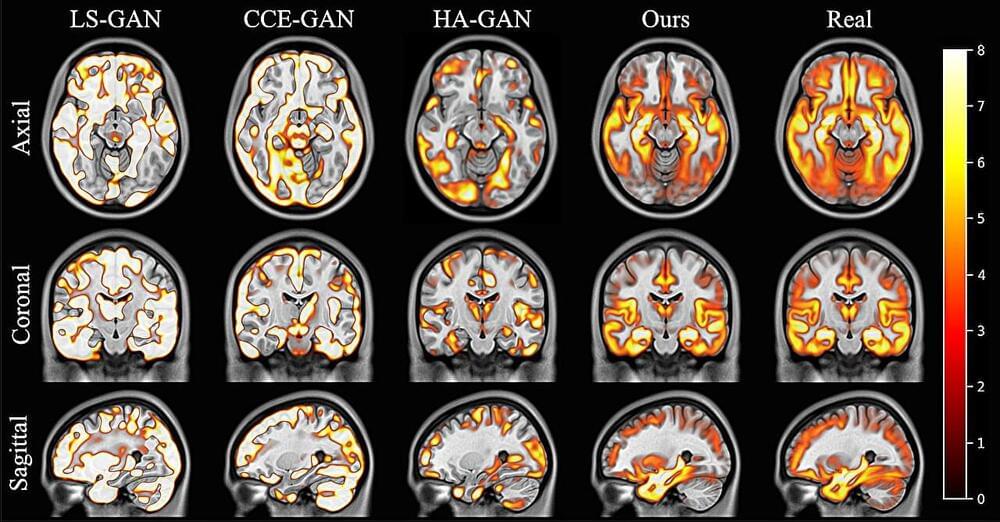

An AI model developed by scientists at King’s College London, in close collaboration with University College London, has produced three-dimensional, synthetic images of the human brain that are realistic and accurate enough to use in medical research.

The model and images have helped scientists better understand what the human brain looks like, supporting research to predict, diagnose and treat brain diseases such as dementia, stroke, and multiple sclerosis.

The algorithm was created using the NVIDIA Cambridge-1, the UK’s most powerful supercomputer. One of the fastest supercomputers in the world, the Cambridge-1 allowed researchers to train the AI in weeks rather than months and produce images of far higher quality.

Working together, the University of Innsbruck and the spin-off AQT have integrated a quantum computer into a high-performance computing (HPC) environment for the first time in Austria. This hybrid infrastructure of supercomputer and quantum computer can now be used to solve complex problems in various fields such as chemistry, materials science or optimization.

Demand for computing power is constantly increasing and the consumption of resources to support these calculations is growing. Processor clock speeds in conventional computers, typically a few GHz, appear to have reached their limit.

Performance improvements over the last 10 years have focused primarily on the parallelization of tasks using multi-core systems, which are operated in HPC centers as fast networked multi-node computing clusters. However, computing power only increases approximately linearly with the number of nodes.

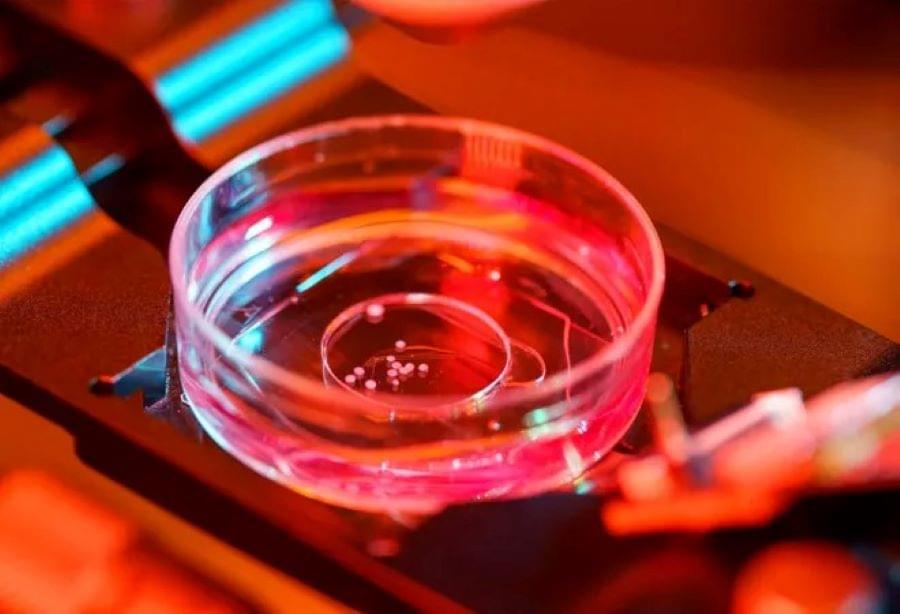

The field of organoid intelligence is recognized as groundbreaking. In this field, scientists utilize human brain cells to enhance computer functionality. They cultivate tissues in laboratories that mimic real organs, particularly the brain. These brain organoids can perform brain-like functions and are being developed by Dr. Thomas Hartung and his team at the Johns Hopkins Bloomberg School of Public Health.

For nearly two decades, scientists have used organoids to conduct experiments without harming humans or animals. Hartung, who has been cultivating brain organoids from human skin samples since 2012, aims to integrate these organoids into computing. This approach promises more energy-efficient computing than current supercomputers and could revolutionize drug testing, improve our understanding of the human brain, and push the boundaries of computing technology.

The research highlights the potential of biocomputing to surpass the limitations of traditional computing and AI. Despite AI’s advancements, it still falls short of the human brain in energy efficiency, learning, and complex decision-making. The human brain’s capacity for information storage and its energy efficiency remain unmatched by modern computers. Hartung’s work with brain organoids, inspired by Nobel Prize-winning stem cell research, aims to replicate cognitive functions in the lab. This research could open new avenues for understanding the human brain by allowing ethical experimentation. The team envisions scaling up the size of brain organoids and developing communication tools for input and output, enabling more complex tasks.