Questions to inspire discussion.

Data and Autonomy.

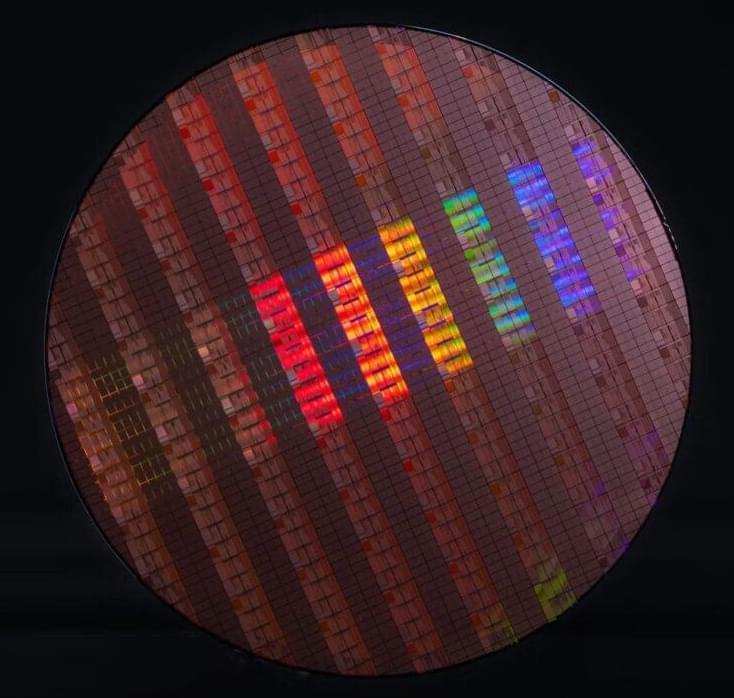

📊 Q: Why is vision data valuable in AI development? A: Vision data is worth something only if you can collect it and process it at enormous scale, on the order of yottaflops of compute. Without the ability to collect it, it is essentially worthless. The world's visual data is therefore valuable only to those who can both collect and process it.

🚗 Q: How does solving autonomy relate to AI development? A: Solving autonomy is crucial, and it requires vast amounts of real-world data. Gathering that data requires large numbers of robots operating in the real world. This creates a cycle in which data collection improves the AI and the improved AI drives further data collection.

Company-Specific Opportunities.

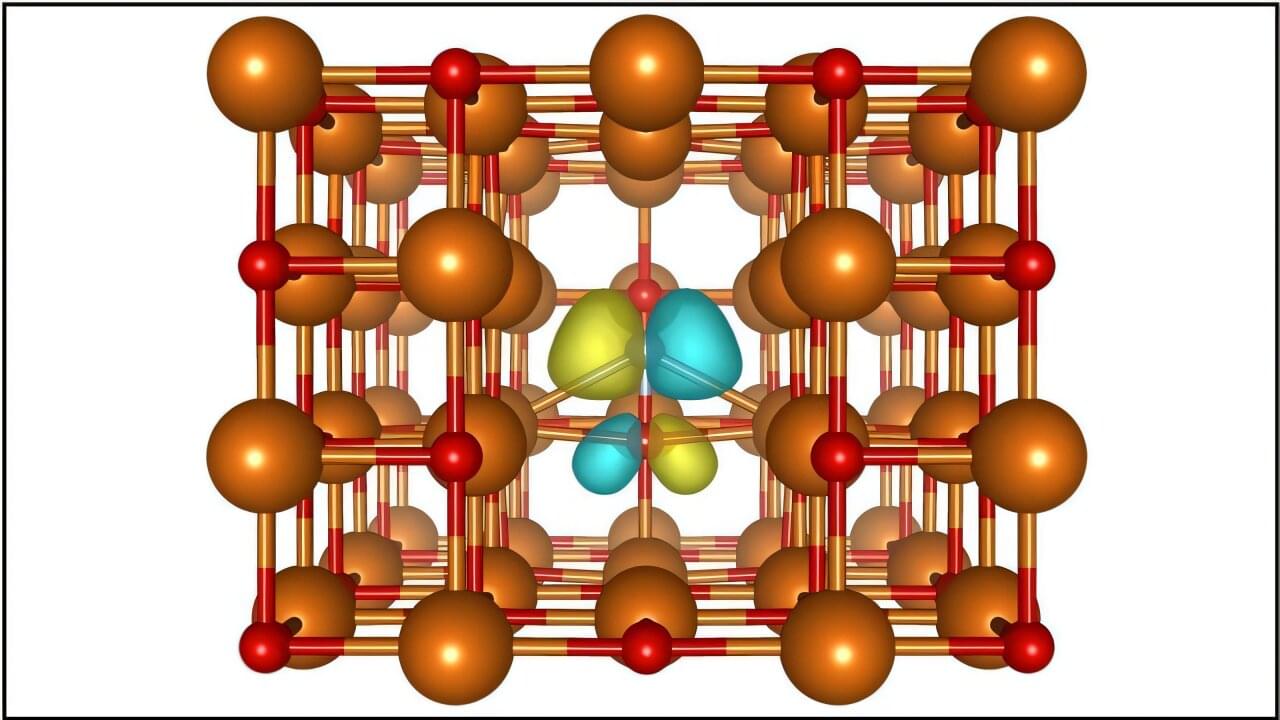

🔋 Q: What advantage does Tesla have in developing humanoid robots? A: Tesla has essentially already built the robot's brain in its vehicles. It can transplant this brain into humanoid robots, which gives it a massive head start in development.