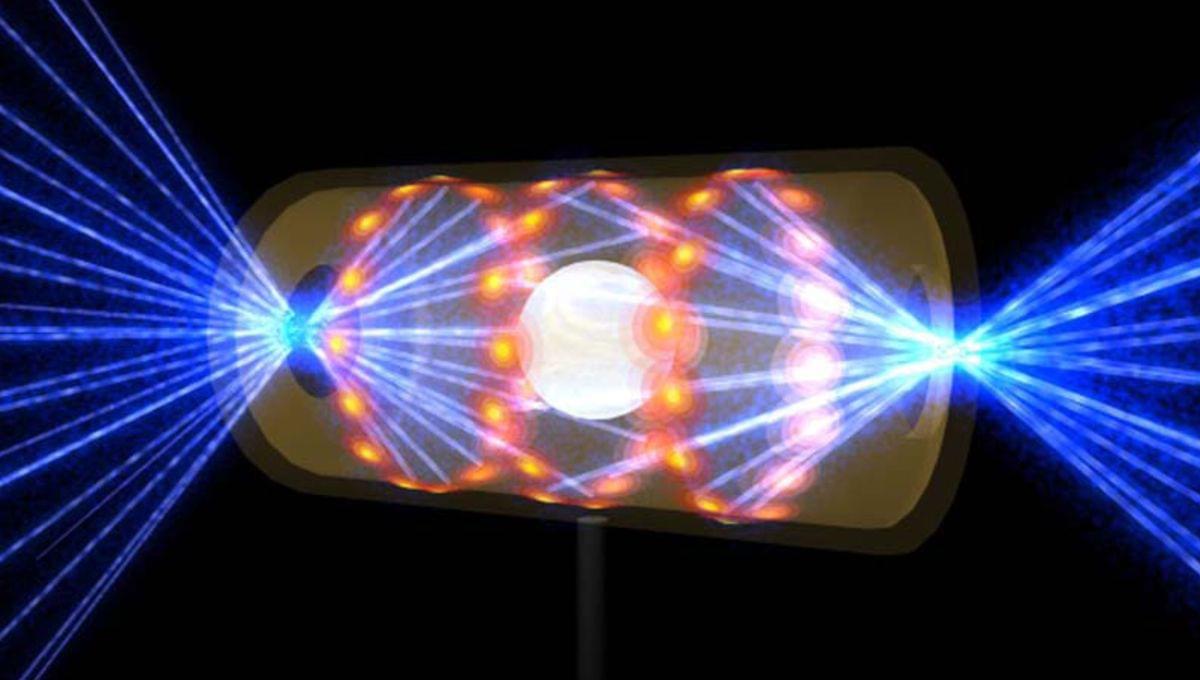

Practical fusion power that can provide cheap, clean energy could be a step closer thanks to artificial intelligence. Scientists at Lawrence Livermore National Laboratory have developed a deep learning model that accurately predicted the results of a nuclear fusion experiment conducted in 2022. Accurate predictions can help speed up the design of new experiments and accelerate the quest for this virtually limitless energy source.

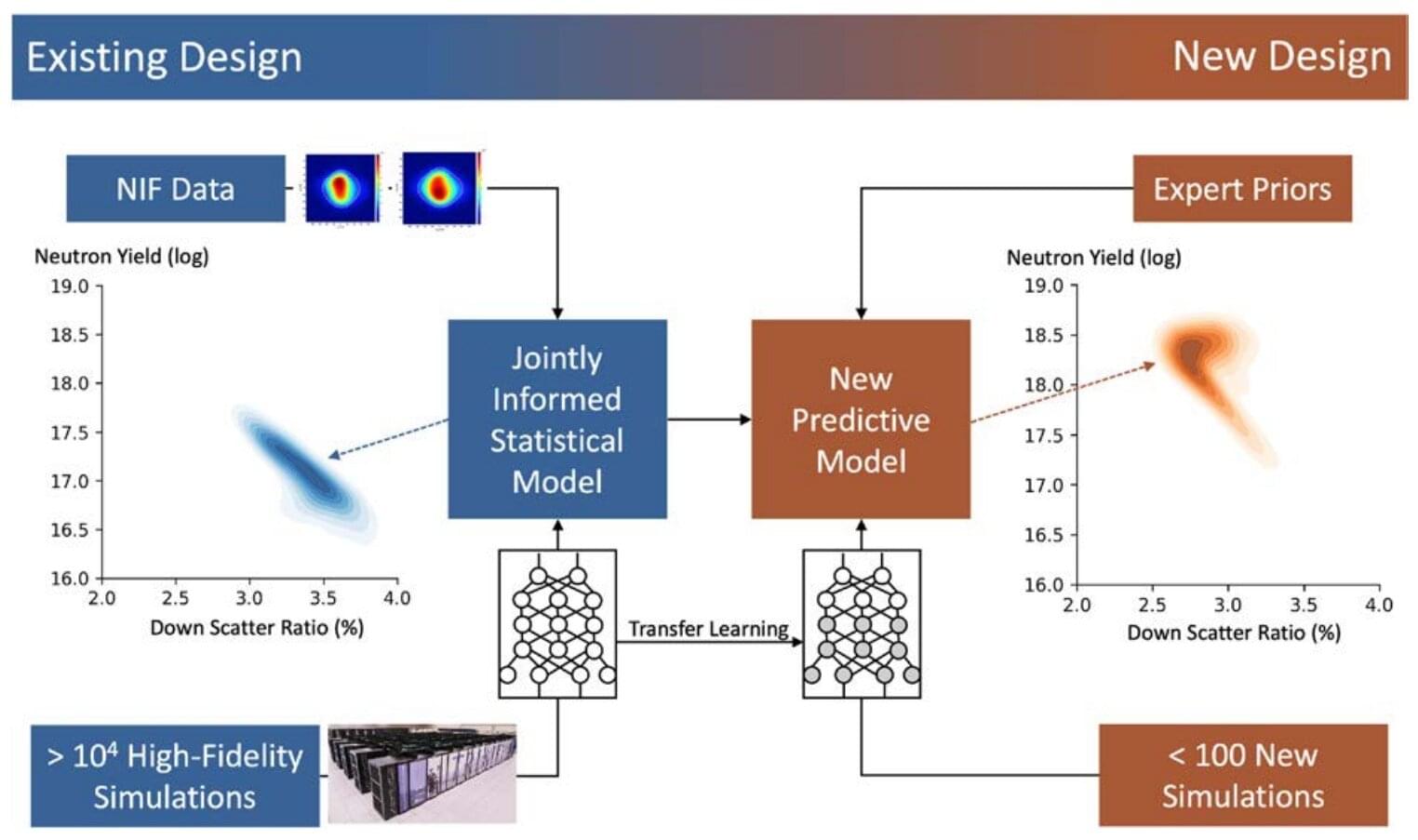

In a paper published in Science, the researchers describe how their AI model assigned a 74% probability of ignition to a small 2022 fusion experiment at the National Ignition Facility (NIF). This is a significant advance because the model covered more parameters, with greater precision, than traditional supercomputer simulations.

Currently, nuclear power comes from nuclear fission, which generates energy by splitting heavy atomic nuclei. However, fission produces radioactive waste that remains dangerous for thousands of years. Fusion instead generates energy by fusing light nuclei together, the same process that powers the sun. It is safer and does not produce long-lived radioactive waste. Although fusion is a promising energy source, it is still a long way from being a viable commercial technology.