Over the past 50 years, geographers have embraced each new technological shift in geographic information systems (GIS), the technology that turns location data into maps and insights about how places and people interact. First came the computer boom, then the rise of the internet and data sharing through web-based GIS, and later the emergence of smartphone data and cloud-based GIS.

Now, another paradigm shift is transforming the field: the advent of artificial intelligence (AI) as an independent “agent” capable of performing GIS functions with minimal human oversight.

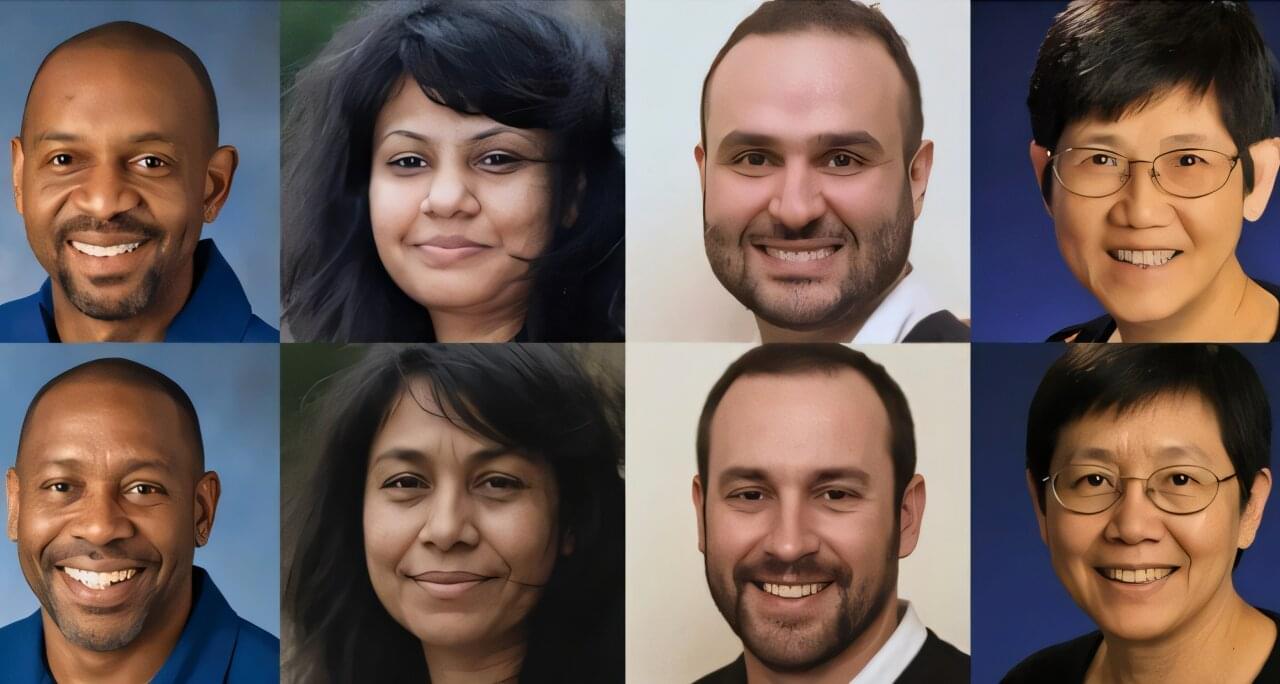

In a study published in Annals of GIS, a multi-institutional team led by geography researchers at Penn State built and tested four AI agents to introduce a conceptual framework for autonomous GIS and to examine how this shift is redefining the practice of GIS.