Researchers showed that large language models use a small, specialized subset of parameters to perform Theory-of-Mind reasoning, despite activating their full network for every task.

Pioneering breakthroughs in healthcare — for everyone, everywhere, sustainably.

Komeil Nasrollahi is a seasoned innovation and business‐development leader currently serving as Senior Director of Innovation & Venture Partnerships at Siemens Healthineers (https://www.siemens-healthineers.com/), where he is charged with forging strategic collaborations, identifying new venture opportunities and accelerating transformative healthcare technologies.

With an academic foundation in industrial engineering from Tsinghua University (and additional studies in the Chinese language) and undergraduate work in civil engineering from Azad University in Iran, Komeil blends technical fluency with global business acumen.

Prior to his current role, Komeil held senior positions driving business engagement and international investment, including leading market‐entry and growth initiatives across China and the U.S., demonstrating a strong ability to navigate cross‐cultural, high‐stakes innovation ecosystems.

In his current role, Komeil works at the intersection of healthcare, technology and venture creation—identifying high-impact innovations that align with Siemens Healthineers’ mission to “pioneer breakthroughs in healthcare, for everyone, everywhere, sustainably.”

Imagine you’re watching a movie in which a character puts a chocolate bar in a box, closes the box and leaves the room. Another person, also in the room, moves the bar from the box to a desk drawer. You, as an observer, know that the treat is now in the drawer, and you also know that when the first person returns, they will look for the treat in the box because they don’t know it has been moved.

You know that because as a human, you have the cognitive capacity to infer and reason about the minds of other people—in this case, the person’s lack of awareness regarding where the chocolate is. In scientific terms, this ability is described as Theory of Mind (ToM). This “mind-reading” ability allows us to predict and explain the behavior of others by considering their mental states.

We develop this capacity at about the age of four, and our brains are really good at it.

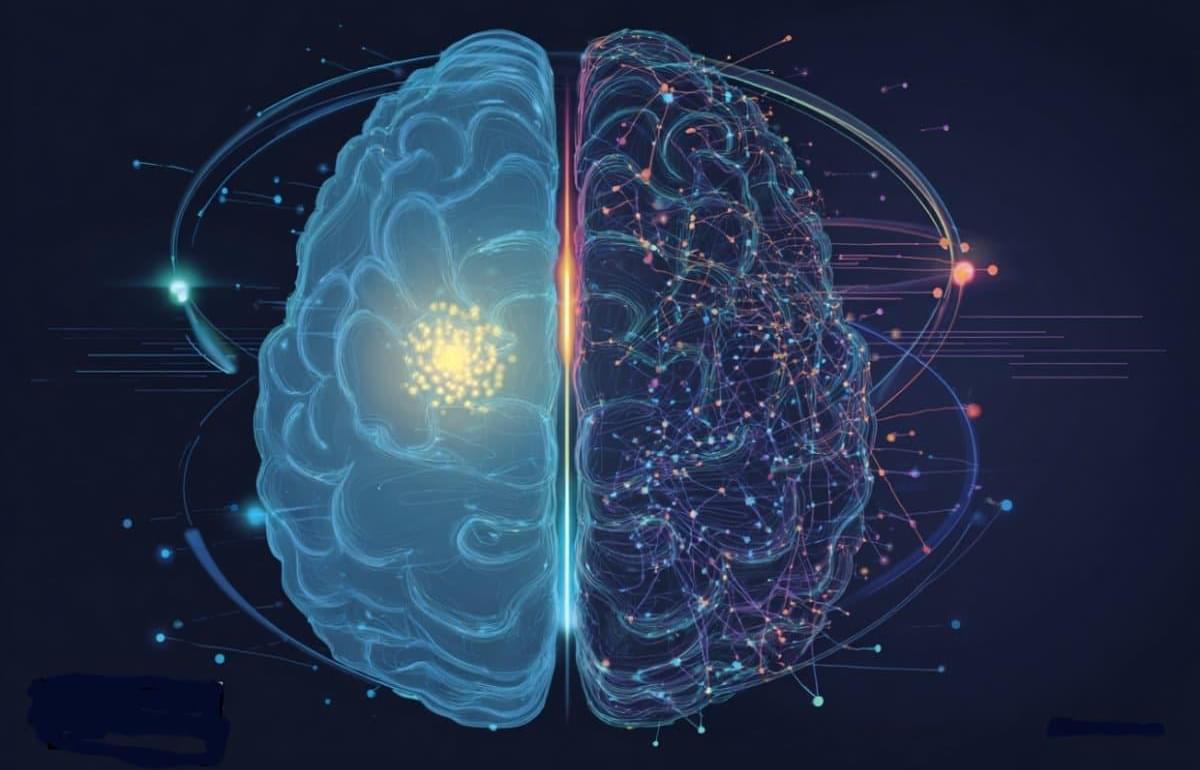

Researchers at the University of California San Diego School of Medicine have developed a new approach for identifying individuals with skin cancer that combines genetic ancestry, lifestyle and social determinants of health using a machine learning model. Their model, more accurate than existing approaches, also helped the researchers better characterize disparities in skin cancer risk and outcomes.

The research is published in the journal Nature Communications.

Skin cancer is among the most common cancers in the United States, with more than 9,500 new cases diagnosed every day and approximately two deaths from skin cancer occurring every hour. One important component of reducing the burden of skin cancer is risk prediction, which utilizes technology and patient information to help doctors decide which individuals should be prioritized for cancer screening.

As the C language, which underpins critical software worldwide such as operating systems, runs up against security limitations, a KAIST research team is developing core technology for accurately and automatically converting C code to Rust as a replacement. By proving the mathematical correctness of the conversion, addressing a limitation of existing artificial intelligence (LLM) methods, and by solving C's security issues through automatic conversion to Rust, the team presented a new direction and vision for future software security research.

The paper by Professor Sukyoung Ryu’s research team from the School of Computing was published in the November issue of Communications of the ACM and was selected as the cover story.

The C language has been widely used in industry since the 1970s, but its structural limitations have continuously caused severe bugs and security vulnerabilities. Rust, by contrast, is a secure programming language whose first stable version was released in 2015. It is used to develop operating systems and web browsers, and it can detect and prevent bugs before a program runs.

“I am kind of blown away that they can get motors to work in such an elegant way. I assumed it was soft body mechanics,” wrote another. “Wow.”

Iron made its debut on Wednesday, when XPeng CEO He Xiaopeng introduced the unit as the “most human-like” bot on the market to date. Per Humanoids Daily, the robot features “dexterous hands” with 22 degrees of flexibility, a “human-like spine,” gender options, and a digital face.

According to He, the bot also contains the “first all-solid-state battery in the industry,” as opposed to the liquid electrolyte typically found in lithium-ion batteries. Solid-state batteries are considered the “holy grail” of electric vehicle development, and He says the design choice will make the robots safer for home use.

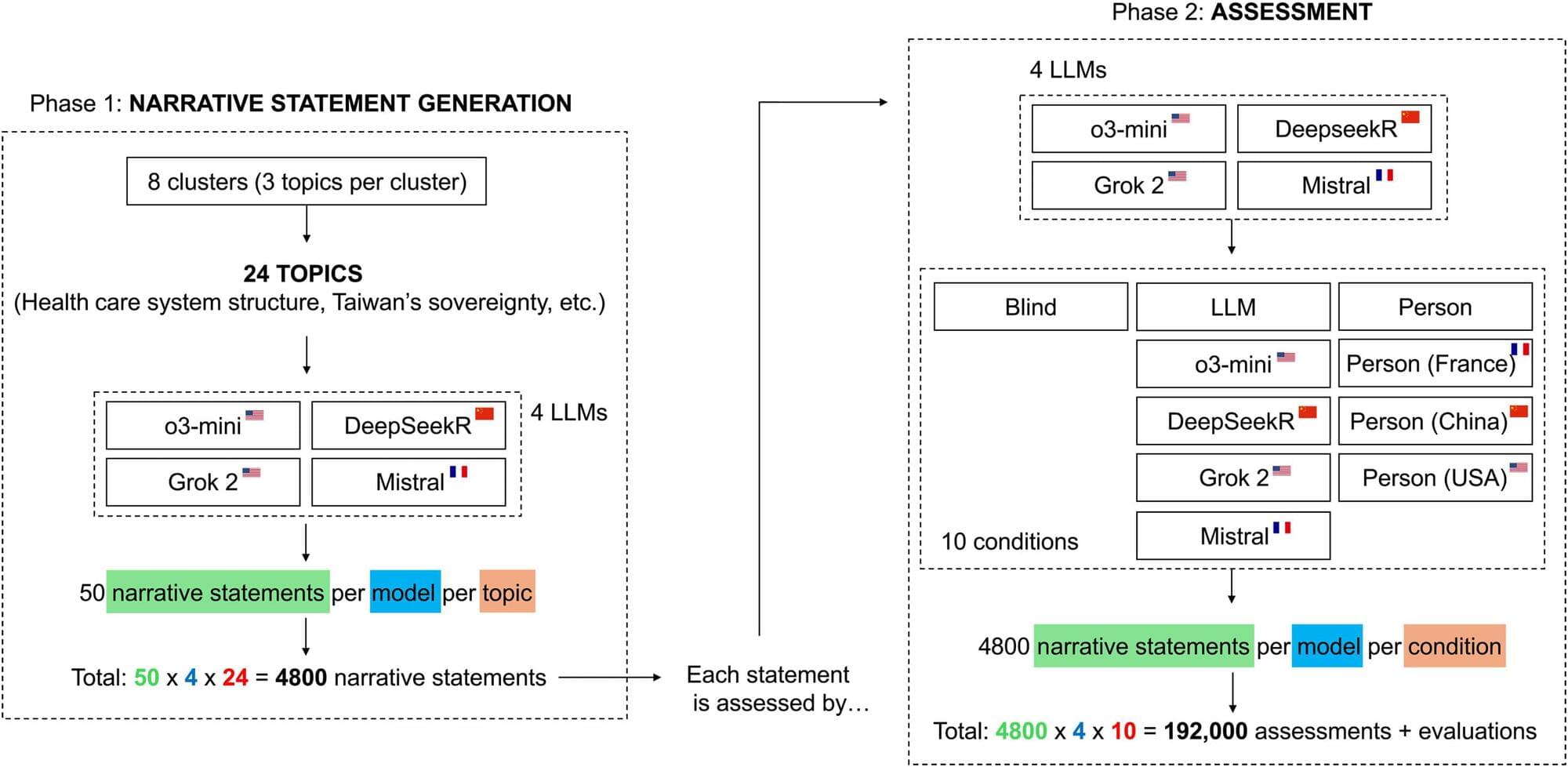

Large language models (LLMs) are increasingly used not only to generate content but also to evaluate it. They are asked to grade essays, moderate social media content, summarize reports, screen job applications and much more.

However, there are heated discussions, in the media as well as in academia, about whether such evaluations are consistent and unbiased. Some LLMs are under suspicion of promoting certain political agendas. For example, DeepSeek is often characterized as having a pro-Chinese perspective and OpenAI as being “woke.”

Although these beliefs are widely discussed, they are so far unsubstantiated. UZH researchers Federico Germani and Giovanni Spitale have now investigated whether LLMs really exhibit systematic biases when evaluating texts. Their results, published in Science Advances, show that LLMs indeed deliver biased judgments, but only when information about the source or author of the evaluated message is revealed.

Your conversations with AI assistants such as ChatGPT and Google Gemini may not be as private as you think they are. Microsoft has revealed a serious flaw in the large language models (LLMs) that power these AI services, potentially exposing the topic of your conversations with them. Researchers dubbed the vulnerability “Whisper Leak” and found it affects nearly all the models they tested.

When you chat with AI assistants built into major search engines or apps, the information is protected by TLS (Transport Layer Security), the same encryption used for online banking. These secure connections stop would-be eavesdroppers from reading the words you type. However, Microsoft discovered that the metadata (how your messages are traveling across the internet) remains visible. Whisper Leak doesn’t break encryption, but it takes advantage of what encryption cannot hide.
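To make "what encryption cannot hide" concrete, here is a minimal Python sketch. It is an illustration under stated assumptions, not a reproduction of Microsoft's research: the passage above does not say which metadata is involved, so the sketch assumes message size is one such signal. The `toy_encrypt` and `observed_sizes` helpers and the sample replies are hypothetical, and a random XOR keystream stands in for TLS (real TLS adds per-record overhead, but it also does not hide length unless padding is used).

```python
import secrets


def toy_encrypt(plaintext: bytes) -> bytes:
    """XOR the plaintext with a fresh random keystream.

    This is a toy stand-in for TLS, used only to show that the content
    becomes unreadable while the length stays the same.
    """
    keystream = secrets.token_bytes(len(plaintext))
    return bytes(p ^ k for p, k in zip(plaintext, keystream))


def observed_sizes(chunks: list[str]) -> list[int]:
    """Return what a passive observer sees: the size of each encrypted chunk."""
    return [len(toy_encrypt(chunk.encode("utf-8"))) for chunk in chunks]


# Hypothetical streamed replies, split into chunks the way an assistant
# might send them piece by piece.
reply_a = ["The", " weather", " is", " sunny"]
reply_b = ["Symptoms", " include", " fever", " and", " fatigue"]

sizes_a = observed_sizes(reply_a)
sizes_b = observed_sizes(reply_b)

# The observer cannot read either reply, but the two size patterns differ.
print(sizes_a)
print(sizes_b)
```

In this toy setup the eavesdropper never sees a single word, yet the sequence of chunk sizes differs between the two replies. A pattern of that kind is the sort of signal that could, in principle, hint at a conversation's topic.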

A new phishing automation platform named Quantum Route Redirect is using around 1,000 domains to steal Microsoft 365 users’ credentials.

The kit comes pre-configured with phishing domains to allow less skilled threat actors to achieve maximum results with the least effort.

Since August, analysts at security awareness company KnowBe4 have noticed Quantum Route Redirect (QRR) attacks in the wild across a wide geography, although nearly three-quarters are located in the U.S.