The wars in Ukraine and Gaza have shown the world that a new technology is now affecting the battlefield: artificial intelligence (AI). The Ukrainian and Russian armies are using AI to help locate and identify targets, pilot drones, and support tactical decision-making.

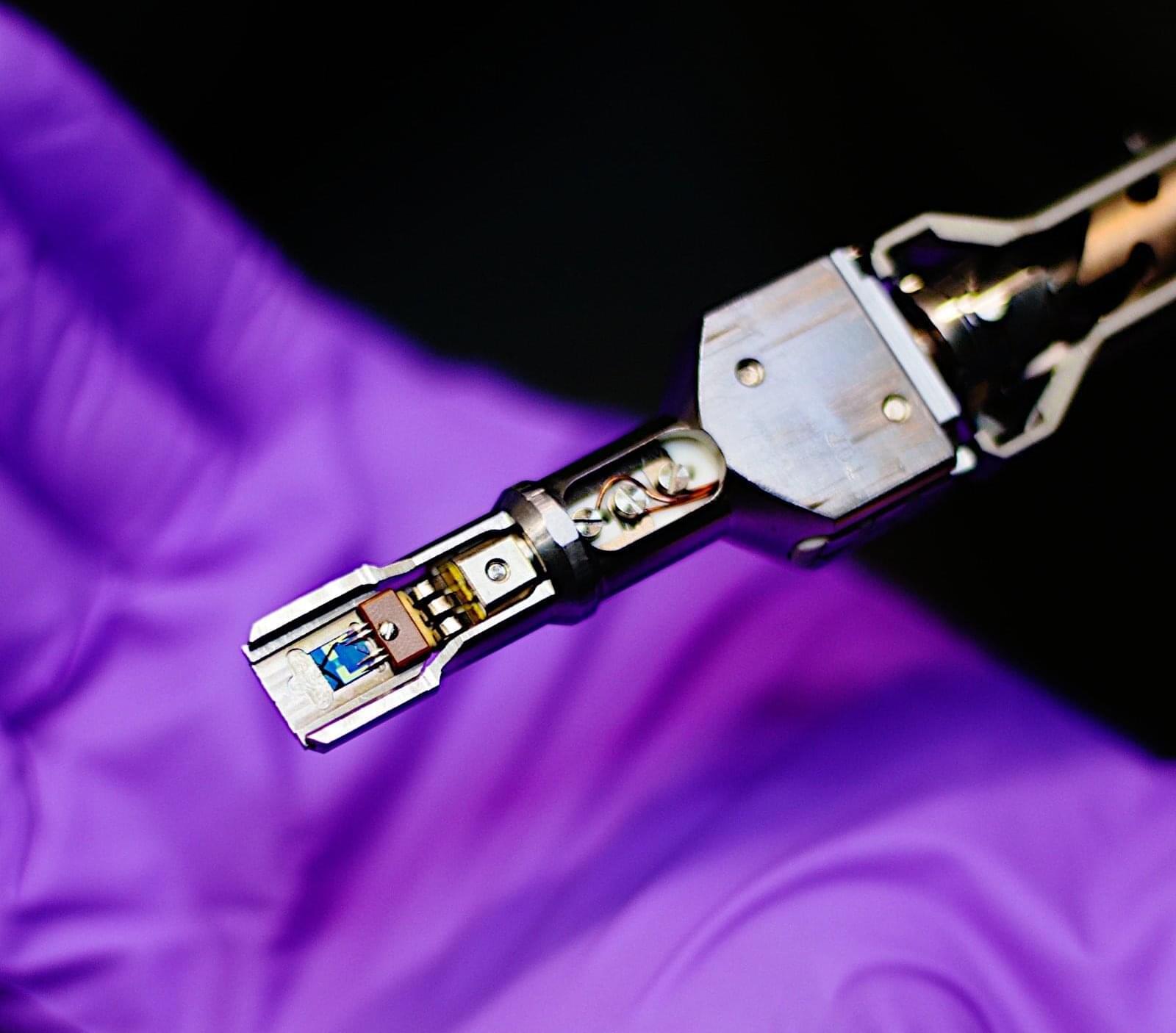

The hexatic phase: Ultra-thin 2D materials in a state between solid and liquid observed for the first time

When ice melts into water, the transition from solid to liquid is abrupt. Very thin materials, however, do not follow this rule. Instead, an unusual state between solid and liquid arises: the hexatic phase. Researchers at the University of Vienna have now succeeded in directly observing this exotic phase in an atomically thin crystal.

Using state-of-the-art electron microscopy and neural networks, they filmed a silver iodide crystal protected by graphene as it melted. Ultra-thin, two-dimensional materials enabled researchers to directly observe atomic-scale melting processes. The new findings significantly advance the understanding of these phase transitions. Surprisingly, the observations contradict previous predictions—a result now published in Science.

The sudden transition in melting ice is typical of the melting behavior of all three-dimensional materials, from metals and minerals to frozen drinks. However, when a material becomes so thin that it is practically two-dimensional, the rules of melting change dramatically. Between the solid and liquid phases, a new, exotic intermediate phase of matter can arise, known as the “hexatic phase.”
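
The article does not say how the Vienna team quantified the phase. As a rough illustration only, a standard textbook measure of sixfold orientational order is the local bond-orientational order parameter, ψ6, which averages exp(6iθ) over the bond angles θ from a particle to its neighbors. The sketch below uses hypothetical helper names (`psi6`, `ring`) and idealized neighbor positions, not data from the study.

```python
import cmath
import math


def psi6(center, neighbors):
    """Local sixfold bond-orientational order parameter for one particle.

    Averages exp(6i * theta) over the bond angles theta from `center`
    to each neighbor. The magnitude is near 1 for ideal sixfold order.
    """
    if not neighbors:
        raise ValueError("at least one neighbor is required")
    cx, cy = center
    total = 0j
    for nx, ny in neighbors:
        theta = math.atan2(ny - cy, nx - cx)  # bond angle to this neighbor
        total += cmath.exp(6j * theta)
    return total / len(neighbors)


def ring(n, radius=1.0):
    """n points evenly spaced on a circle around the origin (illustrative helper)."""
    return [
        (radius * math.cos(2 * math.pi * k / n),
         radius * math.sin(2 * math.pi * k / n))
        for k in range(n)
    ]


# Six evenly spaced neighbors: ideal sixfold order, magnitude close to 1.
hexagonal = abs(psi6((0.0, 0.0), ring(6)))
# Four evenly spaced neighbors: the sixfold terms cancel, magnitude close to 0.
square = abs(psi6((0.0, 0.0), ring(4)))
```

In the usual theoretical picture, a hexatic phase keeps slowly decaying orientational order of this kind while losing the long-range positional order of a crystal. Measuring that on real microscope images would also require a neighbor-finding step, which is omitted here.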

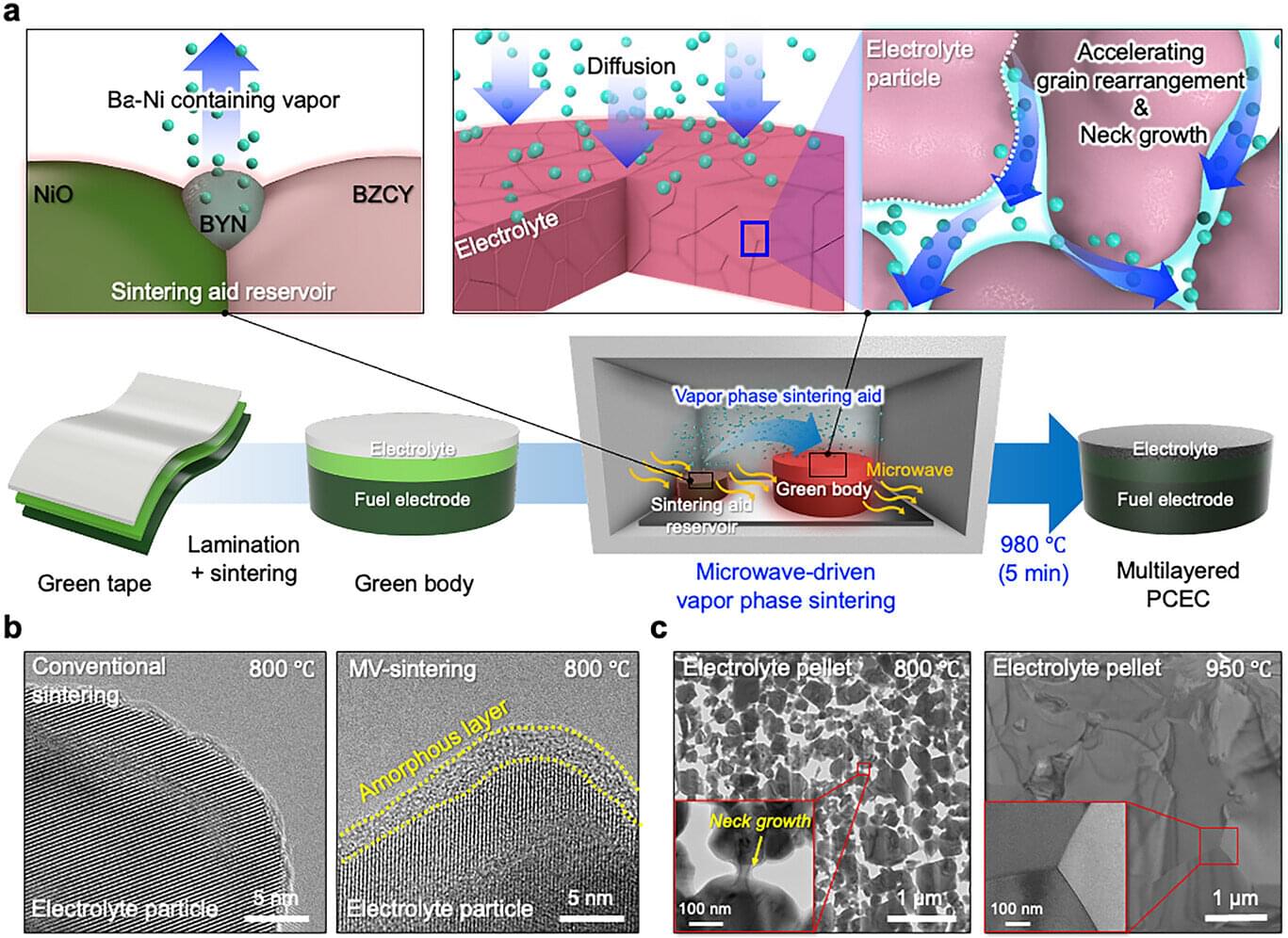

Ceramic electrochemical cell production temperature drops by over 500°C with new method

As power demand surges in the AI era, the protonic ceramic electrochemical cell (PCEC), which can simultaneously produce electricity and hydrogen, is gaining attention as a next-generation energy technology. However, this cell has faced the technical limitation of requiring an ultra-high production temperature of 1,500°C.

A KAIST research team has succeeded in establishing a new manufacturing process that lowers this limit by more than 500°C for the first time.

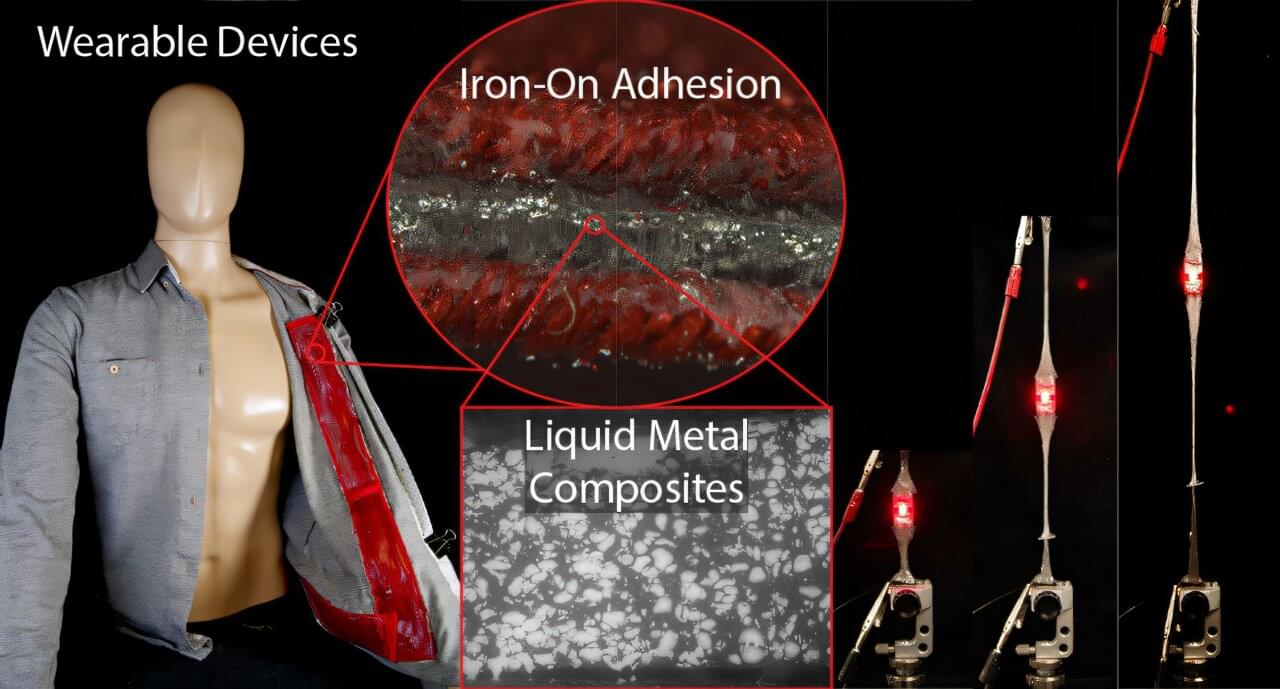

Iron-on electronic patches enable easy integration of circuits into fabrics

Iron-on patches can repair clothing or add personal flair to backpacks and hats. And now they could power wearable tech, too. Researchers reporting in ACS Applied Materials & Interfaces have combined liquid metal and a heat-activated adhesive to create an electrically conductive patch that bonds to fabric when heated with a hot iron. In demonstrations, the researchers ironed circuits onto a square of fabric to light up LEDs and attached an iron-on microphone to a button-up shirt.

“E-textiles and wearable electronics can enable diverse applications from health care and environmental monitoring to robotics and human-machine interfaces. Our work advances this exciting area by creating iron-on soft electronics that can be rapidly and robustly integrated into a wide range of fabrics,” says Michael D. Bartlett, a researcher at Virginia Tech and corresponding author on the study.

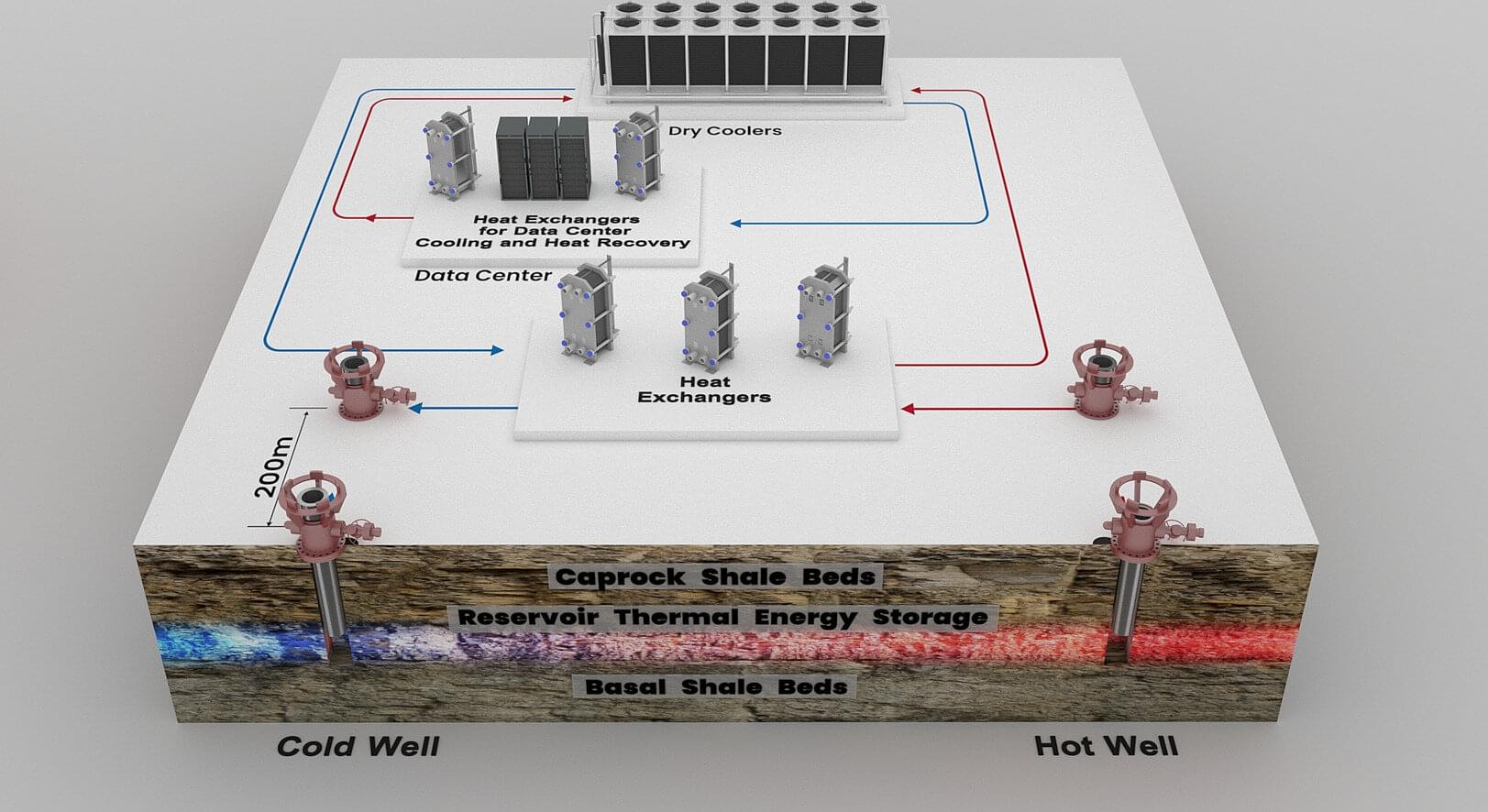

Reservoir thermal energy storage offers efficient cooling for data centers

The rise of artificial intelligence, cloud platforms, and data processing is driving a steady increase in global data center electricity consumption. While running computer servers accounts for the largest share of data center energy use, cooling systems come in second—but a new study by researchers at the National Laboratory of the Rockies (NLR), formerly known as NREL, offers a potential solution to reduce peak energy consumption.

Published in Applied Energy, a techno-economic analysis led by Hyunjun Oh, David Sickinger, and Diana Acero-Allard—researchers in NLR’s energy storage and computational science groups—has demonstrated a system to cool data centers more efficiently and cost-effectively.

The approach, called reservoir thermal energy storage (RTES), stores cold energy underground, then uses it to cool facilities during peak-demand periods.

Memories and ideas are living organisms | Michael Levin and Lex Fridman

Lex Fridman Podcast full episode: https://www.youtube.com/watch?v=Qp0rCU49lMs.

*GUEST BIO:*

Michael Levin is a biologist at Tufts University working on novel ways to understand and control complex pattern formation in biological systems.

*EPISODE LINKS:*

Michael Levin’s X: https://twitter.com/drmichaellevin.

Michael Levin’s Website: https://drmichaellevin.org.

Michael Levin’s Papers: https://drmichaellevin.org/publications/

- Biological Robots: https://arxiv.org/abs/2207.00880

- Classical Sorting Algorithms: https://arxiv.org/abs/2401.05375

- Aging as a Morphostasis Defect: https://pubmed.ncbi.nlm.nih.gov/38636560/

- TAME: https://arxiv.org/abs/2201.10346

- Synthetic Living Machines: https://www.science.org/doi/10.1126/scirobotics.abf1571

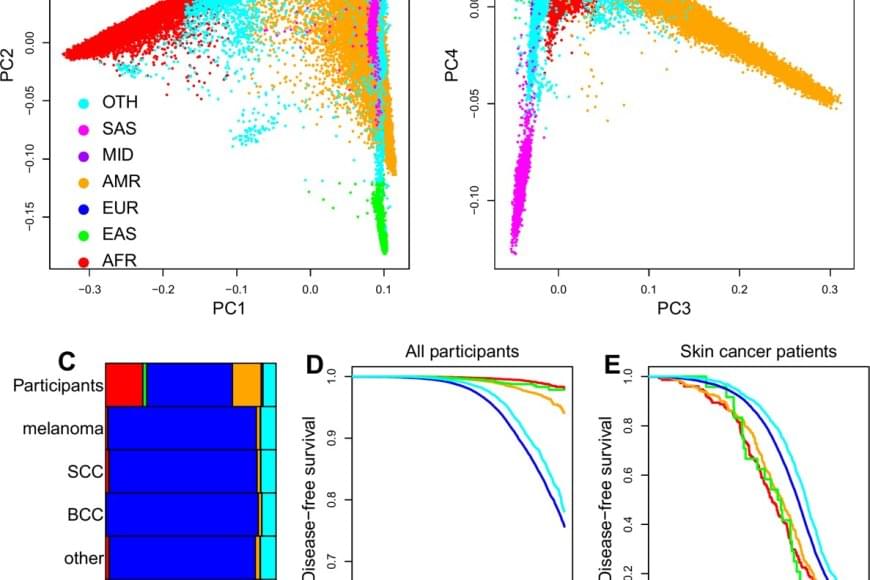

AI model to detect skin cancer

Researchers have developed a new approach for identifying individuals with skin cancer that combines genetic ancestry, lifestyle and social determinants of health using a machine learning model. Their model, more accurate than existing approaches, also helped the researchers better characterize disparities in skin cancer risk and outcomes.

Skin cancer is among the most common cancers in the United States, with more than 9,500 new cases diagnosed every day and approximately two deaths from skin cancer occurring every hour. One important component of reducing the burden of skin cancer is risk prediction, which utilizes technology and patient information to help doctors decide which individuals should be prioritized for cancer screening.

Traditional risk prediction tools, such as risk calculators based on family history, skin type and sun exposure, have historically performed best in people of European ancestry because this group is better represented in the data used to develop these models. This leaves significant gaps in early detection for other populations, particularly those with darker skin, who are less likely to be of European ancestry. As a result, skin cancer in people of non-European ancestry is frequently diagnosed at later stages, when it is more difficult to treat, and these patients tend to have worse overall outcomes.

NVIDIA Awards up to $60,000 Research Fellowships to PhD Students

For 25 years, the NVIDIA Graduate Fellowship Program has supported graduate students doing outstanding work relevant to NVIDIA technologies. Today, the program announced the latest awards of up to $60,000 each to 10 Ph.D. students involved in research that spans all areas of computing innovation.

Selected from a highly competitive applicant pool, the awardees will participate in a summer internship preceding the fellowship year. Their work puts them at the forefront of accelerated computing — tackling projects in autonomous systems, computer architecture, computer graphics, deep learning, programming systems, robotics and security.

The NVIDIA Graduate Fellowship Program is open to applicants worldwide.