A strange new robot from South Korea could be the key to exploring parts of the Moon no one has ever reached, and it’s built to survive the impossible.

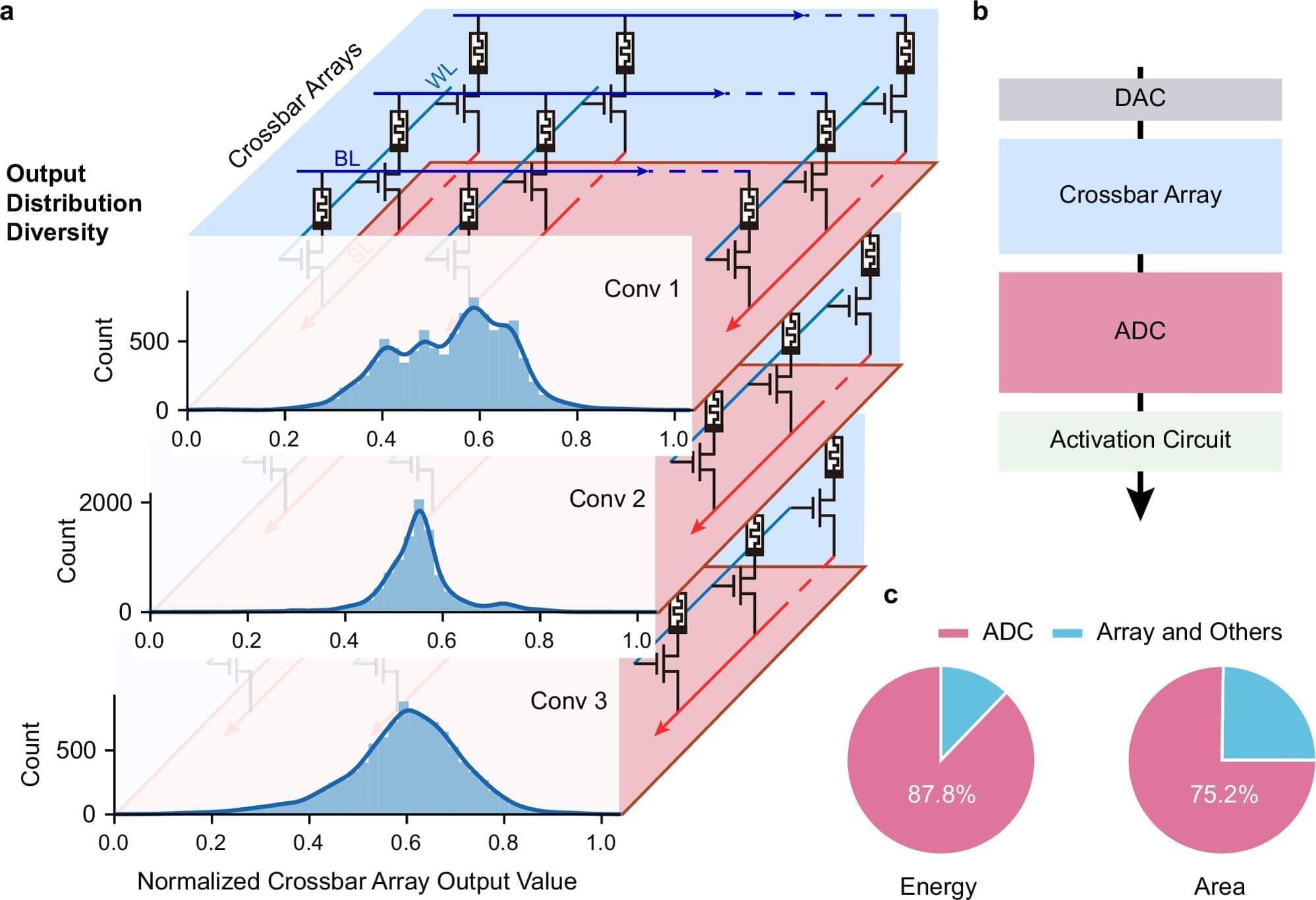

A cross-institutional team led by researchers from the Department of Electrical and Electronic Engineering (EEE), under the Faculty of Engineering at The University of Hong Kong (HKU), has achieved a major breakthrough in the field of artificial intelligence (AI) hardware by developing a new type of analog-to-digital converter (ADC) that uses innovative memristor technology. The work is published in Nature Communications.

Challenges with conventional AI hardware

Conventional AI accelerators face challenges because the essential components that convert analog signals into digital form are often bulky and power-consuming. Led by Professor Ngai Wong, Professor Can Li and Dr. Zhengwu Liu of HKU EEE, in collaboration with researchers from Xidian University and the Hong Kong University of Science and Technology, the cross-disciplinary research team developed a new type of ADC that uses innovative memristor technology. This new converter can process signals more efficiently and accurately, paving the way for faster, more energy-efficient AI chips.

Adaptive system and efficiency gains

The research team created an adaptive system that automatically adjusts its settings based on the data it receives, i.e., dynamically fine-tuning how signals are converted. This results in a 15.1× improvement in energy efficiency and a 12.9× reduction in circuit area compared with state-of-the-art solutions.
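To give a rough feel for why data-adaptive conversion can help, the sketch below compares a fixed, evenly spaced quantizer with one whose levels are fitted to the observed signal. It is a software illustration only and not the team's memristor circuit: the quantile-based rule, the function names, and the synthetic Gaussian signal are all assumptions made for this example.

```python
import random


def uniform_levels(lo, hi, bits):
    """Evenly spaced reconstruction levels across the full range [lo, hi]."""
    n = 2 ** bits
    step = (hi - lo) / n
    return [lo + (i + 0.5) * step for i in range(n)]


def adaptive_levels(samples, bits):
    """Hypothetical adaptive rule: one level per equal-count slice of the data.

    Each level is the mean of its slice, so levels cluster where the
    signal actually spends its time.
    """
    n = 2 ** bits
    ordered = sorted(samples)
    size = len(ordered)
    levels = []
    for i in range(n):
        chunk = ordered[i * size // n:(i + 1) * size // n]
        levels.append(sum(chunk) / len(chunk))
    return levels


def quantize(x, levels):
    """Map x to the nearest available level."""
    return min(levels, key=lambda v: abs(v - x))


def mean_squared_error(samples, levels):
    """Average squared conversion error over all samples."""
    return sum((x - quantize(x, levels)) ** 2 for x in samples) / len(samples)


# Synthetic signal (an assumption): small values inside a wide [-1, 1] range.
random.seed(0)
signal = [max(-1.0, min(1.0, random.gauss(0.0, 0.1))) for _ in range(2000)]

uniform_error = mean_squared_error(signal, uniform_levels(-1.0, 1.0, 3))
adaptive_error = mean_squared_error(signal, adaptive_levels(signal, 3))
print(f"uniform 3-bit error:  {uniform_error:.5f}")
print(f"adaptive 3-bit error: {adaptive_error:.5f}")
```

With the same 3-bit budget, the fitted levels waste none of their resolution on parts of the range the signal rarely visits. The reported energy and area gains come from the hardware design itself, which this sketch does not model.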

Help us help the kids of Ukraine to dream about the future.

Space, hope, and a great cause on the side of freedom. Follow the links. Make the donation, put a telescope, model rocket or robot in the hands of a kid who very badly needs Permission to Dream!

Space4 Ukraine empowers young Ukrainians whose education has been disrupted by war, giving them access to the tools, training, and opportunities they need to rebuild their future—and ours. Because no human potential should ever be wasted.

npj Computational Materials, Article number: (2025)

We are providing an unedited version of this manuscript to give early access to its findings. Before final publication, the manuscript will undergo further editing. Please note there may be errors present which affect the content, and all legal disclaimers apply.

Automation and robotics, particularly with the integration of AI, are transforming industries and are poised to significantly affect the workforce, but they are likely to lead to reduced work hours and increased productivity rather than total job destruction.

## Questions to inspire discussion.

Investment & Market Opportunity.

🤖 Q: What is the revenue potential for robotics by 2025? A: ARK Invest projects a $26 trillion global revenue opportunity across household and manufacturing robotics by 2025, driven by convergence of humanoid robots, AI, and computer vision technologies.

💰 Q: How should companies evaluate robot ROI for deployment? A: Robots are worth paying for when task-specific capabilities deliver 2–10% productivity gains; unlike autonomous vehicles, they do not need to perform a full job. Roomba succeeded despite early limitations by being novel and saving time on specific tasks.

Implementation Strategy.

Social media posts about unemployment can predict official jobless claims up to two weeks before government data is released, according to a study. Unemployment can be tough, and people often post about it online.

Researcher Sam Fraiberger and colleagues recently developed an artificial intelligence model that identifies unemployment disclosures on social media. The work is published in the journal PNAS Nexus.

Data from 31.5 million Twitter users posting between 2020 and 2022 was used to train a transformer-based classifier called JoblessBERT to detect unemployment-related posts, even those that featured slang or misspellings, such as “I needa job!” The authors used demographic adjustments to account for Twitter’s non-representative user base, then forecast US unemployment insurance claims at national, state, and city levels.
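The article does not say which demographic adjustment the authors used. One common approach is post-stratification, sketched below under that assumption: each group's observed rate is weighted by that group's share of the real population instead of its share of the sample. The group labels, counts, and population shares are invented for illustration and are not taken from the study.

```python
def raw_rate(sample_counts, positive_counts):
    """Unadjusted share of sampled users flagged as disclosing unemployment."""
    return sum(positive_counts.values()) / sum(sample_counts.values())


def poststratified_rate(sample_counts, positive_counts, population_shares):
    """Weight each group's rate by its population share (shares sum to 1)."""
    rate = 0.0
    for group, share in population_shares.items():
        group_rate = positive_counts[group] / sample_counts[group]
        rate += share * group_rate
    return rate


# Hypothetical numbers: the sample skews young compared with the population.
sample_counts = {"18-29": 600, "30-49": 300, "50+": 100}
positive_counts = {"18-29": 30, "30-49": 9, "50+": 2}
population_shares = {"18-29": 0.20, "30-49": 0.35, "50+": 0.45}

unadjusted = raw_rate(sample_counts, positive_counts)
adjusted = poststratified_rate(sample_counts, positive_counts, population_shares)
print(f"unadjusted: {unadjusted:.4f}, adjusted: {adjusted:.4f}")
```

In this made-up example the over-represented younger group has the highest rate, so the adjusted estimate comes out lower than the raw one.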

Children exposed to high levels of screen time before age 2 showed changes in brain development that were linked to slower decision-making and increased anxiety by their teenage years, according to new research by Asst. Prof. Tan Ai Peng and her team from A*STAR Institute for Human Development and Potential (A*STAR IHDP) and National University of Singapore (NUS) Yong Loo Lin School of Medicine, using data from the Growing Up in Singapore Towards Healthy Outcomes (GUSTO) cohort.

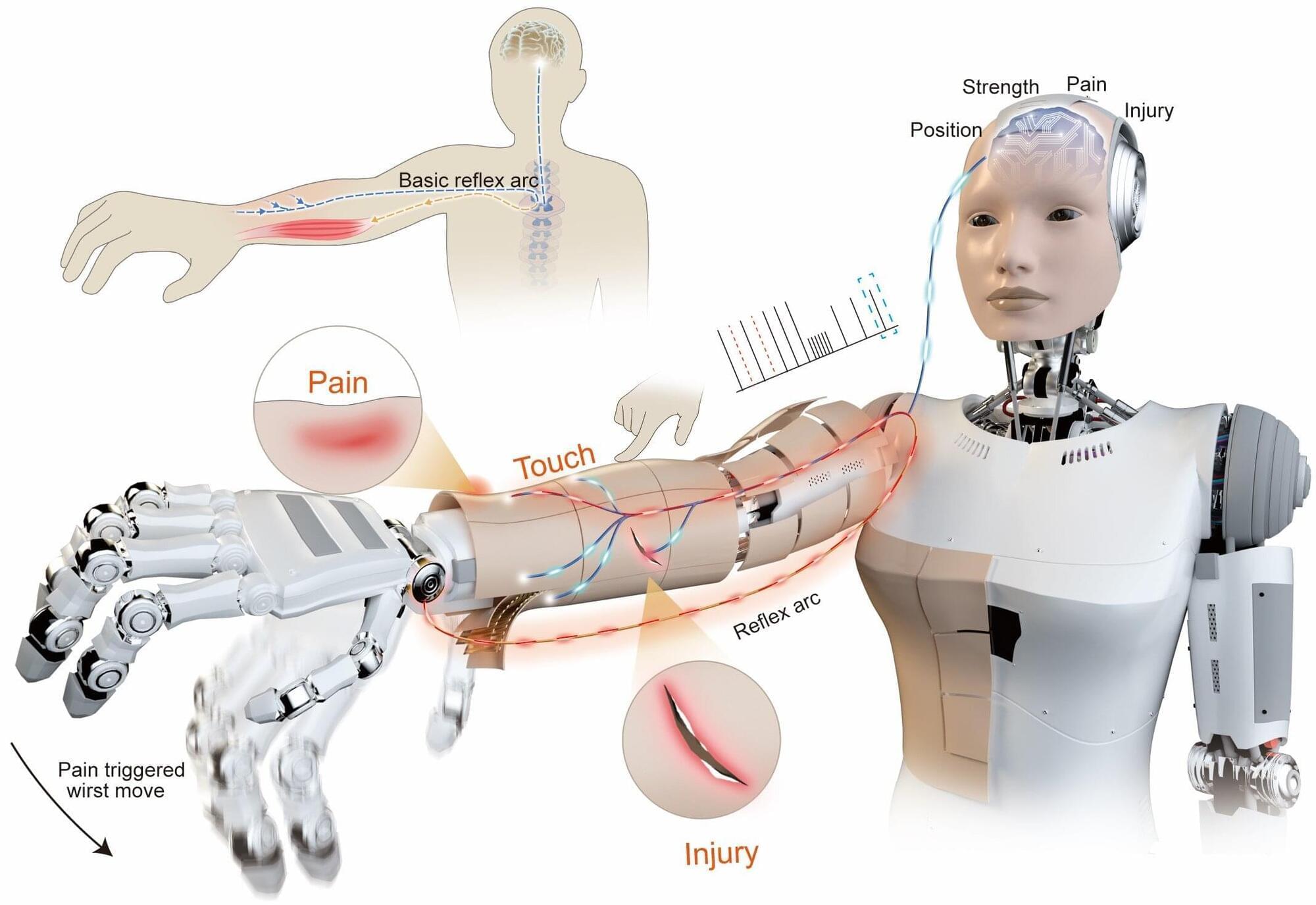

If you accidentally put your hand on a hot object, you’ll naturally pull it away fast, before you have to think about it. This happens thanks to sensory nerves in your skin that send a lightning-fast signal to your spinal cord, which immediately activates your muscles. The speed at which this happens helps prevent serious burns. Your brain is only informed once the movement has already started.

If something similar happens to a humanoid robot, it typically has to send sensor data to a central processing unit (CPU), wait for the system to process it, and then send a command to the arm’s actuators to move. Even a brief delay can increase the risk of serious damage.

But as humanoid robots move out of labs and factories and into our homes, hospitals and workplaces, they will need to be more than just pre-programmed machines if they are to live up to their potential. Ideally, they should be able to interact with the environment instinctively. To help make that happen, scientists in China have developed a neuromorphic robotic e-skin (NRE-skin) that gives robots a sense of touch and even an ability to feel pain.

Cars from companies like Tesla already promise hands-free driving, but recent crashes show that today’s self-driving systems can still struggle in risky, fast-changing situations.

Now, researchers say the next safety upgrade may come from an unexpected source: The brains of the people riding inside those cars.

In a new study appearing in Cyborg and Bionic Systems, Chinese researchers tested whether monitoring passengers’ brain activity could help self-driving systems make safer decisions in risky situations.