At any given moment, trillions of particles called neutrinos are streaming through our bodies and every material in our surroundings, without noticeable effect. Far lighter than electrons, these ghostly entities are the most abundant particles with mass in the universe.

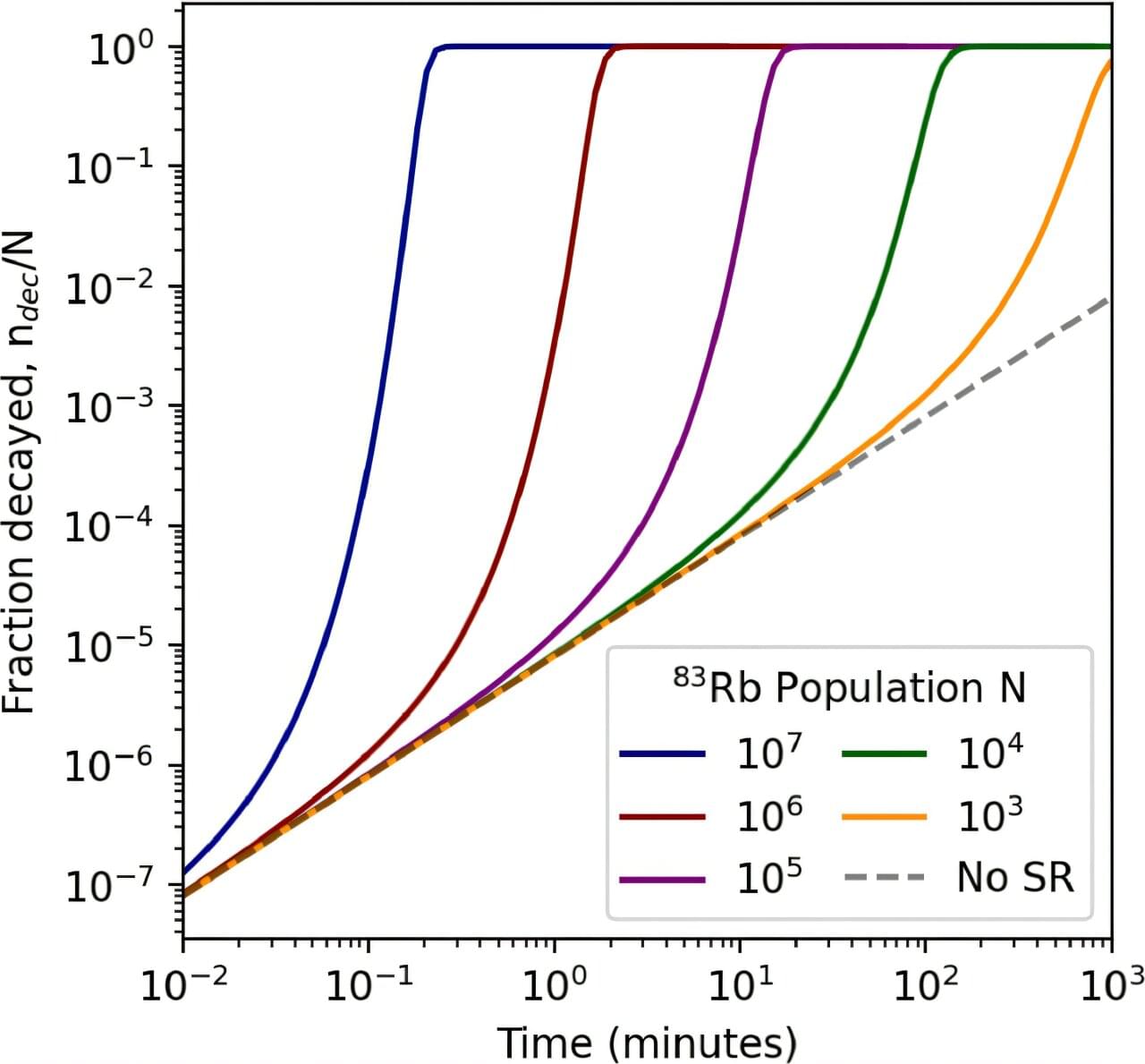

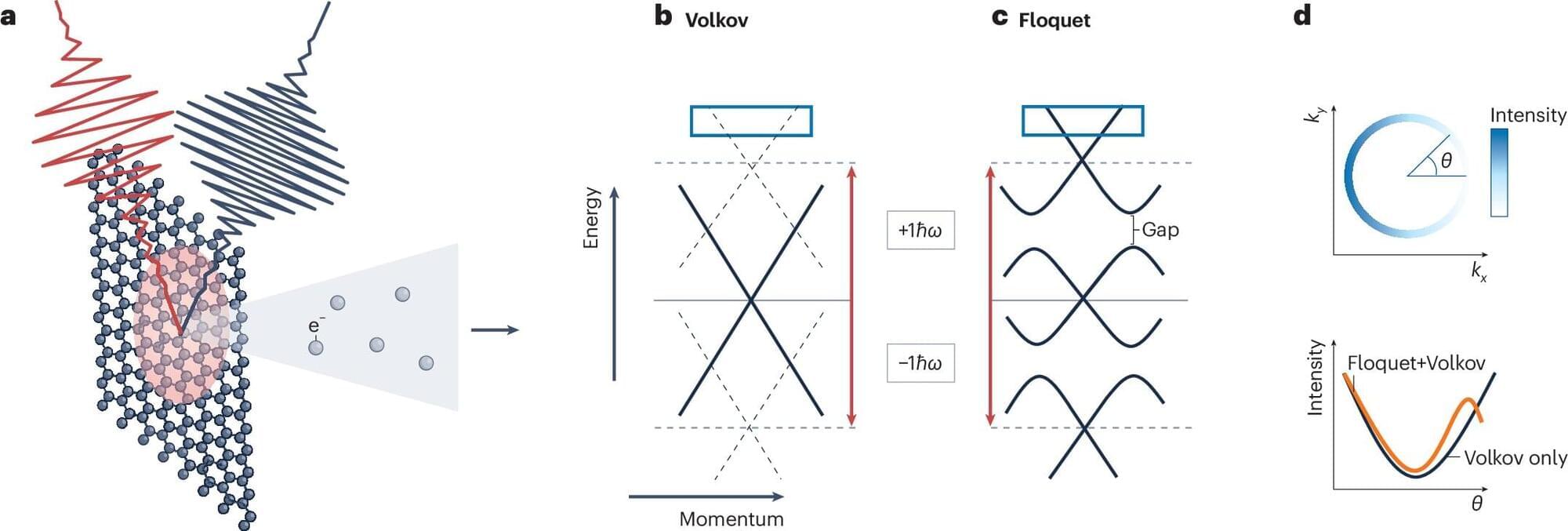

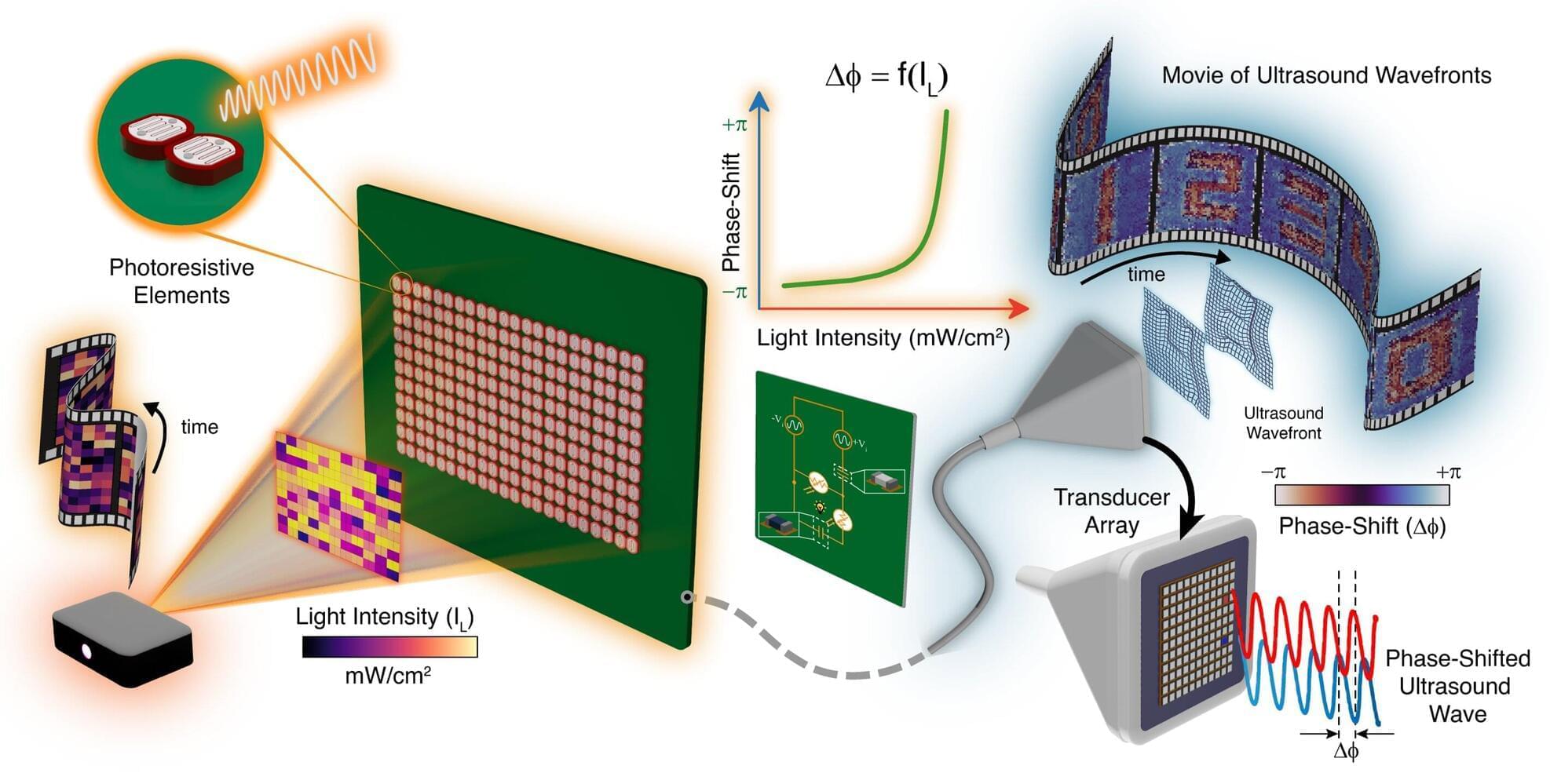

The exact mass of a neutrino is a big unknown. The particle is so light, and interacts so rarely with matter, that its mass is incredibly difficult to measure. Scientists attempt to do so by harnessing nuclear reactors and massive particle accelerators to generate unstable atoms, which then decay into various byproducts including neutrinos. In this way, physicists can manufacture beams of neutrinos that they can probe for properties including the particle’s mass.

Now MIT physicists propose a much more compact and efficient way to generate neutrinos that could be realized in a tabletop experiment.