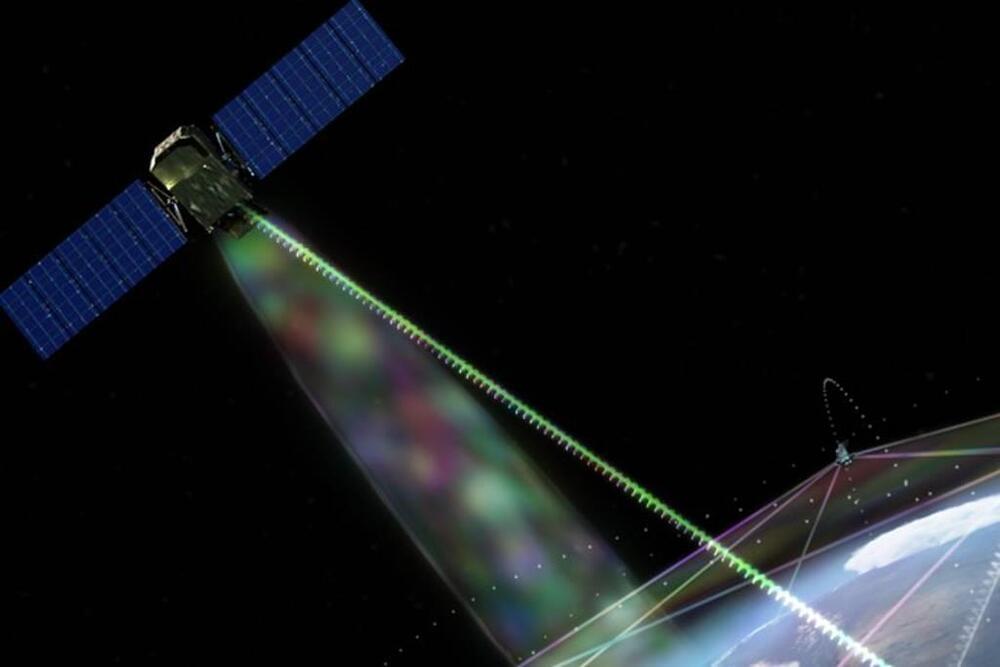

An increase in counterspace weapons is challenging the military’s approach of placing all of its billion-dollar eggs (exquisite satellites) in one basket (far-out geosynchronous orbit).

FORT SILL, Okla., Aug. 17, 2021 — The U.S. Army has completed a directed-energy maneuver short-range air defense (DE M-SHORAD) “combat shoot-off” — its first development and demonstration of a high-power laser weapon. As part of the DE M-SHORAD combat shoot-off, the Army Rapid Capabilities and Critical Technologies Office (RCCTO), alongside the Air and Missile Defense Cross-Functional Team, the Fires Center of Excellence, and the U.S. Army Test and Evaluation Command, took a laser-equipped Stryker vehicle to Fort Sill, Okla. At the combat shoot-off, the Stryker faced a number of realistic scenarios designed to establish, for the first time in the Army, the desired characteristics for future DE M-SHORAD systems.

The results make “a significant step toward ignition,” the Lawrence Livermore National Laboratory announced on Tuesday.

At the National Ignition Facility, which is the size of three football fields, super-powerful laser beams recreate temperatures and pressures similar to those found in the cores of stars and giant planets and inside exploding nuclear weapons, a spokesperson tells CNBC.

On Aug. 8, laser light was focused onto a target the size of a BB, which resulted in “a hot-spot the diameter of a human hair, generating more than 10 quadrillion watts of fusion power for 100 trillionths of a second,” the written statement says.
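To put the quoted figures in perspective, energy is power multiplied by duration. The sketch below is only a back-of-the-envelope check: it treats the statement's "more than 10 quadrillion watts" as a constant 10 quadrillion watts over the full 100 trillionths of a second, which is a simplifying assumption, so the result is a rough lower bound and not a reported measurement.

```python
def pulse_energy_joules(power_watts: float, duration_seconds: float) -> float:
    """Energy of a constant-power pulse: E = P * t."""
    return power_watts * duration_seconds


# Figures quoted in the statement, treated here as exact (an assumption).
FUSION_POWER_WATTS = 10e15        # 10 quadrillion watts
PULSE_DURATION_SECONDS = 100e-12  # 100 trillionths of a second

energy = pulse_energy_joules(FUSION_POWER_WATTS, PULSE_DURATION_SECONDS)
print(f"Approximate fusion energy: {energy / 1e6:.2f} MJ")
```

Under these assumptions the pulse works out to roughly one megajoule: an enormous power level, but sustained for so short a time that the total energy is modest.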

What’s key is that the results make “a significant step toward ignition,” said a statement from the Lawrence Livermore National Laboratory.

In March, the Defense Health Agency, which oversees TRICARE, announced that by May, advanced behavioral analysis services outside of clinical settings will no longer be covered by the military insurance.

Registered behavior technicians help implement treatment and behavior plans that teach universally used behaviors and skills.

From April: Autism services for military families could be cut under DoD plan

Prior to the TRICARE changes, technicians could accompany children with autism to school and help facilitate the child’s learning.

Development of the aircraft isn’t focused solely on military use; Hermeus is intent on bringing innovation to commercial flight, too. “While this partnership with the US Air Force underscores US Department of Defense interest in hypersonic aircraft, when paired with Hermeus’ partnership with NASA announced in February 2021, it is clear that there are both commercial and defense applications for what we’re building,” said Hermeus CEO and co-founder AJ Piplica.

Hermeus’ Quarterhorse is a hypersonic aircraft that can fly at Mach 5 speeds, or 3,000 mph—fast enough to go from the US to Europe in 90 minutes.
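The speed and travel-time figures can be sanity-checked with simple distance = speed × time arithmetic. The sketch below uses the article's 3,000 mph and 90 minutes; the route length of roughly 3,460 miles (about New York to London) is an illustrative assumption that does not come from the article, and the calculation ignores takeoff, acceleration, and descent.

```python
def distance_miles(speed_mph: float, minutes: float) -> float:
    """Distance covered at a constant speed over the given number of minutes."""
    return speed_mph * minutes / 60.0


def travel_minutes(route_miles: float, speed_mph: float) -> float:
    """Time in minutes to cover a route at a constant speed."""
    return route_miles / speed_mph * 60.0


CRUISE_SPEED_MPH = 3000.0    # figure quoted in the article
ASSUMED_ROUTE_MILES = 3460.0  # illustrative assumption, roughly New York to London

print(f"Distance in 90 minutes: {distance_miles(CRUISE_SPEED_MPH, 90):.0f} miles")
print(f"Assumed route at cruise speed: {travel_minutes(ASSUMED_ROUTE_MILES, CRUISE_SPEED_MPH):.0f} minutes")
```

At a constant 3,000 mph, 90 minutes corresponds to 4,500 miles, so the quoted travel time leaves some margin for the slower phases of a transatlantic flight of the assumed length.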

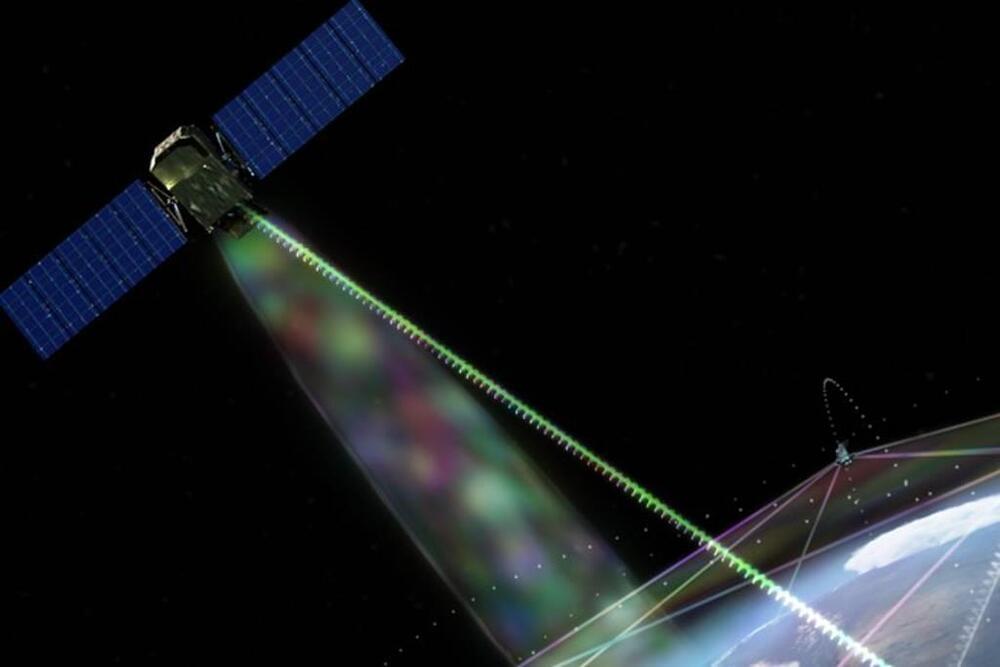

Artificial camouflage is the functional mimicry of the natural camouflage that can be observed in a wide range of species [1,2,3]. Since the 1800s in particular, there have been many studies on camouflage technology for military purposes, which increases survivability and the identification of an anonymous object as belonging to a specific military force [4,5]. Along with previous studies on camouflage technology and natural camouflage, artificial camouflage is becoming an important subject for recently evolving technologies such as advanced soft robotics [1,6,7,8] and electronic skin in particular [9,10,11,12]. Background matching and disruptive coloration are generally claimed to be the underlying principles of camouflage, covering many detailed subprinciples [13], and these necessitate not only simple coloration but also a selective expression of various disruptive patterns according to the background. While the active camouflage found in nature mostly relies on the mechanical action of the muscle cells [14,15,16], artificial camouflage is free from matching the actual anatomies of the color-changing animals and therefore incorporates much more diverse strategies [17,18,19,20,21,22], but the dominant technology for practical artificial camouflage in the visible regime (400–700 nm wavelength), especially the RGB domain, is not fully established so far. Since the most familiar and direct camouflage strategy is to exhibit a similar color to the background [23,24,25], a prerequisite of an artificial camouflage at a unit device level is to convey a wide range of the visible spectrum that can be controlled and changed as occasion demands [26,27,28]. At the same time, the corresponding unit should be flexible and mechanically robust, especially for wearable purposes, to easily cover the target body as attachable patches without interrupting the internal structures, while being compatible with the ambient conditions and the associated movements of the wearer [29,30].

System integration of the unit device into a complete artificial camouflage device, on the other hand, brings several additional issues to consider apart from the preceding requirements. Firstly, the complexity of the unit device is anticipated to increase as the sensor and the control circuit, which are required for the autonomous retrieval and implementation of the adjacent color, are integrated into a multiplexed configuration. Simultaneously, for a nontrivial body size, the concealment will no longer be effective with a single unit unless the background is monotone. As a simple solution to this problem, unit devices are often laterally pixelated [12,18] to achieve spatial variation in the coloration. Since the resolution is determined by the number of pixelated units and their sizes, the conception of a high-resolution artificial camouflage device that incorporates densely packed arrays of individually addressable multiplexed units leads to an explosive increase in system complexity. On the other hand, solely from the perspective of camouflage performance, the delivery of high spatial frequency information is important for more natural concealment, articulating the texture and the patterns of the surface to mimic the microhabitats of the living environments [31,32]. As a result, the development of autonomous and adaptive artificial camouflage at a complete device level with natural camouflage characteristics becomes an exceptionally challenging task.
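The scaling behind this complexity argument can be sketched with a simple count of individually addressable units. The patch dimensions and pixel pitches below are hypothetical values chosen only for illustration; they are not taken from the paper, and the sketch counts units only, without modeling the sensors or control circuitry each unit would need.

```python
import math


def unit_count(width_mm: float, height_mm: float, pitch_mm: float) -> int:
    """Number of square units of side `pitch_mm` needed to tile a rectangular patch."""
    if pitch_mm <= 0:
        raise ValueError("pixel pitch must be positive")
    return math.ceil(width_mm / pitch_mm) * math.ceil(height_mm / pitch_mm)


# Hypothetical 100 mm x 100 mm patch at progressively finer pitches.
for pitch in (10.0, 5.0, 1.0):
    print(f"pitch {pitch:>4} mm -> {unit_count(100.0, 100.0, pitch)} units")
```

Halving the pitch quadruples the number of units, so the addressing and wiring burden grows with the square of the linear resolution.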

Our strategy is to combine thermochromic liquid crystal (TLC) ink with vertically stacked multilayer silver (Ag) nanowire (NW) heaters to tackle the obstacles raised by the earlier concept and develop more practical, scalable, and high-performance artificial camouflage at a complete device level. The tunable coloration of TLC, whose reflective spectrum can be controlled over a wide range of the visible spectrum within a narrow range of temperature [33,34], has been acknowledged as a potential candidate for artificial camouflage applications before [21,34], but its usage has been more focused on temperature measurement [35,36,37,38] owing to its high sensitivity to temperature change. This sensitivity to temperature is indeed unfavorable for thermal stability against changes in the external environment, but it also enables a compact input range and low power consumption during operation once the temperature is accurately controlled.

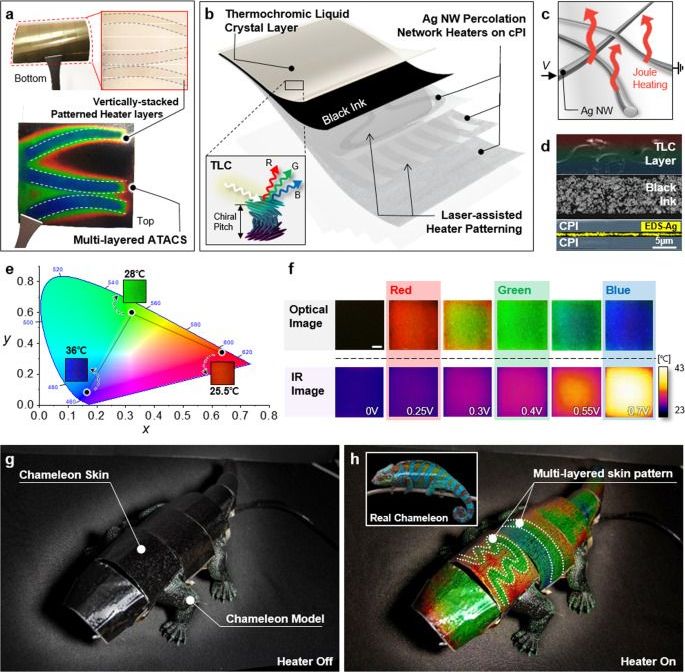

AIR PLASMA BREATHING via Ground Stations, in lieu of on-board energy supply: Recently, both a German team and a Chinese team have demonstrated jet engines capable of as much thrust as a traditional jet engine, but powered only by electricity. In both cases, the engine uses large amounts of energy to turn ambient atmosphere into plasma, then jettisons it via magnetic nozzles. This is to be differentiated from space ion drives, which use tiny amounts of fuel, ejected at high velocities, to slowly accelerate a vehicle in free space. By contrast, this new type of engine has huge amounts of fuel available to it in the form of the ambient atmosphere. Such craft could operate in any planetary atmosphere in our solar system, whether on Venus, Earth, Mars, or the gas giant or ice giant planets. The only bottleneck holding this type of engine back from replacing all current airplanes is the lack of a sufficiently dense on-board energy source. The most obvious enabling technology for this new type of jet, which will require no fuel for its entire lifetime since its fuel will be the atmosphere, is fusion energy. Fusion is dense enough to fit into a small package, easily mounted on an airplane. Until fusion is obtained, there is one other possibility that is currently available: beaming energy to a flying vehicle from ground stations. An air-plasma-breathing vehicle, whether a self-standing airplane or a partial booster phase for a rocket to low Earth orbit, would have to follow a trajectory within direct line of sight of a series of ground beaming stations. A string of such stations would be akin to a land highway, a corridor within which air traffic or space-bound vehicles could travel. Such a corridor would be easy to create. Even over ocean, aircraft carriers or other nuclear vessels could transmit large amounts of energy to such vehicles.
For rockets travelling to orbit, such a system would reduce reaction mass, since a portion of their fuel would not be carried by the vehicle. File: compilation of papers on beamed energy for flying vehicles:

Beam-powered propulsion, also known as directed energy propulsion, is a class of aircraft or spacecraft propulsion that uses energy beamed to the spacecraft from a remote power plant to provide energy. The beam is typically either a microwave or a laser beam and it is either pulsed or continuous. A continuous beam lends itself to thermal rockets, photonic thrusters and light sails, whereas a pulsed beam lends itself to ablative thrusters and pulse detonation engines.

A commonly quoted rule of thumb is that reaching low Earth orbit takes about one megawatt of beamed power per kilogram of payload while the vehicle is being accelerated.
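As a rough illustration of what this rule of thumb implies, the sketch below scales the quoted figure of one megawatt per kilogram linearly with payload mass. The function name and the sample payload masses are hypothetical, and the linear scaling is a simplifying assumption; it ignores beam losses, vehicle mass, and trajectory.

```python
# Rule-of-thumb constant from the text: about 1 MW of beamed power per kg of payload.
MEGAWATTS_PER_KG = 1.0


def beamed_power_mw(payload_kg: float) -> float:
    """Estimated beamed power in MW needed while accelerating a payload to low Earth orbit."""
    if payload_kg < 0:
        raise ValueError("payload mass must be non-negative")
    return payload_kg * MEGAWATTS_PER_KG


# Hypothetical payload masses, chosen only to show the scaling.
for payload in (1, 100, 1000):
    print(f"{payload:>5} kg payload -> about {beamed_power_mw(payload):,.0f} MW of beamed power")
```

Even a one-tonne payload would call for on the order of a gigawatt of delivered power under this estimate, which is why the power source on the ground dominates the design.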

This technology is also part of the research aim of the To The Stars Academy of Arts & Sciences. I compiled the documents below to explore the research the U.S. government and military have already collected and what they have tested with regard to the technology.

You’re on PRO Robotics, and in this video we present the July 2021 news digest. New robots, drones, artificial intelligence and military robots, news from Elon Musk and Boston Dynamics. All the most interesting high-tech news for July in this issue. Be sure to watch the video to the end and tell us in the comments which news interests you most.

0:00 Announcement of the first part of the issue.

0:23 Home robot assistants and more.

10:50 Boston Dynamics news, Tesla Model S Plaid spontaneous combustion, Elon Musk’s new rocket, Richard Branson.

20:25 WAIC 2021 Robotics Exhibition. New robots, drones, cities of the future.

33:10 Artificial intelligence to program robots.

Drones are neat and fun and all that good stuff (I should probably add the caveat here that I’m obviously not referring to the big, terrible military variety), but when it comes to quadcopters, there’s always been the looming question of general usefulness. The consumer-facing variety are pretty much the exclusive realm of hobbyists and imaging.

We’ve seen a number of interesting applications for things like agricultural surveillance, real estate and the like, all of which are effectively extensions of that imaging capability. But a lot can be done with a camera and the right processing. One of the more interesting applications I’ve seen cropping up here and there is the warehouse drone — something perhaps a bit counterintuitive, as you likely (and understandably) associate drones with the outdoors.

Looking back, it seems we’ve actually had two separate warehouse drone companies compete in Disrupt Battlefield. There was IFM (Intelligent Flying Machines) in 2016 and Vtrus two years later. That’s really the tip of the iceberg for a big list of startups effectively pushing to bring drones to warehouses and factory floors.

The Air Force Research Laboratory at Kirtland Air Force Base has released a new analysis of the Department of Defense’s investments in directed energy technologies, or DE. The report, titled “Directed Energy Futures 2060,” makes predictions about what the state of DE weapons and applications will be 40 years from now and offers a range of scenarios in which the United States might find itself either leading the field in DE or lagging behind peer-state adversaries. In examining the current state of the art of this relatively new class of weapons, the authors claim that the world has reached a “tipping point” in which directed energy is now critical to successful military operations.

One of the document’s most eyebrow-raising predictions is that a “force field” could be created by “a sufficiently large fleet or constellation of high-altitude DEW systems” that could provide a “missile defense umbrella, as part of a layered defense system, if such concepts prove affordable and necessary.” The report cites several existing examples of what it calls “force fields,” including the Active Denial System, or “pain ray,” as well as non-kinetic counter-drone systems, and potentially counter-missile systems, that use high-power microwaves to disable or destroy their targets. Most intriguingly, the press release claims that “the concept of a DE weapon creating a localized force field may be just on the horizon.”