Learn more about MinnaLearn’s and the University of Helsinki’s AI course — no programming or complicated math required.

Contents: Defining computational neuroscience; The evolution of computational neuroscience; Computational neuroscience in the twenty-first century; Some examples of computational neuroscience; The SpiNNaker supercomputer; Frontiers in computational neuroscience; References; Further reading

The human brain is a complex and unfathomable supercomputer. How it works is one of the ultimate mysteries of our time. Scientists working in the exciting field of computational neuroscience seek to unravel this mystery and, in the process, help solve problems in diverse research fields, from Artificial Intelligence (AI) to psychiatry.

Computational neuroscience is a highly interdisciplinary and thriving branch of neuroscience that uses computational simulations and mathematical models to develop our understanding of the brain. Here we look at what computational neuroscience is, how it has grown over the last thirty years, what its applications are, and where it is going.

Worms can entangle themselves into a single, giant knot, only to quickly unravel themselves from the tightly wound mess within milliseconds. Now, math shows how they do it.

Researchers studied California blackworms (Lumbriculus variegatus), thin worms that can grow up to 4 inches (10 centimeters) long, in the lab, watching as the worms intertwined by the thousands. Even though it took the worms minutes to form a ball-shaped blob akin to a snarled tangle of Christmas lights, they could untangle from the jumble in the blink of an eye when threatened, according to a study published April 28 in the journal Science.

It was Arthur C. Clarke who famously said that “Any sufficiently advanced technology is indistinguishable from magic” (although I’d argue that Jack Kirby and Jim Starlin rather perfected the idea). Now, a group of real-life scientists at the RIKEN Interdisciplinary Theoretical and Mathematical Sciences Program in Japan have taken it a step further by identifying a new quantum property to measure the weirdness of spacetime and officially calling it “magic.” From the scientific paper “Probing chaos by magic monotones,” recently published in the journal Physical Review D:

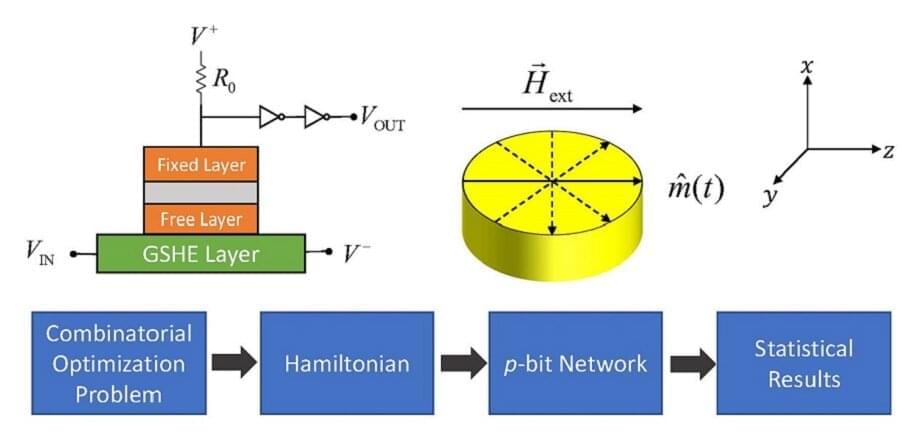

According to computational complexity theory, mathematical problems have different levels of difficulty in the context of their solvability. While a classical computer can solve some problems (P) in polynomial time (i.e., the time required for solving P is a polynomial function of the input size), it often fails to solve NP problems that scale exponentially with the problem size and thus cannot be solved in polynomial time. Classical computers based on semiconductor devices are, therefore, inadequate for solving sufficiently large NP problems.
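To make the scaling contrast concrete, here is a minimal sketch. The two step-count functions are illustrative assumptions and are not taken from the study: a quadratic stands in for a polynomial-time algorithm, and 2^n stands in for an exhaustive search over n binary choices.

```python
def polynomial_steps(n: int) -> int:
    """Step count for a hypothetical polynomial-time (quadratic) algorithm."""
    return n ** 2


def exponential_steps(n: int) -> int:
    """Step count for a hypothetical brute-force search over n binary choices."""
    return 2 ** n


if __name__ == "__main__":
    # Print both step counts side by side for growing input sizes.
    for n in (10, 20, 40, 80):
        print(f"n={n:>2}  polynomial={polynomial_steps(n):>6}  exponential={exponential_steps(n)}")
```

Doubling the input size from 40 to 80 multiplies the quadratic count by four, while the exponential count is squared.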

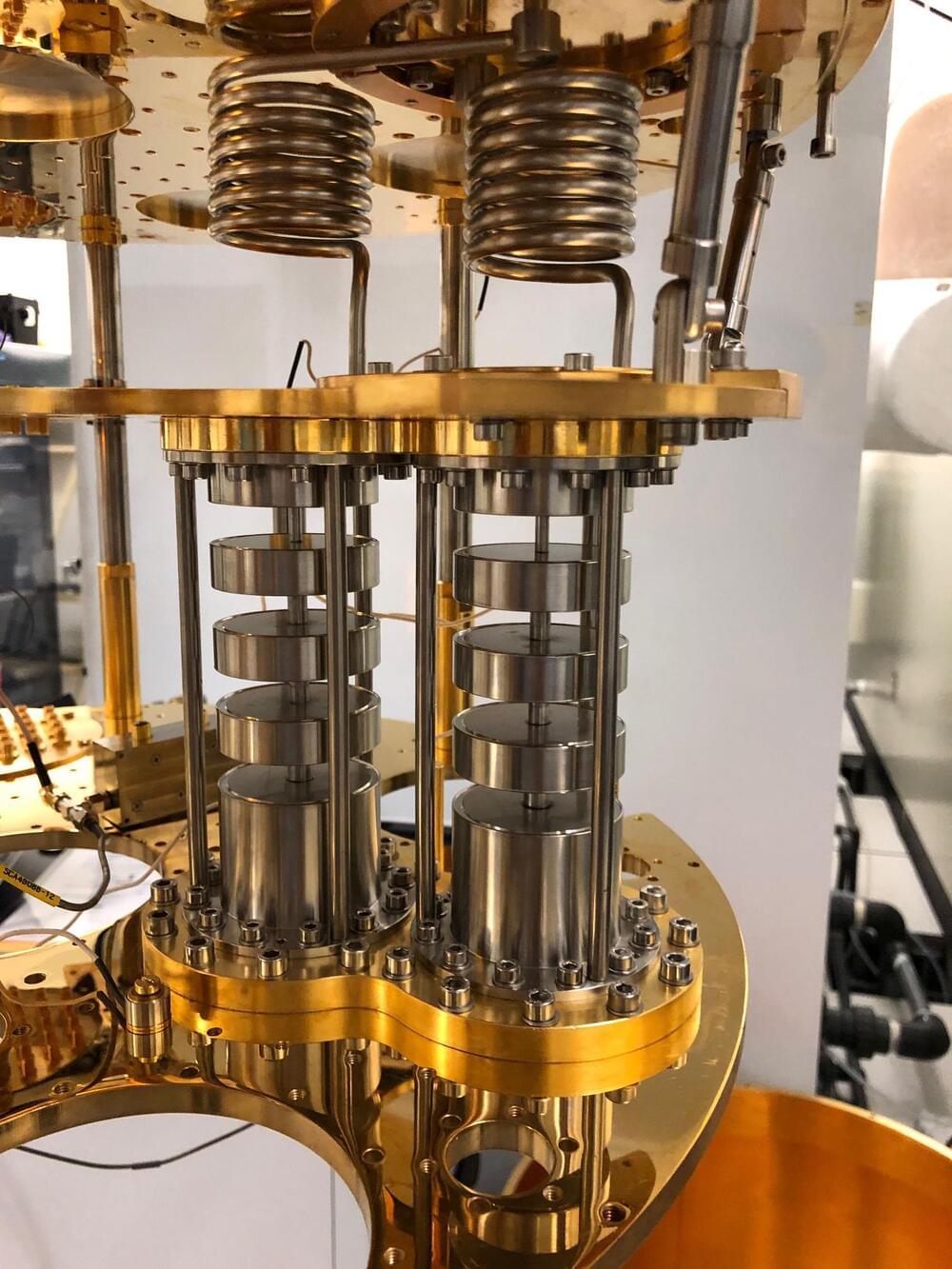

In this regard, quantum computers are considered promising as they can perform a large number of operations in parallel. This, in turn, speeds up the NP problem-solving process. However, many physical implementations are highly sensitive to thermal fluctuations. As a result, quantum computers often demand stringent experimental conditions, such as extremely low temperatures, for their implementation, making their fabrication complicated and expensive.

Fortunately, there is a lesser-known and as-yet underexplored alternative to quantum computing, known as probabilistic computing. Probabilistic computing utilizes what are called “stochastic nanodevices,” whose operations rely on thermal fluctuations, to solve NP problems efficiently. Unlike in the case of quantum computers, thermal fluctuations facilitate problem solving in probabilistic computing. As a result, probabilistic computing is, in fact, easier to implement in real life.

This video tells the story of two geniuses, Albert Einstein and the famous logician Kurt Gödel. It is about their time at the IAS in Princeton, New Jersey, where they walked together and discussed many things. For Gödel, Einstein was his best friend, and until his last days he remained close to Einstein. Their temperaments were opposite, yet they were very good friends. What did they talk about with each other? What did they share? What were their thoughts? For Gödel, Einstein was more like a guide, and for Einstein, it was a great pleasure to walk with him.

In the first episode, we explore their first meeting and how their friendship developed.

Channel: @physicsforstudents

Liquid-crystal elastomers (LCEs) are shape-shifting materials that stretch or squeeze when stimulated by an external input such as heat, light, or a voltage. Designing these materials to produce desired shapes is a challenging math problem, but Daniel Castro and Hillel Aharoni from the Weizmann Institute of Science, Israel, have now provided an analytical solution for flat materials that shape-shift within a single plane—like font-changing letters on a page [1]. Such “planar” designs could help in producing rods that change their cross section (from, say, round to square) without buckling.

LCEs consist of networks of polymer fibers containing liquid-crystal molecules. When exposed to a stimulus, the molecules align in a way that causes the material to shrink or extend in a predefined direction—called the director. Researchers can design an LCE by choosing the director orientation at each point in the material. However, calculating the “director field” for an arbitrary shape change is difficult, so approximate methods are typically used.

Castro and Aharoni focused on a specific design problem: how to create an LCE that stretches only in two dimensions. These planar LCEs often suffer from residual stress that causes the material to wrinkle or buckle out of the plane. The researchers showed that finding a buckle-free design is similar to a well-known mathematical problem that has been studied in other contexts, such as minimizing the mass of load-carrying structures. Taking inspiration from these previous studies, Castro and Aharoni provided a method for exactly deriving the director field for any desired planar LCE. “Our results could be readily implemented by a wide range of experimentalists, as well as by engineers and designers,” Aharoni says.

For one, classical physics can predict, with simple mathematics, how an object will move and where it will be at any given point in time and space. How objects interact with each other and their environments follows laws we first encounter in high school science textbooks.

What happens in minuscule realms isn’t so easily explained. At the level of atoms and their parts, measuring position and momentum simultaneously yields only probability. Knowing a particle’s exact state is a zero-sum game in which classical notions of determinism don’t apply: the more certain we are about its momentum, the less certain we are about where it will be.
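The trade-off described above is usually written as Heisenberg's uncertainty relation. The symbols are not from the text and are introduced here for illustration: Δx and Δp are the standard deviations of position and momentum, and ħ is the reduced Planck constant.

$$
\Delta x \, \Delta p \ge \frac{\hbar}{2}
$$

Because the product has a fixed lower bound, shrinking the uncertainty in momentum forces the uncertainty in position to grow, and vice versa.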

We’re not exactly sure what it will be, either. That particle could be both an electron and a wave of energy, existing in multiple states at once. When we observe it, we force a quantum choice, and the particle collapses from its state of superposition into one of its possible forms.

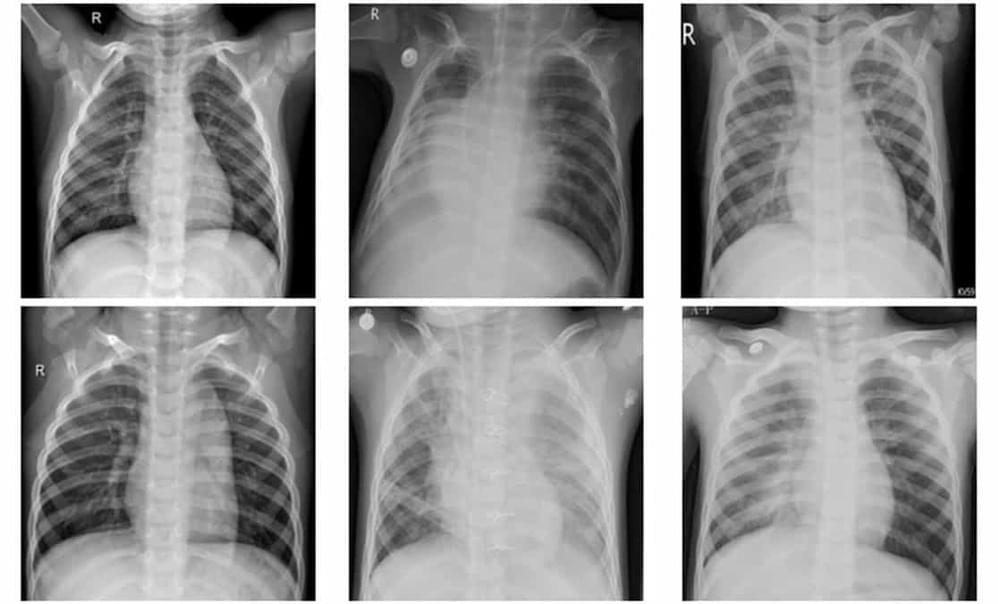

Pneumonia is a potentially fatal lung infection that progresses rapidly. Patients with pneumonia symptoms – such as a dry, hacking cough, breathing difficulties and high fever – generally receive a stethoscope examination of the lungs, followed by a chest X-ray to confirm diagnosis. Distinguishing between bacterial and viral pneumonia, however, remains a challenge, as both have similar clinical presentation.

Mathematical modelling and artificial intelligence could help improve the accuracy of disease diagnosis from radiographic images. Deep learning has become increasingly popular for medical image classification, and several studies have explored the use of convolutional neural network (CNN) models to automatically identify pneumonia from chest X-ray images. It’s critical, however, to create efficient models that can analyse large numbers of medical images without false negatives.

Now, K M Abubeker and S Baskar at the Karpagam Academy of Higher Education in India have created a novel machine learning framework for pneumonia classification of chest X-ray images on a graphics processing unit (GPU). They describe their strategy in Machine Learning: Science and Technology.