Batteries that work harder, better, faster, stronger?

Connected workers benefit from enhanced collaboration through digital communication platforms. This is particularly impactful in scenarios where quality issues arise and require immediate attention. Seamless communication channels allow for swift coordination between different departments, including production, quality control, and maintenance, facilitating quick resolutions to quality challenges and minimizing the impact on the final product.

The Future of Manufacturing Excellence

The connected worker is proving to be a catalyst for transformative change in manufacturing quality control. As technology continues to advance, the integration of IoT, predictive maintenance, augmented reality, and data analytics will further empower workers to uphold and elevate product quality standards. Manufacturers embracing these advancements are not only ensuring the production of high-quality goods but are also positioning themselves at the forefront of Industry 4.0, where connectivity and innovation converge to redefine the future of manufacturing excellence.

We are witnessing a professional revolution where the boundaries between man and machine slowly fade away, giving rise to innovative collaboration.

Photo by Mateusz Kitka (Pexels)

As Artificial Intelligence (AI) continues to advance by leaps and bounds, it’s impossible to overlook the profound transformations that this technological revolution is imprinting on the professions of the future. A paradigm shift is underway, redefining not only the nature of work but also how we conceptualize collaboration between humans and machines.

As creator of the ETER9 Project (2), I perceive AI not only as a disruptive force but also as a powerful tool to shape a more efficient, innovative, and inclusive future. As we move forward in this new world, it’s crucial for each of us to contribute to building a professional environment that celebrates the interplay between humanity and technology, where the potential of AI is realized for the benefit of all.

Ok… here we go again! (Yes, this is real. Already being tested in full wafers.)

Lars Holmquist, a professor of design and innovation at Nottingham Trent University, said psychologists have long shown that humans interpret interactions with computers like real social relationships.

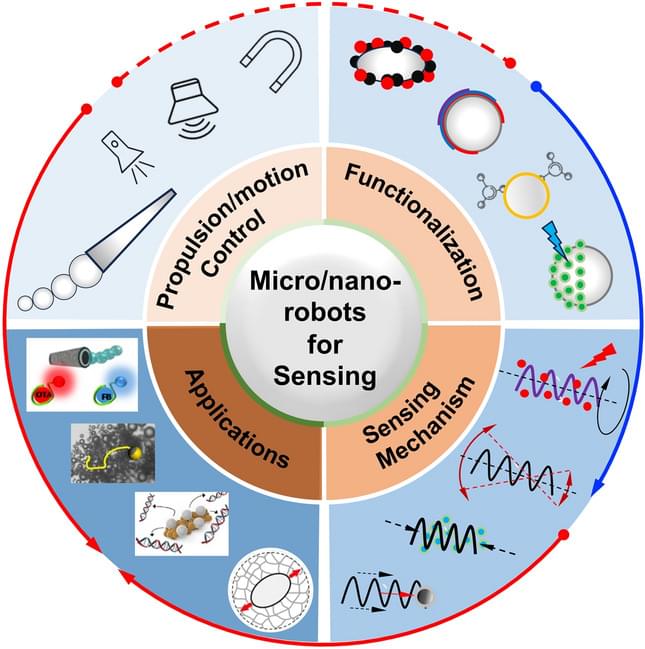

Untethered micro/nanorobots that can wirelessly control their motion and deformation state have gained enormous interest in remote sensing applications due to their unique motion characteristics in various media and diverse functionalities. Researchers are developing micro/nanorobots as innovative tools to improve sensing performance and miniaturize sensing systems, enabling in situ detection of substances that traditional sensing methods struggle to detect. Over the past decade, significant research progress has been made in designing sensing strategies based on micro/nanorobots, employing various coordinated control and sensing approaches. This review summarizes the latest developments in micro/nanorobots for remote sensing applications that utilize the self-generated signals of the robots, robot behavior, microrobotic manipulation, and robot-environment interactions.

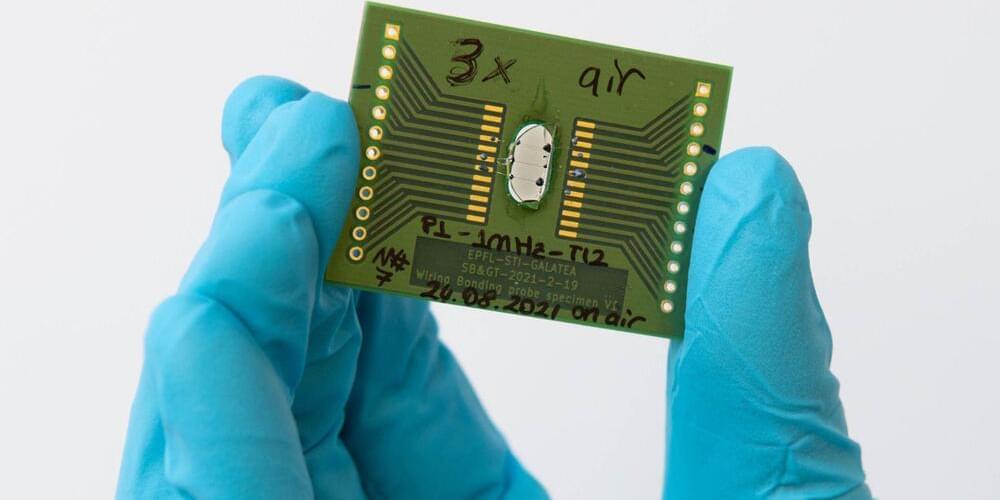

The team was thrilled with this discovery and saw the potential for creating durable patterns on the glass surface that could produce electricity when illuminated. This is a significant breakthrough because the technique does not require any additional materials, and all that is needed is tellurite glass and a femtosecond laser to create an active photoconductive material.

“Tellurium being semiconducting, based on this finding we wondered if it would be possible to write durable patterns on the tellurite glass surface that could reliably induce electricity when exposed to light, and the answer is yes,” explains Yves Bellouard, who runs EPFL’s Galatea Laboratory.

Embark on a greener maritime future with Crowley’s eWolf, the first all-electric tugboat in the US, setting sail to revolutionize sustainability on the seas.

In-development space innovations from the private space industry include space cannons, modular space station units, and 3D-printed rockets.