Innovative white-label voice ordering technology will enable select operators to increase revenue and maintain high-quality customer experiences, without increasing labor costs or sacrificing hospitality.

The backpack also boasts a camera, microphone, speaker, network interface, processor, and storage, of course.

A person with poor vision heading to work faces many challenges, such as difficulty identifying the traffic lights. One day, the person is handed a backpack that is able to recognize the objects surrounding them, describing the people and stores nearby.

Now, tech giant Microsoft’s latest innovation, an artificial intelligence (AI)-endowed smart backpack, could help support individuals with multiple tasks.

New research may lead to highly precise, power-efficient light measurement tools, driving advancements in various technology fields.

Researchers have discovered a way to improve optical frequency combs to measure light waves with much higher precision than previously accomplished. This could lead to the development and improvement of devices that require such precision, like atomic clocks. The researchers showed that dissipative Kerr solitons (DKSs) can create chip-based optical frequency combs with enough output power for use in optical atomic clocks and other practical applications.

N. Phillips/NIST/Wikimedia Commons.

More precise clocks.

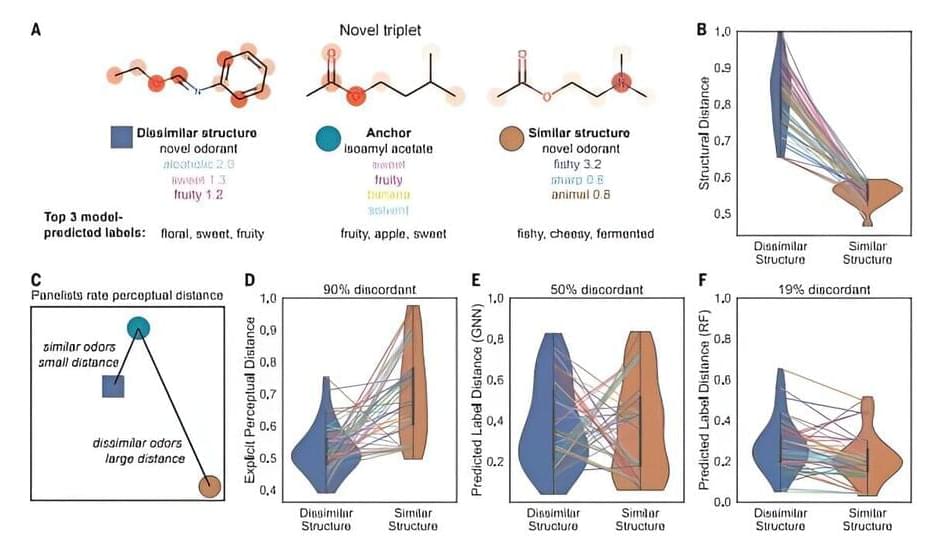

In a major breakthrough, scientists have built a tool to predict the odor profile of a molecule, just based on its structure. It can identify molecules that look different but smell the same, as well as molecules that look very similar but smell totally different. The research was published in Science.

Professor Jane Parker, University of Reading, said, “Vision research has wavelength, hearing research has frequency—both can be measured and assessed by instruments. But what about smell? We don’t currently have a way to measure or accurately predict the odor of a molecule, based on its molecular structure.”

“You can get so far with current knowledge of the molecular structure, but eventually you are faced with numerous exceptions where the odor and structure don’t match. This is what has stumped previous models of olfaction. The fantastic thing about this new ML-generated model is that it correctly predicts the odor of those exceptions.”

Chinese scientists have just made a massive breakthrough in developing 2D, one-atom-thick semiconductors, SCMP reports.

PonyWang/iStock.

Only one atom thick.

This is good news! The article says this could lead to treatment of other cancers.

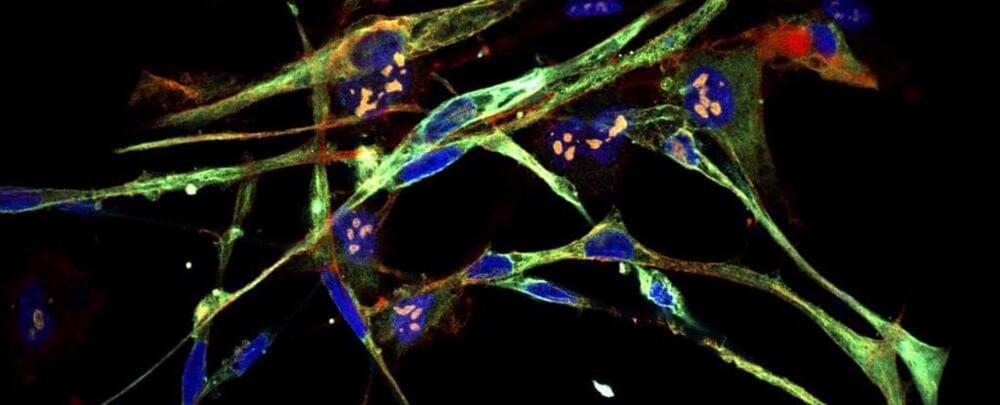

A particularly aggressive form of childhood cancer that forms in muscle tissue might have a new treatment option on the horizon.

Scientists have successfully induced rhabdomyosarcoma cells to transform into normal, healthy muscle cells. It’s a breakthrough that could see the development of new therapies for the cruel disease, and it could lead to similar breakthroughs for other types of human cancers.

“The cells literally turn into muscle,” says molecular biologist Christopher Vakoc of Cold Spring Harbor Laboratory.

1 Department of Biotechnology, School of Science, GITAM (Deemed to be University), Visakhapatnam, Andhra Pradesh, 530045, India; 2 Department of Cell and Developmental Biology, University of Illinois at Urbana-Champaign, Urbana, IL, 61801, USA

Correspondence: Sireesha V Garimella; Pankaj Chaturvedi

Abstract: Cancer continues to rank among the world’s leading causes of mortality despite advancements in treatment. Cancer stem cells, which can self-renew, are present in low abundance and contribute significantly to tumor recurrence, tumorigenicity, and drug resistance to various therapies. The drug resistance observed in cancer stem cells is attributed to several factors, such as cellular quiescence, dormancy, elevated aldehyde dehydrogenase activity, apoptosis evasion mechanisms, high expression of drug efflux pumps, protective vascular niche, enhanced DNA damage response, scavenging of reactive oxygen species, hypoxic stability, and stemness-related signaling pathways. Multiple studies have shown that mitochondria play a pivotal role in conferring drug resistance to cancer stem cells, through mitochondrial biogenesis, metabolism, and dynamics. A better understanding of how mitochondria contribute to tumorigenesis, heterogeneity, and drug resistance could lead to the development of innovative cancer treatments.

Jidoka Technologies, among the pioneers in the field of automated cognitive inspection for manufacturing, is happy to announce the launch of its innovative self-training software designed to revolutionise AI-based object detection techniques. In an era of rapidly evolving technologies, visual quality in manufacturing is not always binary (OK or NOK) and is highly subjective. It relies on people’s experience and expertise for decisions that must be made within seconds, despite the presence of rules and SOPs.

The criteria for accepting or rejecting defects in components can fluctuate due to factors like environmental conditions, the influence of external elements such as oil and dust on the part, and changes in standards based on the part’s functionality and its intended user. Although these variations are within the acceptable range, automated machine systems that rely on rule-based approaches would reject the parts, leading to a high percentage of false positives.

For over three years, Jidoka Technologies has been deploying its AI-driven software to address complex visual inspection tasks, with a track record of over 40 success stories spanning the automotive, consumer goods, print, and packaging industries. Customers have been asking for self-training in two use cases: where the environment changes or existing lines see variations, and where production is high-mix, low-volume, e.g. the PCB industry, where requirements change dynamically. Building on this experience, the organisation is now introducing its self-training software, empowering customers to independently train and deploy AI models on the go.

With AI models like DALL-E 2, Midjourney, Stable Diffusion, and Adobe’s Firefly, over 15 billion images have been created, each raising the ceiling of creativity.

In the blink of an eye, the world of digital art has undergone a transformative revolution catalyzed by the surge in AI-generated masterpieces. A recent report by the Artificial Intelligence-centric blog, Everypixel Journal.

Communities dedicated to AI art have sprung up across the digital landscape, acting as vibrant hubs for artists to hone their skills, exchange techniques, and create mesmerizing imagery. From the likes of Reddit to Twitter and Discord, a dynamic ecosystem of innovation has emerged, culminating in an astonishing volume of content.