Estimating quiet worlds, silent civilizations, and ancient relics in the Milky Way

What if advanced civilizations aren’t absent—they’re just waiting? What if they looked at our universe, full of burning stars and abundant energy, and decided it’s too hot, too expensive, too wasteful to be awake? What if everyone else has gone into hibernation, sleeping through the entire age of stars, waiting trillions of years for the universe to cool? The Aestivation Hypothesis offers a stunning solution to the Fermi Paradox: intelligent civilizations aren’t missing—they’re deliberately dormant, conserving energy for a colder, more efficient future. We might be the only ones awake in a sleeping cosmos.

Over the next 80 minutes, we’ll explore one of the most patient answers to why we haven’t found aliens. From thermodynamic efficiency to cosmic hibernation, from automated watchers keeping vigil to the choice between experiencing now versus waiting for optimal conditions trillions of years ahead, we’ll examine why the rational strategy might be to sleep through our entire era. This changes everything about the Fermi Paradox, the Drake Equation, and what it means to be awake during the universe’s most “expensive” age.

CHAPTERS:

0:00 — Introduction: The Patience of Stars.

4:30 — The Fermi Paradox Once More.

8:20 — Introducing the Aestivation Hypothesis.

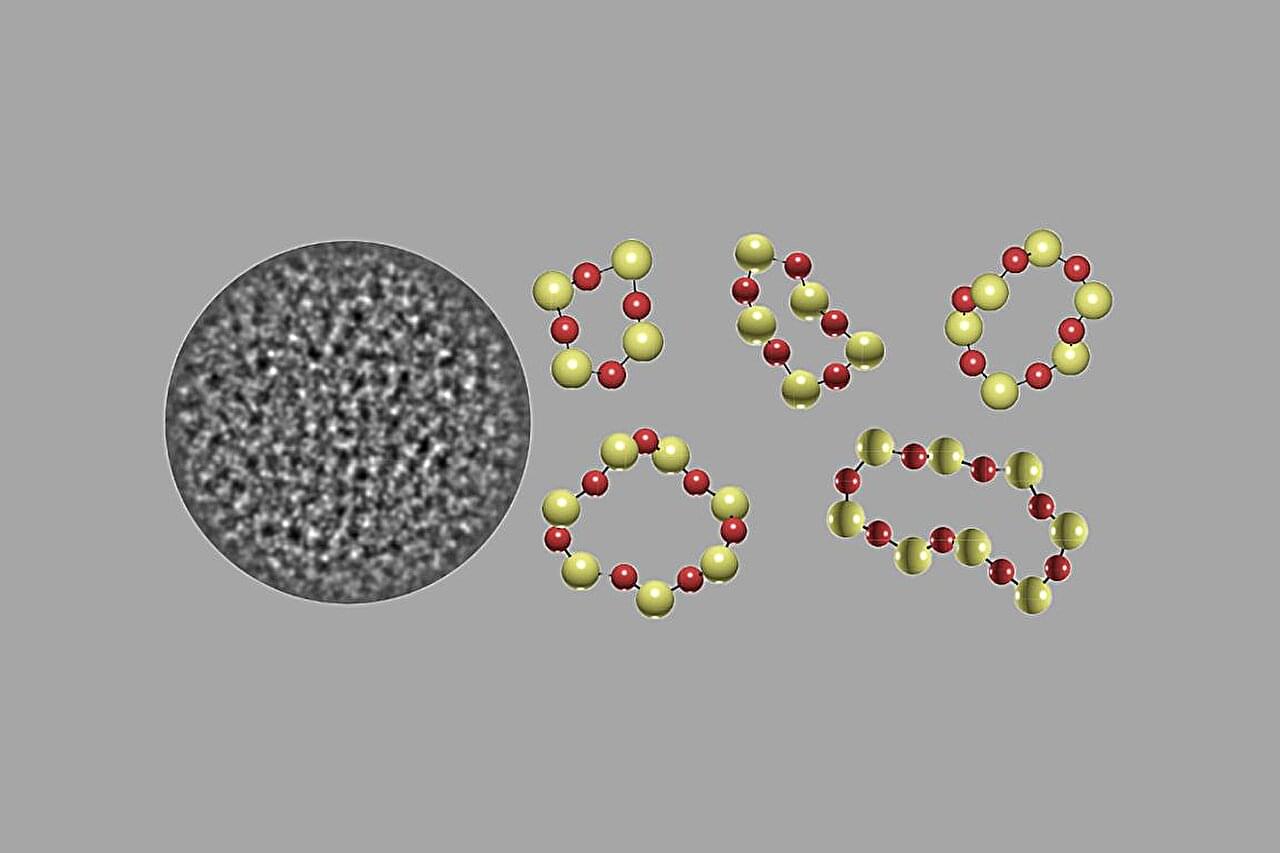

Researchers at the California NanoSystems Institute at UCLA published a step-by-step framework for determining the three-dimensional positions and elemental identities of atoms in amorphous materials. These solids, such as glass, lack the repeating atomic patterns seen in a crystal. The team analyzed realistically simulated electron-microscope data and tested how each step affected accuracy.

The team used algorithms to analyze rigorously simulated imaging data of nanoparticles so small that they are measured in billionths of a meter. For amorphous silica, the primary component of glass, they demonstrated 100% accuracy in mapping the three-dimensional positions of the constituent silicon and oxygen atoms, with a precision of about seven trillionths of a meter under favorable imaging conditions.

While 3D atomic structure determination has a history of more than a century, its application has been limited to crystal structures. Such techniques depend on averaging a pattern that is repeated trillions of times.

Researchers from the Faculty of Engineering at The University of Hong Kong (HKU) have developed two innovative deep-learning algorithms, ClairS-TO and Clair3-RNA, that significantly advance genetic mutation detection in cancer diagnostics and RNA-based genomic studies.

The pioneering research team, led by Professor Ruibang Luo from the School of Computing and Data Science, Faculty of Engineering, has unveiled two groundbreaking deep-learning algorithms—ClairS-TO and Clair3-RNA—set to revolutionize genetic analysis in both clinical and research settings.

Leveraging long-read sequencing technologies, these tools significantly improve the accuracy of detecting genetic mutations in complex samples, opening new horizons for precision medicine and genomic discovery. Both research articles have been published in Nature Communications.

Facebook admitted something that should have been front-page news.

In an FTC antitrust filing, Meta revealed that only 7% of time on Instagram and 17% on Facebook is spent actually socializing with friends and family.

The rest?

Algorithmically selected content. Short-form video. Engagement optimized by AI.

This wasn’t a philosophical confession. It was a legal one. But it quietly confirms what many of us have felt for years:

What we still call “social networks” are not social.

They are attention machines.

In this video I break down recent research exploring metacognition in large language model ensembles and the growing shift toward System 1 / System 2 style AI architectures.

Some researchers are no longer focusing on making single models bigger. Instead, they are building systems where multiple models interact, critique each other, and dynamically switch between fast heuristic reasoning and slower deliberate reasoning. In other words: AI systems that monitor and regulate their own thinking.

Artificial metacognition: Giving an AI the ability to ‘think’ about its ‘thinking’

https://theconversation.com/artificia…

From System 1 to System 2: A Survey of Reasoning Large Language Models

https://arxiv.org/abs/2502.17419

The Illusion of Thinking: Understanding the Strengths and Limitations of Reasoning Models via the Lens of Problem Complexity

https://dl.acm.org/doi/10.1145/374625…

Emotions? Towards Quantifying Metacognition and Generalizing the Teacher-Student Model Using Ensembles of LLMs

https://arxiv.org/abs/2502.17419

Metacognition

https://research.sethi.org/metacognit…

Robot passes the mirror test by inner speech

https://www.sciencedirect.com/science…

METIS: Metacognitive Evaluation for Intelligent Systems

https://research.sethi.org/metacognit…

Distinguishing the reflective, algorithmic, and autonomous minds: Is it time for a tri-process theory?

https://academic.oup.com/book/6923/ch…

#science #explained #news #research #sciencenews #ai #robots #artificialintelligence


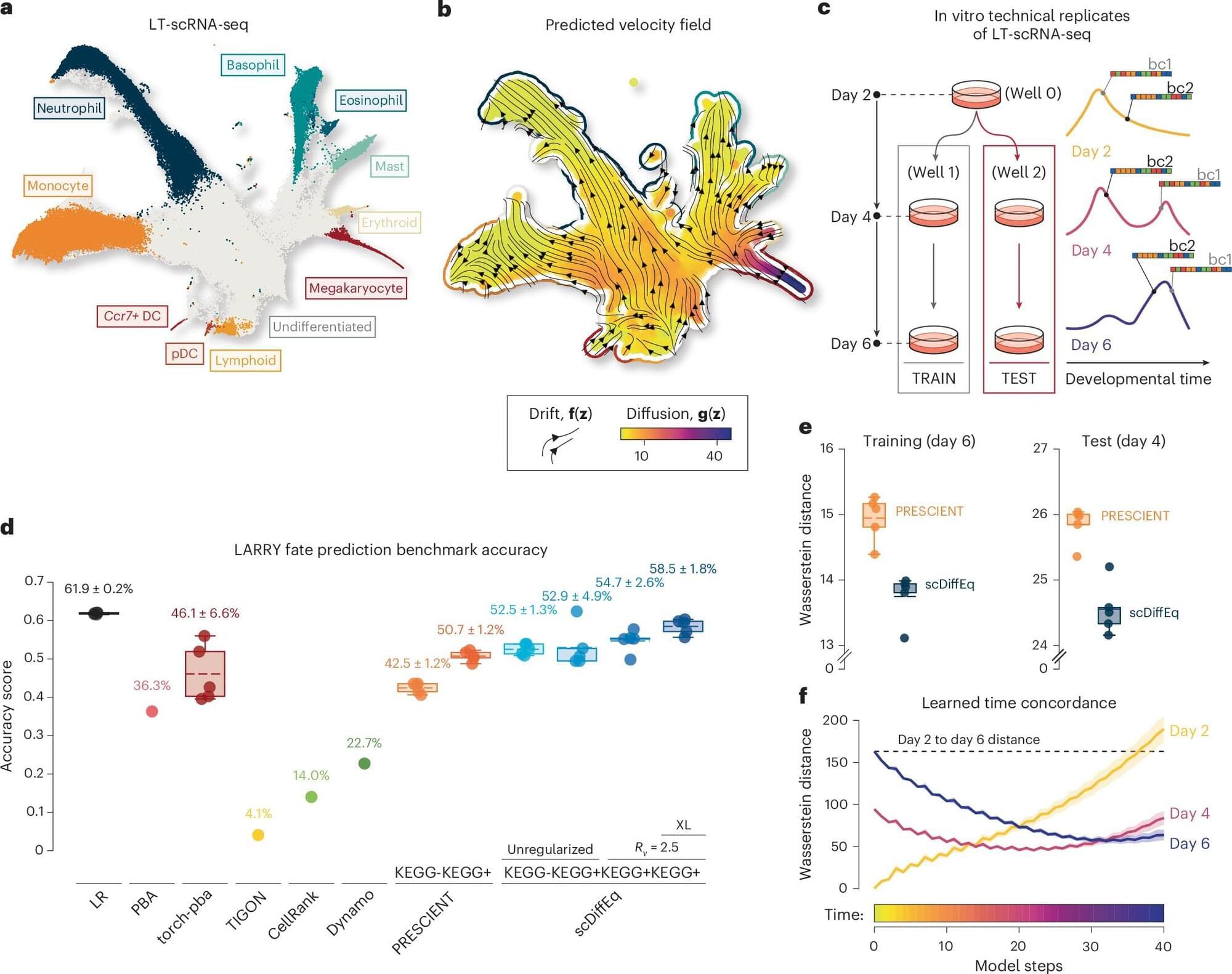

The development of humans and other animals unfolds gradually over time, with cells taking on specific roles and functions via a process called cell fate determination. The fate of an individual cell, that is, the type of cell it will become, is influenced both by predictable biological signals and by random physiological fluctuations.

Over the past decades, medical researchers and neuroscientists have been able to study these processes in greater depth, using a technique known as single-cell RNA sequencing (scRNA-seq). This is an experimental tool that can be used to measure the gene activity of individual cells.

To better understand how cells develop over time, researchers also rely on mathematical models. One of these models, dubbed the drift-diffusion equation, describes the evolution of systems as the combination of predictable changes (i.e., drift) and randomness (i.e., diffusion).
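
To make the drift/diffusion split concrete, here is a minimal one-dimensional sketch. It is not the model used in the study, whose details are not given here. It assumes the common stochastic form dX = drift(X) dt + sqrt(2 D) dW, integrated with the Euler-Maruyama method. The function names, the drift function `pull_toward_one`, and all parameter values are hypothetical, chosen only for illustration.

```python
import math
import random


def simulate_drift_diffusion(x0, drift, diffusion, dt, n_steps, seed=0):
    """Euler-Maruyama integration of dX = drift(X) dt + sqrt(2 * diffusion) dW.

    Illustrative assumption: a scalar state and a constant diffusion coefficient.
    """
    rng = random.Random(seed)  # seeded so that runs are reproducible
    x = x0
    path = [x]
    # Standard deviation of the random increment added at each time step.
    noise_scale = math.sqrt(2.0 * diffusion * dt)
    for _ in range(n_steps):
        # Predictable change (drift) plus a random kick (diffusion).
        x = x + drift(x) * dt + noise_scale * rng.gauss(0.0, 1.0)
        path.append(x)
    return path


def pull_toward_one(x):
    """Hypothetical drift: the state is steadily pulled toward the value 1."""
    return 1.0 - x


# With zero diffusion the trajectory is fully determined by the drift.
deterministic = simulate_drift_diffusion(
    0.0, pull_toward_one, diffusion=0.0, dt=0.01, n_steps=1000
)
# With nonzero diffusion the same drift is perturbed by random fluctuations.
noisy = simulate_drift_diffusion(
    0.0, pull_toward_one, diffusion=0.05, dt=0.01, n_steps=1000
)
```

Comparing `deterministic` with `noisy` shows the two ingredients separately: the first path settles smoothly near 1, while the second follows the same trend but keeps fluctuating around it.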

An artificial intelligence algorithm used newborn blood samples to shed light on the biological complexity of what can go wrong after preterm birth, a Stanford Medicine-led study found.